Because, when using “instanceTo”/“instanceUnderNode”, there is only one copy of the referenced mesh.

You see, as I recall, those methods work by creating a node hierarchy in which multiple parent nodes point to the same underlying mesh. As the renderer works its way through the hierarchy, it reaches that single mesh once for every path that leads to it, and so renders it more than once, each time with the transforms accumulated along that particular path, and thus with a different final transform.

Thus there is just one geom–and the resources consumed by just one geom–even though the mesh appears multiple times in the scene.
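The traversal idea can be sketched with a toy scene graph in plain Python. This is not how Panda3D is implemented internally: the `Node` class and `traverse` function are hypothetical, and transforms are reduced to simple translations. It shows one mesh object reached by three paths, and therefore drawn three times at three different positions.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass(eq=False)
class Node:
    """Toy scene-graph node (hypothetical, not Panda3D's PandaNode)."""
    name: str
    offset: tuple = (0.0, 0.0, 0.0)   # local translation, standing in for a full transform
    mesh: Optional[str] = None        # hypothetical mesh payload; None means "no geometry"
    children: list = field(default_factory=list)


def traverse(node, parent_pos=(0.0, 0.0, 0.0), draws=None):
    """Depth-first walk that records one draw per path reaching a mesh."""
    if draws is None:
        draws = []
    # Accumulate the transform along this particular path.
    pos = tuple(p + o for p, o in zip(parent_pos, node.offset))
    if node.mesh is not None:
        draws.append((node, pos))
    for child in node.children:
        traverse(child, pos, draws)
    return draws


shared = Node("sphere", mesh="sphere-geometry")   # the single shared mesh
root = Node("render")
for i in range(3):
    holder = Node(f"holder-{i}", offset=(2.0 * i, 400.0, 0.0))
    holder.children.append(shared)                # same object under every holder
    root.children.append(holder)

draws = traverse(root)
```

Here `draws` ends up with three entries that all refer to the same `shared` object, at x = 0, 2 and 4. A real renderer composes full matrices and performs culling, but the "one geom, many paths" structure is the same.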

That is one way of doing it–and, I would guess, a faster way.

Still, “instanceTo”/“instanceUnderNode” should, I now realise, offer some improvement in performance. I’d expect it to perform somewhere between the naive, presumably-slow approach of multiple meshes and the presumably-very-fast approach of shader-based instancing.

Oh, node-count can have a big effect on performance.

Indeed, keeping the number of nodes visible at any given moment to a minimum can be an important optimisation!

I mean, with the “instanceTo”/“instanceUnderNode” approach, you do still have intermediary nodes that might be used to move or hide individual cubes.

So I wouldn’t entirely write off either the “instanceTo”/“instanceUnderNode” approach or, as I mentioned above, the “RigidBodyCombiner” approach.

(I also mentioned MeshDrawer–but that’s essentially a procedural-geometry approach, which is what you were referring to.)

All of the above said, this is a testable hypothesis: put together a small program that can be easily configured to construct a bunch of cubes either naively (i.e. loading each as a separate mesh) or via “instanceTo”, and then see what sort of frame-time you get with each.

[edit]

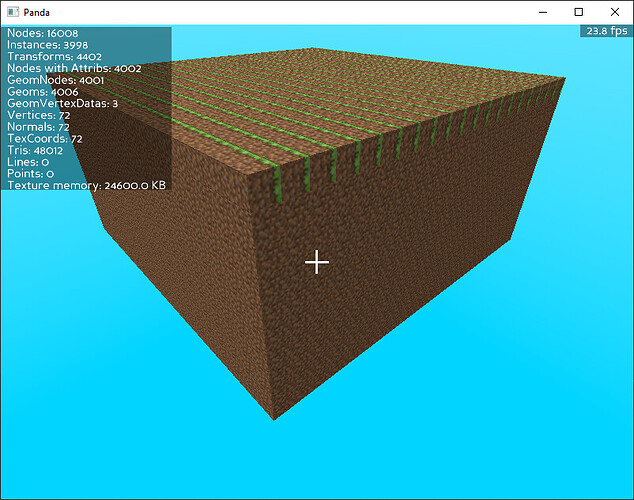

In fact, I decided to do just that.

Below is the program that I whipped up; please do critique it if I’ve missed something!

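```python
from panda3d.core import loadPrcFileData

# Show the frame-rate meter, in milliseconds per frame rather than frames per second.
# These settings must be loaded before ShowBase opens the window.
loadPrcFileData("", "show-frame-rate-meter #t")
loadPrcFileData("", "frame-rate-meter-milliseconds #t")

from direct.showbase.ShowBase import ShowBase

# Toggle between the two construction methods being compared.
USE_INSTANCE_TO = True


class Game(ShowBase):
    def __init__(self):
        ShowBase.__init__(self)

        if USE_INSTANCE_TO:
            # Load the model once; every sphere will be an instance of it.
            self.baseModel = self.loader.loadModel("sphere")
            generationMethod = self.generateByInstanceTo
        else:
            generationMethod = self.generateByNaiveLoading

        # Build a 100 x 100 grid of spheres in front of the camera.
        for i in range(100):
            for j in range(100):
                np = generationMethod()
                np.setPos((i - 50) * 2, 400, (j - 50) * 2)

    def generateByInstanceTo(self):
        # A fresh parent node per sphere, with the shared model instanced beneath it.
        np = self.render.attachNewNode("mew")
        self.baseModel.instanceTo(np)
        return np

    def generateByNaiveLoading(self):
        # Call loadModel() separately for each sphere (the "naive" approach).
        np = self.loader.loadModel("sphere")
        np.reparentTo(self.render)
        return np


app = Game()
app.run()
```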

As to the results…

Indeed, it seems that there’s little to no difference in performance for a static scene!

(Although a quick-and-dirty test with the RigidBodyCombiner suggested that it did help significantly, at least as long as the vertex-count per object was kept low. But then, if we’re talking about cubes, that is the case…)