Hi,

I am trying to render large, transparent 3D surface meshes (more than 50K vertices and 100K faces). In particular, every vertex has an associated alpha value that is stored in RGBA format.

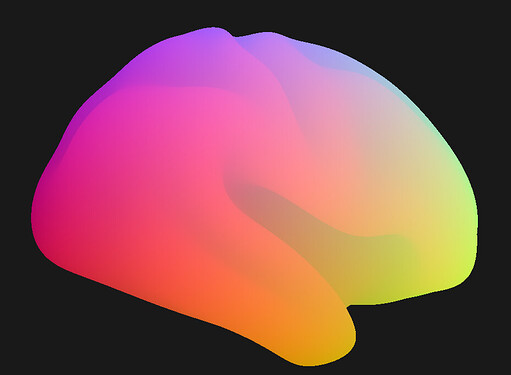

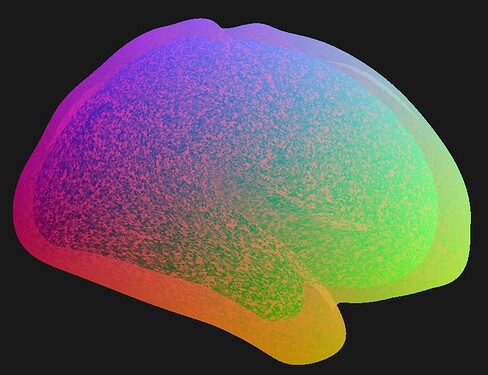

First of all, I should mention that the surfaces render perfectly without transparency:

But when I add transparency, it seems to fail to correctly identify which faces should render first. I tried reading various posts, including this one, but wasn’t able to resolve the issue.

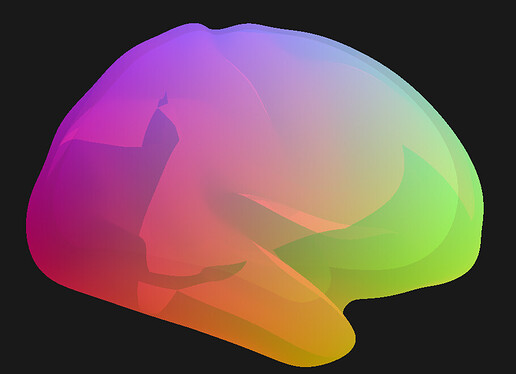

Let me give you a visual representation of what happens. If I add a simple transparency (alpha=0.7) the visualization changes to the following:

As can be seen, several transparency artifacts are introduced into the image (ideally it would look like a glass brain). Knowing the actual data structure of the model, I realized that the artifacts are in fact related to the order of the triangles in the mesh. Let me explain this with a couple of examples. If I change the order of the triangles in my GeomTriangles to sort them along the x-axis, this is what the render looks like from the positive x direction:

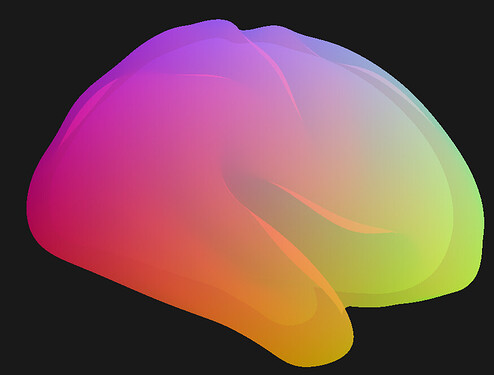

I’d say this is nearly as perfect as I would imagine. However, there’s a catch… If I rotate the camera to view the same object from the negative x direction, this is what it looks like:

As can be clearly seen, the right hemisphere, which was supposed to be rendered behind the left hemisphere, is actually rendered in front of it. So basically, triangles seem to be rendered according to the order in which they are stored in the GeomTriangles and not according to their proximity to the camera.
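To make the ordering concrete, here is a minimal sketch of the kind of reordering I mean, written with plain NumPy. The function name `sort_faces_back_to_front` and the `view_dir` parameter are only illustrative (they are not part of the Panda3D API), and the sketch assumes the mesh is available as a vertex array plus a face index array before it is written into the GeomTriangles:

```python
import numpy as np


def sort_faces_back_to_front(vertices, faces, view_dir):
    """Reorder faces so that those farthest along view_dir come first.

    vertices: (V, 3) array of positions.
    faces:    (F, 3) array of vertex indices, one row per triangle.
    view_dir: 3-vector pointing from the camera into the scene.
    """
    vertices = np.asarray(vertices, dtype=float)
    faces = np.asarray(faces, dtype=np.int64)
    view_dir = np.asarray(view_dir, dtype=float)

    # One centroid per triangle, shape (F, 3).
    centroids = vertices[faces].mean(axis=1)

    # Projection of each centroid onto the viewing direction:
    # a larger value means the triangle is farther from the camera.
    depth = centroids @ view_dir

    # Farthest first, so nearer triangles are drawn on top of them.
    order = np.argsort(-depth, kind="stable")
    return faces[order]
```

A fixed order like this can only be correct for one viewing direction, which matches what I see: sorting for a camera looking one way along the x-axis gives exactly the wrong order once the camera moves to the opposite side.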

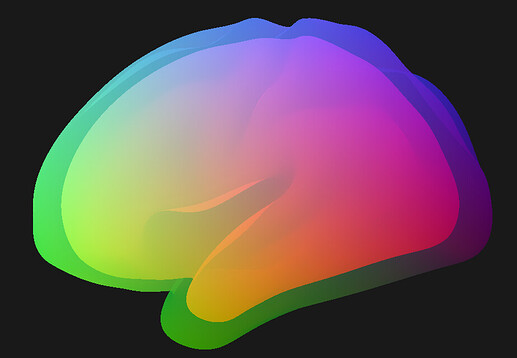

To further verify this point, I tried visualizing the same surface after randomly shuffling the triangles and this is how it looks:

It is clear how this is messing everything up!

I have tried a few other things (like disabling back-face culling) to see if they help, but nothing has worked so far. A long while ago, I had a similar problem with Mayavi, which I was able to fix by enabling depth peeling (use_depth_peeling=True).

I’m basically trying to find a similar option in panda3d but haven’t been successful so far. (I can see that there are ways to take depth into account as described here, but I am not sure how to use them appropriately.)

It would be great if you could point me in the right direction.

Thanks