Hi! New user here. I've been getting to know Panda3D after starting development in Ursina of a game idea I had early this year. I'm moving my codebase to be slightly more Panda3D-based to get at the graphics and NodePath more easily, and for the richer online documentation and support.

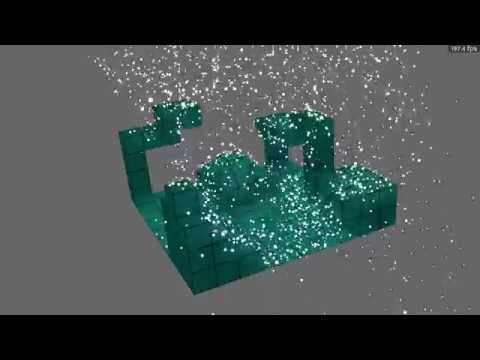

I'm building a game that's based on a particle simulation, which involves updating the positions of a large number of objects every tick. There's no player input to worry about for most of these, as the player interacts by adding and removing atoms rather than by moving them directly. I've got an integration method (fourth-order Runge-Kutta) running 4 iterations of my calculations every tick, and those iterations must be done sequentially, since each one produces weights that feed into the motion for the tick. Within a single iteration, however, the atoms can all be processed in parallel: no atom needs to know what any other atom will do next in order to do what it needs to do. They all just need to know the field state at that timestep, so the previous iteration (or the previous whole update tick) must have completed first.
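To make that structure concrete, here is a minimal sketch of one Runge-Kutta step in plain Python. It is not the code from the repository: the 1D atoms, the `derivative` function, and the "spring toward the centroid" force are all hypothetical stand-ins for the real field calculation. The point is only the shape: four stages run in order, and inside each stage every atom is evaluated independently against a read-only snapshot, so `map_fn` could be swapped for a parallel map.

```python
from functools import partial

def derivative(atom, snapshot):
    """Return (dx/dt, dv/dt) for one atom, reading only the shared snapshot.

    Hypothetical force law: a unit spring pulling toward the centroid.
    """
    x, v = atom
    centre = sum(px for px, _ in snapshot) / len(snapshot)
    return (v, -(x - centre))

def shifted(atoms, slopes, h):
    """Advance every atom by h along the given slopes (used between stages)."""
    return [(x + h * dx, v + h * dv) for (x, v), (dx, dv) in zip(atoms, slopes)]

def evaluate_stage(state, map_fn):
    """One RK4 stage: each atom is independent, so map_fn may be parallel."""
    return list(map_fn(partial(derivative, snapshot=state), state))

def rk4_step(atoms, dt, map_fn=map):
    # The four stages are sequential: each needs the previous stage's slopes.
    k1 = evaluate_stage(atoms, map_fn)
    k2 = evaluate_stage(shifted(atoms, k1, dt / 2), map_fn)
    k3 = evaluate_stage(shifted(atoms, k2, dt / 2), map_fn)
    k4 = evaluate_stage(shifted(atoms, k3, dt), map_fn)
    # Weighted average of the four slopes gives the update for the tick.
    return [
        (x + dt / 6 * (a[0] + 2 * b[0] + 2 * c[0] + d[0]),
         v + dt / 6 * (a[1] + 2 * b[1] + 2 * c[1] + d[1]))
        for (x, v), a, b, c, d in zip(atoms, k1, k2, k3, k4)
    ]

# Two atoms, symmetric about the origin, integrated for 100 ticks.
atoms = [(1.0, 0.0), (-1.0, 0.0)]
for _ in range(100):
    atoms = rk4_step(atoms, dt=0.01)
```

Because every stage returns a fresh list instead of mutating the atoms in place, no atom can observe a half-updated neighbour, which is what makes the per-atom work safe to parallelise.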

I have to admit, I'm really struggling to figure out how to use tasks for this. It looks like I'll need to use the async/await keywords in my update method, but am I forced to access global variables if I want the tasks to run asynchronously? It feels messy to give each calculator access to the entire dataset of positions and velocities, but I'm stuck for a way to 'feed' the correct variables to the tasks.
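One common way to avoid globals, sketched here under assumptions, is to keep the data on an object and hand its bound method to the task manager. The `Simulation` class, its fields, and the drift-only physics are hypothetical placeholders; `FakeTask` exists only so the snippet runs without Panda3D installed.

```python
class Simulation:
    """Owns positions and velocities so the task callback needs no globals."""

    def __init__(self, positions, velocities, dt):
        self.positions = list(positions)
        self.velocities = list(velocities)
        self.dt = dt

    def update(self, task):
        # Placeholder physics: plain drift, standing in for the real integrator.
        self.positions = [
            x + v * self.dt for x, v in zip(self.positions, self.velocities)
        ]
        # Returning task.cont asks the task manager to call update again next frame.
        return task.cont


class FakeTask:
    """Stand-in for the task object Panda3D passes in (assumption, for testing only)."""
    cont = "cont"


sim = Simulation([0.0, 1.0], [1.0, -1.0], dt=0.5)
result = sim.update(FakeTask())
```

In Panda3D itself this would be registered with something like `taskMgr.add(sim.update, "simulate")`. The other route is `taskMgr.add(func, "name", extraArgs=[sim], appendTask=True)`, which passes the listed values to a plain function and appends the task object as the last argument.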

This feels tangled up with another question: is there a way to manually continue tasks in between ticks? I was thinking it would make sense if there was some way to tell an entire task chain "queue all your tasks to be run, and deposit their return values in the following variables" and then "wait for all tasks to complete before repeating, but now deposit their return values here". Every time I try to think of how to do this, all I can come up with is either an absolute mess of duplicate code and extra tracking variables to manage flow, or getting tangled up in the messy polymorphism of the AsyncTask class again. I tried inheriting from that class as suggested in the API docs, but could not get the interpreter to consider the inheriting class to be an instance of AsyncTask, so I scrapped all the AsyncTask-related code.
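The "queue everything, wait for all of it, collect the results, repeat" pattern described above can be sketched with Python's standard `concurrent.futures` module rather than Panda3D's task chains. This is only an illustration of the flow: `run_stage`, `square`, and `plus_one` are hypothetical names, and threads will not speed up pure-Python arithmetic because of the GIL, so a real version would likely need NumPy or processes.

```python
from concurrent.futures import ThreadPoolExecutor

def run_stage(pool, work, inputs):
    """Queue one job per input, block until all finish, return results in input order."""
    return list(pool.map(work, inputs))

def square(x):
    # Hypothetical per-atom calculation for the first stage.
    return x * x

def plus_one(x):
    # Hypothetical per-atom calculation for the second stage.
    return x + 1

with ThreadPoolExecutor(max_workers=4) as pool:
    # Stage 2 cannot start until every stage-1 result has been deposited.
    stage1 = run_stage(pool, square, [1, 2, 3])
    stage2 = run_stage(pool, plus_one, stage1)
```

Each call to `run_stage` acts as the barrier: the return values land in a named variable, and the next stage only begins once that list is complete, with no extra bookkeeping variables.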

Sorry for the absolute mess of a post, but I wanted to give as much detail as I could on what I’ve tried so far and what’s gone on. I’m mostly testing things out here (please don’t judge my learner-code too badly!): chembattle/test zone/pandaTest.py at main · gossfunkel/chembattle · GitHub