Hi all,

At the outset, I should mention that although I have been working with Python for quite a long time (and with programming in general for a very long time), I have only been experimenting with Panda3D for a few weeks.

I have two questions; the second follows from the first:

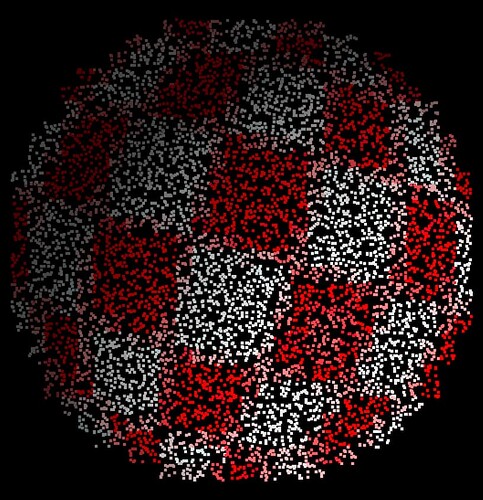

I can already load triangle-mesh models quite efficiently and manipulate them, light them, cast shadows, etc. But I have failed completely when it comes to handling point clouds in Panda3D. I have searched the Internet extensively, including this forum of course, but I have not found a clear answer to the question of how to load and visualize point clouds. I tried loading point cloud files with loadModel(), but although the call completes without errors, the resulting node is essentially “empty” (for example, getTightBounds() returns None).

Of course, I know I could work out the file format used to store the point cloud (for example PLY or glTF), parse it myself, and then pass the vertices to a GeomPrimitive with something like addVertex(). But isn't there a simpler loader? In Open3D, for example, it takes literally 3 lines of code:

```python
import open3d

cloud = open3d.io.read_point_cloud("pointcloud.ply")  # Read the point cloud
open3d.visualization.draw_geometries([cloud])  # Visualize the point cloud
```
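For the "parse it myself" route, here is a minimal standard-library sketch of reading the vertex element of an ASCII PLY file. The helper `read_ascii_ply` is hypothetical; it assumes `format ascii 1.0`, `vertex` as the first element, `x`/`y`/`z` properties, and optional `red`/`green`/`blue` properties with 0-255 values. Binary PLY files would need a different reader.

```python
import io
from typing import Iterable, List, Tuple

Point = Tuple[float, float, float]
Color = Tuple[int, int, int]


def read_ascii_ply(lines: Iterable[str]) -> Tuple[List[Point], List[Color]]:
    """Return (points, colors) from the 'vertex' element of an ASCII PLY file.

    Hypothetical helper for illustration: binary PLY is rejected, 'vertex'
    must be the first element, and colors are read only if red/green/blue exist.
    """
    it = iter(lines)
    if next(it).strip() != "ply":
        raise ValueError("not a PLY file")

    elements: List[str] = []      # element names in header order
    vertex_count = 0
    vertex_props: List[str] = []  # vertex property names in column order
    for line in it:
        tokens = line.split()
        if not tokens:
            continue
        if tokens[0] == "format" and tokens[1] != "ascii":
            raise ValueError("this sketch only reads ASCII PLY")
        elif tokens[0] == "element":
            elements.append(tokens[1])
            if tokens[1] == "vertex":
                vertex_count = int(tokens[2])
        elif tokens[0] == "property" and elements and elements[-1] == "vertex":
            vertex_props.append(tokens[-1])  # the last token is the property name
        elif tokens[0] == "end_header":
            break

    if not elements or elements[0] != "vertex":
        raise ValueError("expected 'vertex' as the first element")

    # Column indices of the position and (optional) color properties.
    xyz = [vertex_props.index(name) for name in ("x", "y", "z")]
    rgb = [vertex_props.index(name) for name in ("red", "green", "blue")
           if name in vertex_props]

    points: List[Point] = []
    colors: List[Color] = []
    for _ in range(vertex_count):
        values = next(it).split()
        x, y, z = (float(values[i]) for i in xyz)
        points.append((x, y, z))
        if len(rgb) == 3:
            r, g, b = (int(values[i]) for i in rgb)
            colors.append((r, g, b))
    return points, colors


sample = """ply
format ascii 1.0
comment made by hand
element vertex 2
property float x
property float y
property float z
property uchar red
property uchar green
property uchar blue
end_header
0 0 0 255 0 0
1.5 2 -3 0 128 255
"""
points, colors = read_ascii_ply(io.StringIO(sample))
print(points)  # [(0.0, 0.0, 0.0), (1.5, 2.0, -3.0)]
print(colors)  # [(255, 0, 0), (0, 128, 255)]
```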

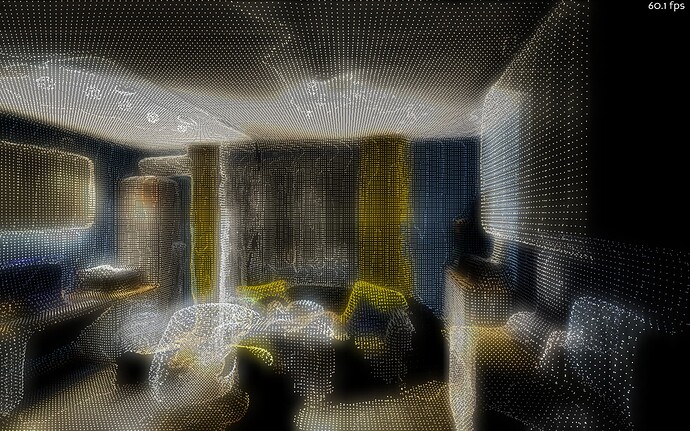

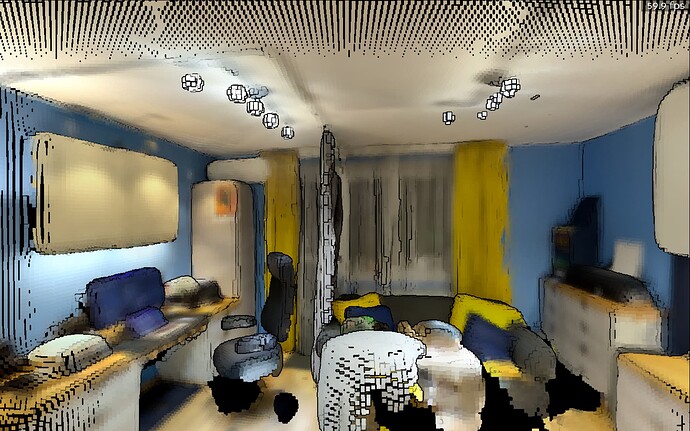

Suppose I solve that problem somehow. Then another question arises: can the loaded point cloud be used as the starting point for particles? Something like this example, but using my point cloud instead of a Particle Factory as the source of the particles that are emitted and then rendered. I know there is GeomParticleRenderer, but I suspect that is not what I need?
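On the particle question: Panda3D's built-in emitters (BoxEmitter, DiscEmitter, SphereVolumeEmitter and similar) do not appear to take an arbitrary list of points, so one workaround is to animate the cloud's points directly. The sketch below is plain Python and entirely hypothetical (`CloudParticles`, its parameters, and the drift/respawn behavior are illustrative choices, not a Panda3D API): each cloud point becomes a particle origin, and each particle respawns at its own point when its lifetime expires.

```python
import random
from dataclasses import dataclass, field
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class CloudParticles:
    """Hypothetical CPU-side particles that spawn at point-cloud positions.

    Each particle starts at one cloud point, moves with a small random
    velocity under gravity, and respawns at its own point when its age
    reaches `lifetime` (in seconds).
    """

    origins: Sequence[Vec3]
    lifetime: float = 2.0
    gravity: float = -9.81  # acceleration along +Z (Panda3D's up axis)
    seed: int = 0
    rng: random.Random = field(init=False)
    positions: List[List[float]] = field(init=False)
    velocities: List[List[float]] = field(init=False)
    ages: List[float] = field(init=False)

    def __post_init__(self) -> None:
        self.rng = random.Random(self.seed)
        self.positions = [list(p) for p in self.origins]
        self.velocities = [self._random_velocity() for _ in self.origins]
        # Stagger the ages so the particles do not all respawn in the same frame.
        self.ages = [self.rng.uniform(0.0, self.lifetime) for _ in self.origins]

    def _random_velocity(self) -> List[float]:
        # Small sideways drift plus an upward kick.
        return [
            self.rng.uniform(-0.1, 0.1),
            self.rng.uniform(-0.1, 0.1),
            self.rng.uniform(0.0, 0.5),
        ]

    def step(self, dt: float) -> None:
        """Advance every particle by dt seconds (velocity first, then position)."""
        for i, origin in enumerate(self.origins):
            self.ages[i] += dt
            if self.ages[i] >= self.lifetime:
                # Respawn at the original cloud point with a fresh velocity.
                self.positions[i] = list(origin)
                self.velocities[i] = self._random_velocity()
                self.ages[i] = 0.0
                continue
            vel = self.velocities[i]
            vel[2] += self.gravity * dt
            pos = self.positions[i]
            for k in range(3):
                pos[k] += vel[k] * dt
```

In a Panda3D app, a task could call `step(globalClock.getDt())` each frame and copy `positions` back into the cloud's "vertex" column with a `GeomVertexWriter`. For millions of points a Python loop like this is likely too slow, and moving the same update into a vertex shader would probably be the more realistic option.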

In case it helps, I generate the point clouds with the LiDAR scanner of an iPhone 12 Pro (a few examples). The largest cloud shown there has 1.2 million points, and Open3D displays it smoothly on my computer, but I would like to visualize larger clouds of several million points (Open3D can handle a 10-million-point cloud, although not perfectly smoothly). I would also like to handle per-point colors.

Regards

Mikołaj (Miklesz)