I’ve discovered a performance issue in my current project, and I’m hoping for some aid in determining what to do about it:

In my project, I have a few shaders that, in most cases, account for a significant proportion of the pixels rendered to the screen, I believe. Now, I want my project to include lighting, so I’ve implemented that in those shaders. Specifically, any node rendered via those shaders can be affected by up to 32 lights.

Aaaand… it looks like the code for this may well be more performance-intensive than I’d prefer. :/

I’ve already tried a few small optimisations within the code itself, but to little effect thus far, I fear.

So, a few thoughts occur to me:

- I could reduce the maximum number of lights

  - In fact, the previous maximum was 16. However, a recent change elsewhere prompted me to increase the average size of my rooms, meaning that more lights were called for in order to cover them…

- I could attempt to have a given node be affected only by those lights that are actually near it

  - After all, while a room may have many lights, in general only a few will actually affect a given point

  - However, this would mean finding a way to detect which lights are affecting which objects, potentially calling for distance-calculations or collision shenanigans

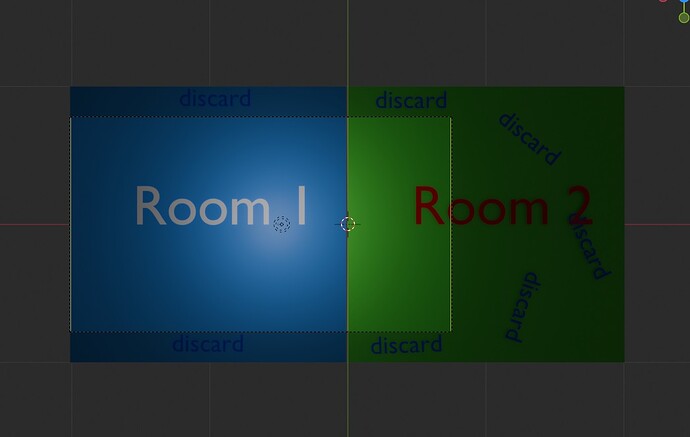

  - Further, it would presumably mean cutting up my room geometry more than I currently am, increasing the number of nodes in the scene and complicating level-building

  - It would also presumably incur more shader-states, as different nodes within a room would have different lights in their shader-inputs, and more changes in shader-states, as moving objects travelled into the range of different lights
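
To make the second option a bit more concrete, here is a rough sketch of what the distance-calculation part might look like on the CPU side. It is plain Python and not tied to any engine; `Light`, `lights_affecting`, the per-light `radius` cutoff and the bounding-sphere test are all names and assumptions made up for illustration, so treat it as one possible approach rather than a recommendation:

```python
import math
from dataclasses import dataclass


@dataclass
class Light:
    name: str
    pos: tuple     # (x, y, z) world-space position
    radius: float  # assumed cutoff: beyond this distance the light contributes nothing


def lights_affecting(node_centre, node_radius, lights, max_lights=8):
    """Return up to max_lights lights whose range overlaps the node's
    bounding sphere, nearest first."""
    candidates = []
    for light in lights:
        dist = math.dist(node_centre, light.pos)
        # Sphere-vs-sphere overlap: the light can reach some part of the node
        if dist <= light.radius + node_radius:
            candidates.append((dist, light))
    candidates.sort(key=lambda pair: pair[0])
    return [light for _, light in candidates[:max_lights]]


# Hypothetical room with three lights; the node sits near two of them
room_lights = [
    Light("a", (0, 0, 0), 5.0),
    Light("b", (20, 0, 0), 5.0),
    Light("c", (3, 0, 0), 5.0),
]
nearby = lights_affecting((1, 0, 0), 1.0, room_lights, max_lights=2)
print([light.name for light in nearby])  # prints ['a', 'c']
```

The result would then be what gets passed to the shader for that node. It only covers the "which lights?" question, though; the concerns about extra geometry splits and shader-state changes still stand.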

And that, then, is where I stand at the moment. Thoughts…?