It’s my pleasure.

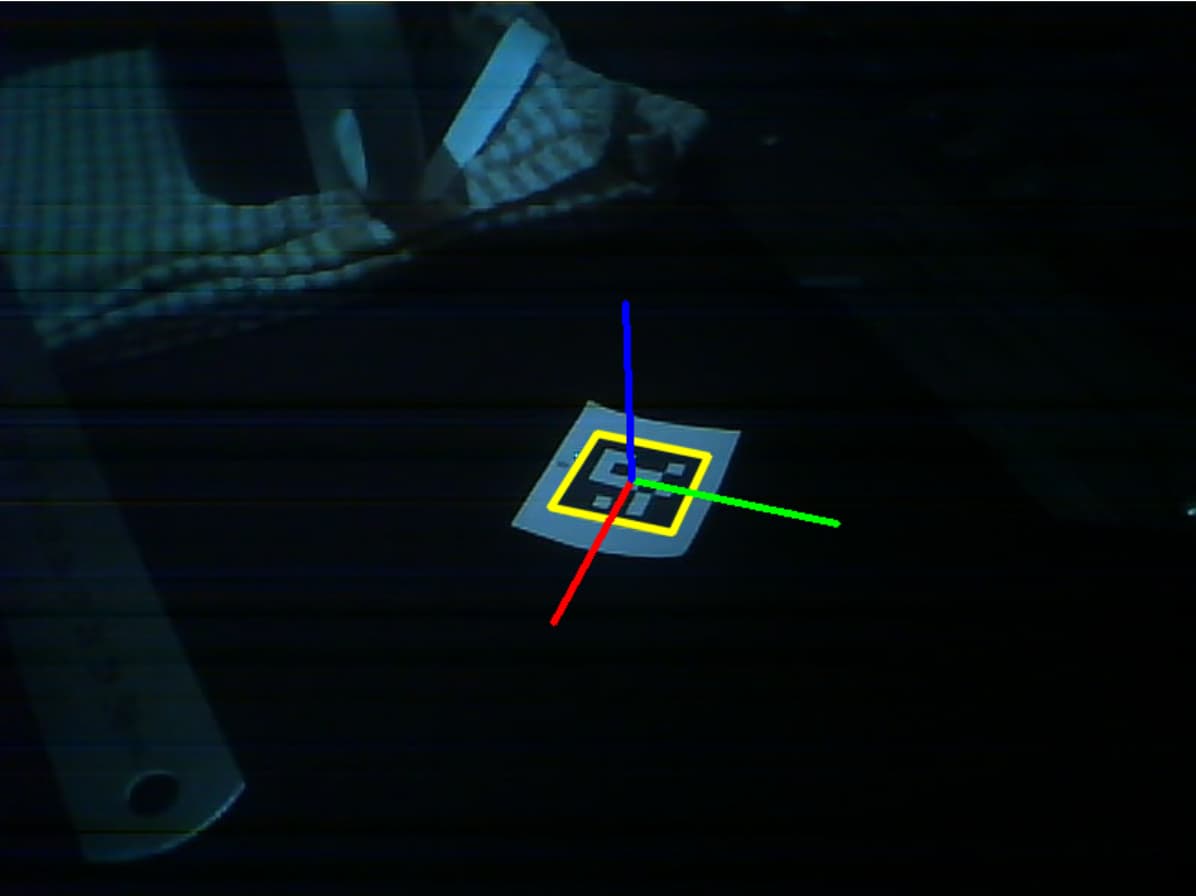

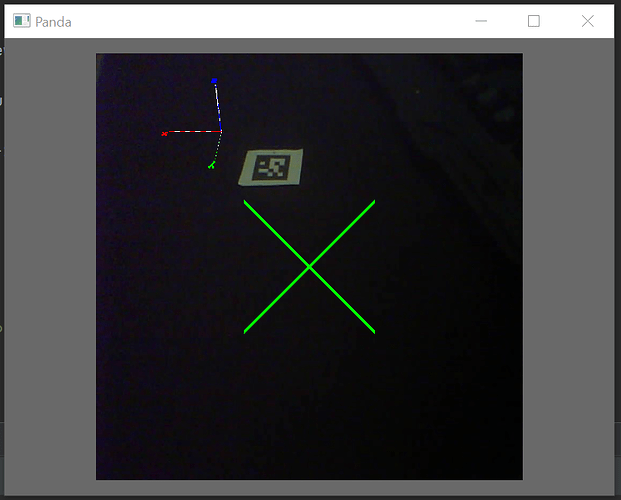

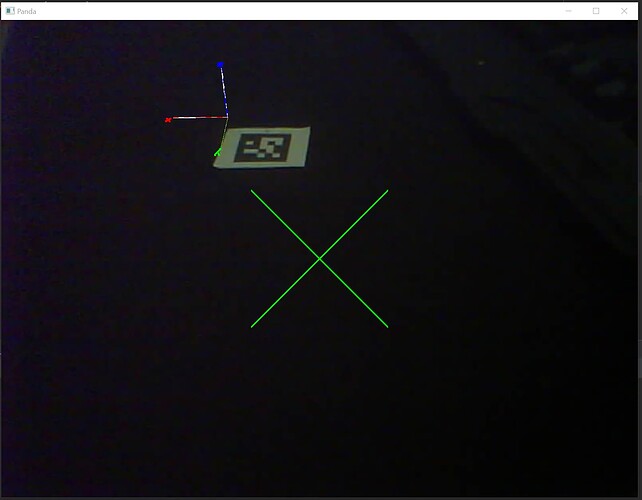

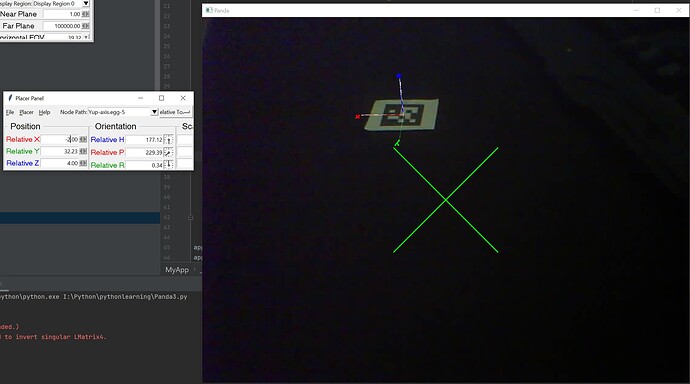

CardMaker by default places the origin of the card at the bottom-left (if I recall correctly)–hence the difference in placement.

However, this can be changed via a call to CardMaker’s “setFrame” methods. For that, see the API, starting here:

https://docs.panda3d.org/1.10/python/reference/panda3d.core.CardMaker#panda3d.core.CardMaker.setFrame
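
As a rough sketch of that, assuming that you want the origin at the centre of the card rather than at its corner: "setFrame" takes the left, right, bottom, and top edges of the card, in that order. The two helper functions below (and their names) are just my own illustration, not anything provided by Panda3D.

```python
def centered_frame(width, height):
    """Return (left, right, bottom, top) for a card centred on its origin."""
    return (-width / 2, width / 2, -height / 2, height / 2)


def make_centered_card(width, height):
    """Build a card whose origin is at its centre (hypothetical helper)."""
    # Imported here so that the frame calculation above works without Panda3D.
    from panda3d.core import CardMaker, NodePath

    card_maker = CardMaker("card")
    # setFrame expects the edges in the order: left, right, bottom, top.
    card_maker.setFrame(*centered_frame(width, height))
    return NodePath(card_maker.generate())
```

The returned NodePath could then be attached with something like "card.reparentTo(render)", as with any other node.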

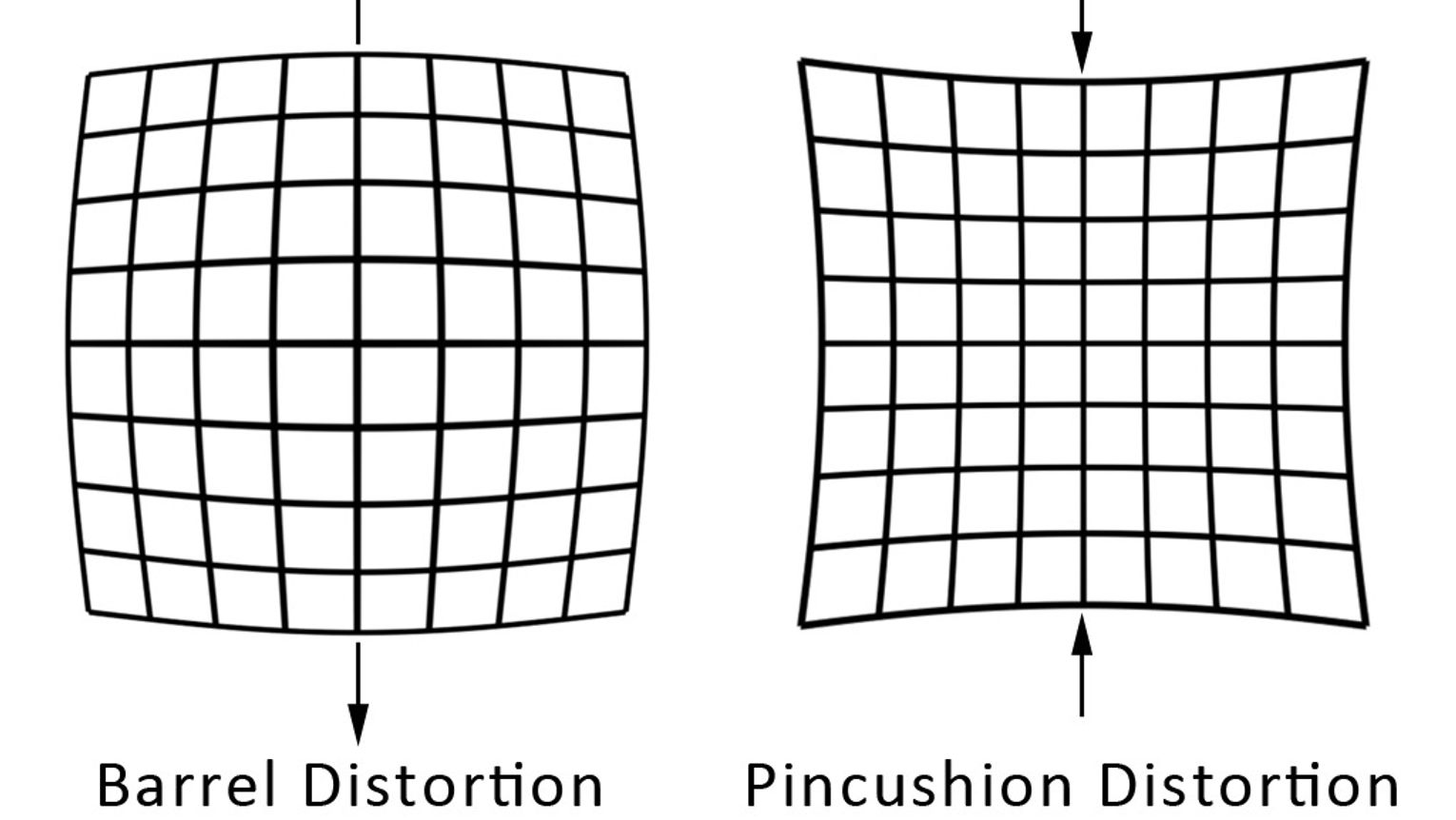

Indeed: you’ve set the aspect ratio of the window, but not of the card. That’s what I meant when I referred to scaling the quad (i.e. card) accordingly: by scaling the card non-uniformly–i.e. with different scaling factors for its width and height–we can alter the aspect ratio of the card.

Once again, scaling the card should help, I believe. The trick is to determine exactly how much to scale it…

If your window will never change aspect ratio, then you can perhaps just experiment with scaling factors until the card pretty much fits.

If, however, you do intend to allow the window to change aspect ratio, then things get a little more complicated. I’m rather tired as I type this, so I fear that I’ll leave that for another to attend to, for now at least.

(Well, one way might be to use some camera-related methods to find the world-space positions of the left, right, top, and bottom of the screen at a distance equal to the placement of your card, and to then calculate from those the scale to apply. But there might be a simpler way, so I’ll hold off on elaborating on that approach.)
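
For what it's worth, the arithmetic behind that idea might look something like the sketch below. It assumes a simple perspective camera looking straight at the card, and it takes the field-of-view angles as plain numbers instead of querying the camera; the function name is my own invention.

```python
import math


def visible_size_at_distance(hfov_degrees, vfov_degrees, distance):
    """Return the (width, height), in world units, of the region that a
    perspective camera can see at the given distance ahead of it."""
    # Half of the visible extent is distance * tan(half of the angle),
    # so the full extent is twice that.
    width = 2 * distance * math.tan(math.radians(hfov_degrees) / 2)
    height = 2 * distance * math.tan(math.radians(vfov_degrees) / 2)
    return width, height


# Example: a card placed 2 units ahead of the camera.
width, height = visible_size_at_distance(60, 45, 2)
print(width, height)
```

In Panda3D I believe that the two angles can be had, in degrees, from "base.camLens.getFov()". For a card that is one unit wide and one unit tall, the results could then be applied with something like "card.setScale(width, 1, height)", since the card's width lies along the x-axis and its height along the z-axis.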

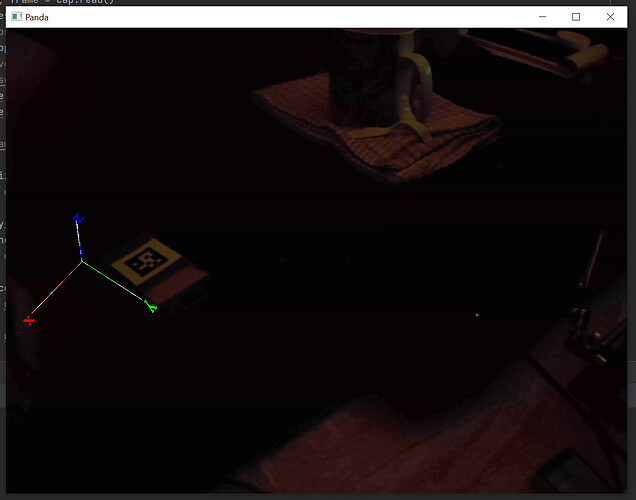

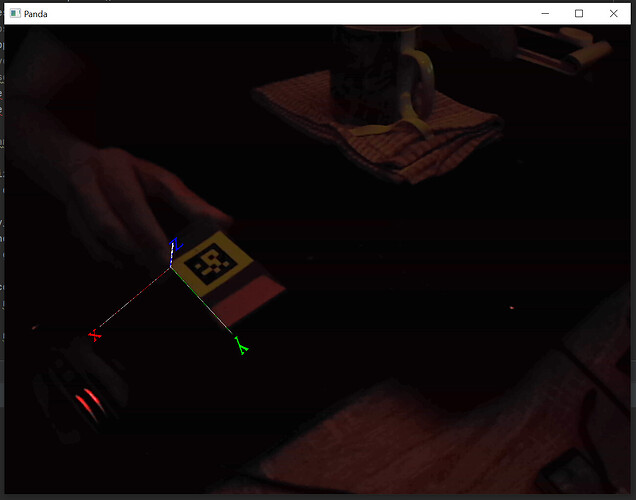

By default, I believe, objects are located at a world-position of (0, 0, 0)–and this includes the camera. As a result, the card and the camera are initially at the same location, and the camera “can’t see” the card.

Now, the camera by default points down the y-axis (the y-value being the second number in “setPos”), and thus placing the card a little distance ahead on that axis places it “in front of” the camera, allowing the camera to “see” it.

In short, this is, I believe, because you’re placing the object far to the right, well beyond the view of the camera.

More specifically, when you set the position of an object that’s attached to render (or indeed, to render2d or aspect2d), its new position is given in “world units”, not in pixels.

Quite how much of the screen a “world unit” covers depends on a few specifics, including: which of render, render2d, or aspect2d is being used; if applicable, the setup of your camera; and, if applicable, how far away from the camera the object is.

But for a simple analogy, think of it this way:

In your code, you placed the object at a position of 2 world-units ahead of the camera on the y-axis (by setting the second number in “setPos” to “2”). So, by way of comparison, consider a scenario in which you’ve placed some object 2 metres (or feet, if you prefer) in front of yourself.

Now, imagine that, without moving or turning yourself, you then alter the object’s position to be, not just 2 metres (or feet) in front of you, but also 800 metres (or feet) to your right (equivalent in this scenario to placing your object at a position of 800 on the x-axis, via a call to “setX(800)”). In most cases this will, I daresay, result in the object being so far to your right that it will no longer be in view!

Now, there is some nuance to this that I’m glossing over, but as a starting point, the above analogy might, I hope, be illustrative of why the object disappears.
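
To put rough numbers to the analogy, here is a small sketch that checks whether a point lies within the camera's horizontal view. It assumes a camera at the origin looking down the y-axis, and a horizontal field of view of 60 degrees, which is a figure that I've picked purely for illustration; the function name is likewise my own.

```python
import math


def is_in_horizontal_view(x, y, hfov_degrees):
    """Return True if a point at (x, y) is within the horizontal view of a
    camera at the origin that looks down the positive y-axis."""
    if y <= 0:
        # The point is level with, or behind, the camera.
        return False
    # At a distance of y ahead, the view extends this far to either side.
    half_width = y * math.tan(math.radians(hfov_degrees) / 2)
    return abs(x) <= half_width


print(is_in_horizontal_view(0, 2, 60))    # directly ahead of the camera
print(is_in_horizontal_view(800, 2, 60))  # 800 units to the right
```

At 2 units ahead, a 60-degree view only reaches a little over 1 unit to either side, so an x-position of 800 falls far outside of it.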