Hi y’all,

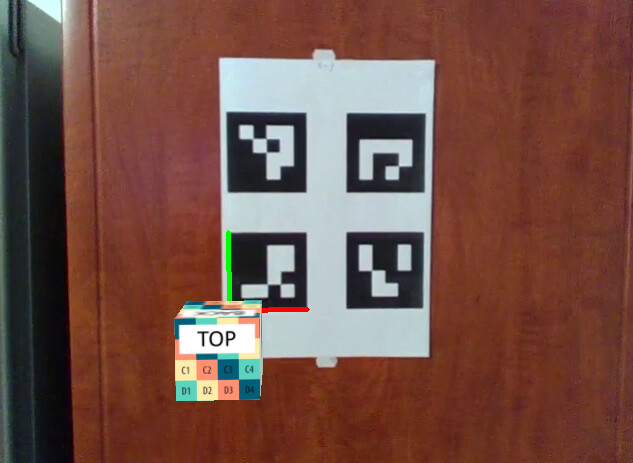

I’m working on an augmented reality Python app that uses OpenCV’s ArUco module to detect markers and estimate the pose of the user’s camera.

Pose estimation works fine, but I’m having trouble correctly converting the pose data from ArUco coordinate space to Panda3D coordinate space.

Here is a video where you can see the issue (rotation about a single axis looks good, but combining rotations about multiple axes gives an incorrect result):

I’ve made a GitHub repository with a working Python app (camera video included; just run ‘main.py’ if interested):

Here is an excerpt of the code where the coordinate conversion happens (the complete code is on GitHub, in ‘poseEstimation.py’):

```python
# Brief description:
# ArUco pose estimation gives us the relative position of the marker and the
# camera: rotation vector 'rvecs' and translation vector 'tvecs'.
# They represent the transform from the marker to the camera.
#
# I want to convert the pose data to get the position of the camera in panda
# world (for now we assume the marker is at position 'x=0, y=0, z=0' in panda
# world, to make things simpler), so I can apply the pose to Panda camera
# (to mimic the pose of the real world user camera)

# Pose estimation (ArUco board):
retval, self.rvecs, self.tvecs = aruco.estimatePoseBoard(
    corners, ids, self.board0, self.cameraMatrix, self.distCoeffs, None, None
)

# Convert rotation vector to a rotation matrix:
# cv.Rodrigues docs: https://docs.opencv.org/master/d9/d0c/group__calib3d.html#ga61585db663d9da06b68e70cfbf6a1eac
mat, jacobian = cv.Rodrigues(self.rvecs)

# Transpose the matrix (following approach found at stackoverflow):
# cv.transpose docs: https://docs.opencv.org/master/d2/de8/group__core__array.html#ga46630ed6c0ea6254a35f447289bd7404
mat = cv.transpose(mat)

# Invert the matrix (following approach found at stackoverflow, supposed to
# convert pose data from marker coordinate space to camera coordinate space):
# cv.invert docs: https://docs.opencv.org/master/d2/de8/group__core__array.html#gad278044679d4ecf20f7622cc151aaaa2
retval, mat = cv.invert(mat)

# Create panda matrix so we can apply the data to a node via '.setMat()':
mat3 = Mat3()
mat3.set(
    mat[0][0], mat[0][1], mat[0][2],
    mat[1][0], mat[1][1], mat[1][2],
    mat[2][0], mat[2][1], mat[2][2],
)
mat4 = Mat4(mat3)
```
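One thing worth checking about the transpose-then-invert step: for a pure rotation matrix the transpose is already the inverse, so applying both should return the original matrix unchanged. The sketch below verifies this with NumPy only. Here `rodrigues` is a hypothetical stand-in for `cv.Rodrigues`, `np.linalg.inv(R.T)` stands in for `cv.invert(cv.transpose(mat))`, and the rotation vector is an arbitrary example value, not data from the app.

```python
import numpy as np


def rodrigues(rvec):
    """Hypothetical NumPy stand-in for cv.Rodrigues (axis-angle -> 3x3 matrix)."""
    rvec = np.asarray(rvec, dtype=float).reshape(3)
    theta = np.linalg.norm(rvec)  # rotation angle in radians
    if theta < 1e-12:
        return np.eye(3)
    kx, ky, kz = rvec / theta  # unit rotation axis
    # Skew-symmetric cross-product matrix of the axis.
    K = np.array([[0.0, -kz, ky],
                  [kz, 0.0, -kx],
                  [-ky, kx, 0.0]])
    # Rodrigues' rotation formula.
    return np.eye(3) + np.sin(theta) * K + (1.0 - np.cos(theta)) * (K @ K)


R = rodrigues([0.3, -0.5, 0.8])    # arbitrary example rotation
R_roundtrip = np.linalg.inv(R.T)   # transpose, then invert

is_orthonormal = np.allclose(R @ R.T, np.eye(3))
roundtrip_is_noop = np.allclose(R_roundtrip, R)
print(is_orthonormal, roundtrip_is_noop)
```

If both checks hold, only one of the two operations is needed to invert the rotation; doing both leaves it as it came out of the pose estimation.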

```python
# From here on, pretty much all the values are assigned by trial-and-error,
# to see what works and what doesn't.

# ROTATION:
# Apply pose estimation rotation matrix to a dummy node:
panda.matrixNode.setMat(mat4)

# Apply the matrixNode HPR to another dummy node with some trial-and-error
# modifications:
# H=zRot, P=xRot, R=yRot
panda.transNode.setH(-panda.matrixNode.getR())      # zRot
panda.transNode.setP(panda.matrixNode.getP() + 180)  # xRot
panda.transNode.setR(-panda.matrixNode.getH())      # yRot

# TRANSLATION:
# Place dummy node to a marker position in panda world coordinates
# (marker is at 0,0,0)
panda.transNode.setPos(0, 0, 0)

# Use translation data from pose estimation:
xTrans = self.tvecs[0][0]
yTrans = self.tvecs[1][0]
zTrans = self.tvecs[2][0]

# Apply translation with negative values, seems to work (trial-and-error):
panda.transNode.setPos(panda.transNode, -xTrans, -zTrans, yTrans)

# Assign values to be applied to panda camera position:
camX = panda.transNode.getX()
camY = panda.transNode.getY()
camZ = panda.transNode.getZ()
camH = panda.transNode.getH()
camP = panda.transNode.getP()
camR = panda.transNode.getR()
```
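For reference, if `rvecs`/`tvecs` map marker coordinates into camera coordinates as X_cam = R · X_marker + t, then the camera pose expressed in the marker frame is usually written as rotation Rᵀ and position −Rᵀ · t. Below is a minimal NumPy sketch under that assumption. `camera_pose_in_marker_frame` is a hypothetical helper (not part of OpenCV or Panda3D), the input values are made up, and remapping the marker axes onto Panda's Z-up world is a separate step that is not covered here.

```python
import numpy as np


def camera_pose_in_marker_frame(R, tvec):
    """Invert a marker-to-camera transform (hypothetical helper).

    Assumes X_cam = R @ X_marker + t. Returns the camera orientation and
    camera position, both expressed in marker coordinates.
    """
    R = np.asarray(R, dtype=float).reshape(3, 3)
    t = np.asarray(tvec, dtype=float).reshape(3)
    R_cam = R.T            # inverse of a rotation matrix is its transpose
    cam_pos = -R.T @ t     # the point that maps to the camera origin
    return R_cam, cam_pos


# Made-up example: 90 degree rotation about z, plus a translation.
R = np.array([[0.0, -1.0, 0.0],
              [1.0, 0.0, 0.0],
              [0.0, 0.0, 1.0]])
tvec = np.array([[0.5], [0.0], [2.0]])  # column vector, like self.tvecs

R_cam, cam_pos = camera_pose_in_marker_frame(R, tvec)

# Sanity check: the camera position must map to the camera-frame origin.
residual = R @ cam_pos + tvec.reshape(3)
print(cam_pos, residual)
```

Applying the rotation and the translation together as one inverse transform avoids composing separately adjusted H, P and R values, which is where combined-axis rotations tend to go wrong.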

I’ve seen similar topics on this forum, but none of them fixed my issue.

Any help is much appreciated.

Resources:

OpenCV ArUco documentation

Description of the openCV ArUco module (section ‘Pose Estimation’)

Thank you so much for taking the time to look into the issue and helping me out
