I am currently trying to build a multipass renderer that includes tiled light culling. The steps should go as follows:

Raw depth render buffer pass (no lighting has been done yet)

↓

Feed depth buffer into compute shader and calculate tiled lights into a 16 by 16 texture

↓

Feed it into my render pipeline to efficiently cull the lights.

So far, the stages don’t seem to execute in this order, and I can’t figure out why. Every stage appears to run in parallel, and I don’t know how to make the passes run one at a time.
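
The intended ordering can be written down as a small model. As far as I understand, Panda3D renders each GraphicsOutput in ascending order of its sort value, so the three stages would each need their own increasing slot. The sketch below is plain Python and does not call Panda3D; the `RenderPass` class, the pass names, and the sort values are all made up for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RenderPass:
    name: str
    sort: int  # lower values are meant to run earlier in the frame


def frame_order(passes):
    """Return the pass names in the order they should run, lowest sort first."""
    return [p.name for p in sorted(passes, key=lambda p: p.sort)]


# Hypothetical sort values: each stage gets its own slot.
PASSES = [
    RenderPass("main_lit_render", 0),
    RenderPass("tiled_light_compute", -10),
    RenderPass("depth_prepass", -20),
]

if __name__ == "__main__":
    print(frame_order(PASSES))
```

If several stages share one sort value, their relative order may not be guaranteed, which is one reason to keep the values distinct.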

This is some of my code so far. Note that I have built some custom classes, such as the one for the frame buffer:

    winx = 16
    winy = 16

    color_tex = p3d.Texture()
    color_tex.setup_2d_texture(
        winx, winy,
        p3d.Texture.T_unsigned_byte,
        p3d.Texture.F_rgba8
    )
    color_tex.setMinfilter(p3d.SamplerState.FT_nearest)
    color_tex.setMagfilter(p3d.SamplerState.FT_nearest)

    depth_tex = p3d.Texture()
    depth_tex.setup_2d_texture(
        winx, winy,
        p3d.Texture.T_unsigned_byte,
        p3d.Texture.F_depth_component
    )
    depth_tex.setMinfilter(p3d.SamplerState.FT_nearest)
    depth_tex.setMagfilter(p3d.SamplerState.FT_nearest)
    depth_tex.setWrapU(p3d.SamplerState.WM_clamp)
    depth_tex.setWrapV(p3d.SamplerState.WM_clamp)

    tex = p3d.Texture("compute_tex")
    tex.setup_2d_texture(
        winx, winy,
        p3d.Texture.T_float,
        p3d.Texture.F_rgba32
    )
    tex.setMinfilter(p3d.SamplerState.FT_nearest)
    tex.setMagfilter(p3d.SamplerState.FT_nearest)

    manager = GraphicsBuffer(application.base.win, application.base.cam)
    buffer = manager.createBuffer('defered', size = (winx, winy), colortex = color_tex, depthtex = depth_tex, depthbits = True, sort = -20)
    buffer.getDisplayRegion(0).setSort(-20)
    buffer.setSort(-20)
    buffer.setChildSort(-20)

    empty = NodePath("new")
    empty.setLightOff(True)
    empty.setBin('fixed', -20)
    state = empty.getState()
    GraphicsBufferManager.cameras[0].node().setInitialState(state)

    #GraphicsBufferManager.cameras[0].setShaderOff(True)
    #GraphicsBufferManager.cameras[0].node().setTagStateKey("NOLIGHT")
    #GraphicsBufferManager.cameras[0].node().setTagState("NOLIGHT", empty.getState())
    #application.base.cam.node().setCameraMask(BitMask32.bit(0))
    #GraphicsBufferManager.cameras[0].node().setCameraMask(BitMask32.bit(1))

    ground.tags = ['cheese']
    ground.tags.append('balls')
    print('GROUND TAGS', ground.tags, ground.getTagKeys())
    print("Camera:", GraphicsBufferManager.cameras[0].node())
    print('light shadow sort:', light._light.getShadowBufferSort())

    ## COMPUTE SHADER STUFF

    # Debug quads that show the input colour texture and the compute output.
    in_quad = Entity(model = Quad(), parent = camera.ui, texture = color_tex, scale = (0.2, 0.12), position = (-0.6, -0.4))
    out_quad = Entity(model = Quad(), parent = camera.ui, texture = tex, scale = (0.2, 0.12), position = (0.2, -0.4))

    compute = ComputeShaderNode('compute.glsl')
    compute.np.setBin('fixed', -20)
    #application.base.win.getGsg().useShader(compute.compute_shader)

    print(color_tex.getFormat(), winx // 16, winy // 16)
    print((color_tex.getXSize(), color_tex.getYSize()), (tex.getXSize(), tex.getYSize()))
    print(depth_tex.getFormat())

    compute.np.setShaderInput("tiled_texture", tex, False)
    compute.np.setShaderInput("color_texture", color_tex)
    compute.np.setShaderInput("depth_texture", depth_tex)

    render.setShaderInput('lit_texture', tex)
    render.setShaderInput('win_size2', Vec2(window.screen_resolution[0], window.screen_resolution[1]))

    from panda3d.core import Mat4, LMatrix4f

    # 8x8 threads per work group, so a 16x16 texture needs 2x2 groups.
    compute.dispatch(winx // 8, winy // 8, 1)

    def update_compute(task):
        # Refresh the inverse projection matrix every frame.
        lens = application.base.cam.node().getLens()
        proj_mat = lens.getProjectionMat()
        inv_proj_mat = LMatrix4f(proj_mat)
        inv_proj_mat.invertInPlace()
        compute.np.setShaderInput('biased_inverse_projection_matrix', inv_proj_mat)

        #sattr = compute.get_attrib(ShaderAttrib)
        #buffer.getEngine().dispatch_compute((winx // 8, winy // 8, 1),sattr,buffer.get_gsg())

        # The task must return task.cont to keep running on later frames.
        return task.cont

    application.base.taskMgr.add(update_compute, sort = -20)
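
The dispatch call above divides the texture size by the work group size with floor division, which only covers the whole texture when the size is a multiple of 8. A minimal sketch of the rounding-up version follows; the helper names are hypothetical and nothing here is part of Panda3D.

```python
def group_count(size, local_size):
    """Smallest number of work groups of `local_size` threads that covers `size`."""
    return -(-size // local_size)  # ceiling division using integers only


def dispatch_size(width, height, local_x=8, local_y=8):
    """Work group counts for a 2D dispatch over a width x height texture."""
    return (group_count(width, local_x), group_count(height, local_y), 1)


if __name__ == "__main__":
    print(dispatch_size(16, 16))
    print(dispatch_size(20, 16))
```

When the counts are rounded up, the shader would also need a bounds check so that the extra invocations do not write outside the texture.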

Compute Shader Code:

    #version 430

    layout (local_size_x = 8, local_size_y = 8) in;

    // rgba32f to match the F_rgba32 float texture that is bound from Python.
    layout(rgba32f, binding = 0) writeonly uniform image2D tiled_texture;

    uniform sampler2D color_texture;
    uniform sampler2D depth_texture;
    uniform mat4 biased_inverse_projection_matrix;
    uniform float camera_far;
    uniform float camera_near;
    uniform vec3 camera_world_position;

    uniform struct p3d_LightSourceParameters {
        vec4 position;
        vec4 color;
        vec3 attenuation;
        vec3 spotDirection;
        float spotExponent;
        float spotCosCutoff;
        sampler2DShadow shadowMap;
        mat4 shadowViewMatrix;
    } p3d_LightSource[10];

    // Shadow-map lookup with a small constant depth bias.
    float shadow_caster_contrib(sampler2DShadow shadowmap, vec4 shadowpos) {
        vec3 light_space_coords = shadowpos.xyz / shadowpos.w;
        light_space_coords.z -= 0.01;
        float shadow = texture(shadowmap, light_space_coords);
        return shadow;
    }

    // Expects NDC coordinates and NDC depth, both in [-1, 1].
    vec4 calculate_view_position(vec2 texture_coordinate, float depth_from_depth_buffer)
    {
        vec4 view_position = vec4(biased_inverse_projection_matrix[0][0] * texture_coordinate.x + biased_inverse_projection_matrix[3][0],
                                  biased_inverse_projection_matrix[1][1] * texture_coordinate.y + biased_inverse_projection_matrix[3][1],
                                  -1.0,
                                  biased_inverse_projection_matrix[2][3] * depth_from_depth_buffer + biased_inverse_projection_matrix[3][3]);
        return view_position;
    }

    void main() {
        ivec2 pixel = ivec2(gl_GlobalInvocationID.xy);
        ivec2 texSize = textureSize(depth_texture, 0);
        vec2 uv = (vec2(pixel) + 0.5) / vec2(texSize);

        vec4 color = texture(color_texture, uv);
        vec4 depth = texture(depth_texture, uv);

        // Depth buffer values are in [0, 1]; remap to NDC [-1, 1] before linearizing.
        float ndc_depth = depth.r * 2.0 - 1.0;
        float depth_distance = (2.0 * camera_near * camera_far) / (camera_far + camera_near - ndc_depth * (camera_far - camera_near));

        //imageStore(tiled_texture, pixel, vec4(0.0, 0.0, 0.0, 1.0));

        vec4 view_position = calculate_view_position(uv * 2.0 - 1.0, ndc_depth);
        vec3 v_view_position = view_position.xyz / view_position.w;
        vec3 v = normalize(-v_view_position);

        for (int i = 0; i < p3d_LightSource.length(); ++i) {
            vec3 lightcol = p3d_LightSource[i].color.rgb;
            vec3 light_pos = p3d_LightSource[i].position.xyz - v_view_position * p3d_LightSource[i].position.w;
            vec3 l = normalize(light_pos);
            float spotcos = dot(normalize(p3d_LightSource[i].spotDirection), -l);
            vec3 h = normalize(l + v);
            float dist = length(light_pos);

            vec3 att_const = p3d_LightSource[i].attenuation;
            float attenuation_factor = 1.0 / max(att_const.x + att_const.y * dist + att_const.z * dist * dist, 1e-6);

            float spotcutoff = p3d_LightSource[i].spotCosCutoff;
            float spotexponent = p3d_LightSource[i].spotExponent;
            float shadowSpot = 0.0;
            float spot = smoothstep(spotcutoff, 1.0, spotcos);
            float powSpot = pow(spot, max(spotexponent, 0.01));
            float fallback = mix(1.0, smoothstep(spotcutoff, spotcutoff, spotcos), step(0.0, spotcutoff));
            shadowSpot = mix(fallback, powSpot, step(0.0, spotexponent));

            vec4 v_shadow_pos = p3d_LightSource[i].shadowViewMatrix * view_position;
            float shadow_caster = shadow_caster_contrib(p3d_LightSource[i].shadowMap, v_shadow_pos);
            float shadow = shadowSpot * shadow_caster * attenuation_factor;

            imageStore(tiled_texture, pixel, vec4(shadow, shadow, shadow, 1.0));

            // Only the first light is processed while testing.
            break;
        }

        memoryBarrier();
        barrier();
    }

Note that GraphicsBuffer is just a graphics buffer creator, and GraphicsBufferManager just stores the cameras and buffer textures.

In short, the GLSL code reconstructs a view-space position from the depth buffer, which is then used to calculate the lights.
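
The reconstruction can be checked on the CPU with a round trip. The sketch below assumes a standard OpenGL-style symmetric perspective projection with depth stored in [0, 1]; Panda3D's own lens matrix may also include a coordinate-system conversion, which is ignored here. All function names are hypothetical, and the code is plain Python rather than the shader itself.

```python
import math


def perspective_terms(fov_y_deg, aspect, near, far):
    """Non-zero terms of an OpenGL-style perspective matrix (assumed convention)."""
    f = 1.0 / math.tan(math.radians(fov_y_deg) / 2.0)
    return {
        "sx": f / aspect,                         # x scale
        "sy": f,                                  # y scale
        "c": (far + near) / (near - far),         # z scale
        "d": (2.0 * far * near) / (near - far),   # z offset
    }


def project_to_screen(p, view_pos):
    """View-space point (camera looks down -z) to (uv, depth), both in [0, 1]."""
    x, y, z = view_pos
    w = -z  # clip-space w for this projection
    ndc = (p["sx"] * x / w, p["sy"] * y / w, (p["c"] * z + p["d"]) / w)
    uv = (ndc[0] * 0.5 + 0.5, ndc[1] * 0.5 + 0.5)
    return uv, ndc[2] * 0.5 + 0.5


def reconstruct_view_position(p, uv, depth):
    """Invert project_to_screen: (uv, depth) in [0, 1] back to view space."""
    x_ndc = uv[0] * 2.0 - 1.0
    y_ndc = uv[1] * 2.0 - 1.0
    z_ndc = depth * 2.0 - 1.0
    z_view = -p["d"] / (z_ndc + p["c"])
    return (x_ndc * -z_view / p["sx"], y_ndc * -z_view / p["sy"], z_view)


def linearize_depth(depth, near, far):
    """Depth-buffer value in [0, 1] to a positive distance along the view axis."""
    z_ndc = depth * 2.0 - 1.0
    return (2.0 * near * far) / (far + near - z_ndc * (far - near))


if __name__ == "__main__":
    terms = perspective_terms(60.0, 16.0 / 9.0, 0.5, 100.0)
    uv, depth = project_to_screen(terms, (1.0, -2.0, -10.0))
    print(reconstruct_view_position(terms, uv, depth))
    print(linearize_depth(depth, 0.5, 100.0))
```

Note that both helpers remap the stored depth from [0, 1] to [-1, 1] before using it.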

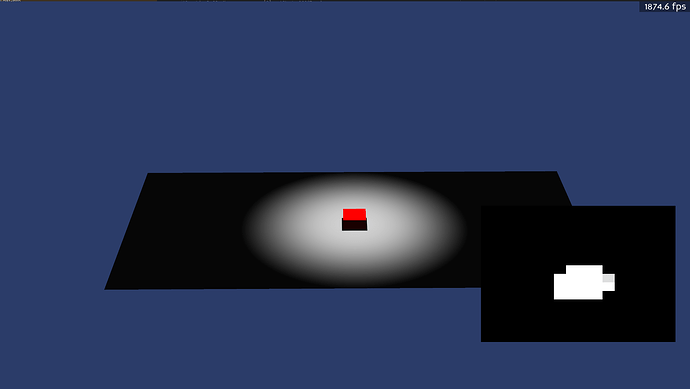

Currently I’m just testing one light and writing it to a 16 by 16 texture. The texture then gets fed into the render pipeline, which checks whether a light intersects that tile / fragment.
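
The per-fragment lookup described above can be sketched on the CPU. This assumes the tile texture is a 16 by 16 grid covering the whole window and that a non-zero value means the light reaches the tile; the function names and the nested-list stand-in for the texture are hypothetical.

```python
TILES_X = 16
TILES_Y = 16


def tile_for_fragment(frag_x, frag_y, win_width, win_height):
    """Map a fragment's window coordinate to the (column, row) of its tile."""
    u = frag_x / win_width
    v = frag_y / win_height
    # Clamp so a coordinate on the far edge still lands in the last tile.
    tile_x = min(int(u * TILES_X), TILES_X - 1)
    tile_y = min(int(v * TILES_Y), TILES_Y - 1)
    return tile_x, tile_y


def light_reaches_fragment(tile_texture, frag_x, frag_y, win_width, win_height):
    """True if the tile under the fragment holds a non-zero light contribution."""
    tile_x, tile_y = tile_for_fragment(frag_x, frag_y, win_width, win_height)
    return tile_texture[tile_y][tile_x] > 0.0


if __name__ == "__main__":
    # Stand-in for the 16 by 16 compute output: only tile (8, 8) is lit.
    tiles = [[0.0] * TILES_X for _ in range(TILES_Y)]
    tiles[8][8] = 1.0
    print(light_reaches_fragment(tiles, 960.5, 540.5, 1920, 1080))
    print(light_reaches_fragment(tiles, 0.5, 0.5, 1920, 1080))
```

Fragments whose tile holds zero can skip the light entirely, which is the point of the culling step.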

If you need more information or code to figure out the issue, let me know. I just think this is already too much text to read in one message.