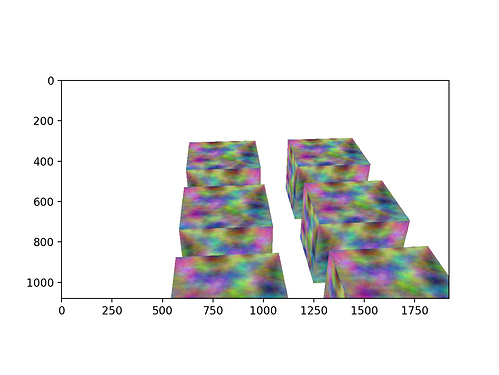

Okay, this was very helpful. I think I’ve almost got it (see the image below).

My updated fragment shader and script are below. I just have a couple more questions:

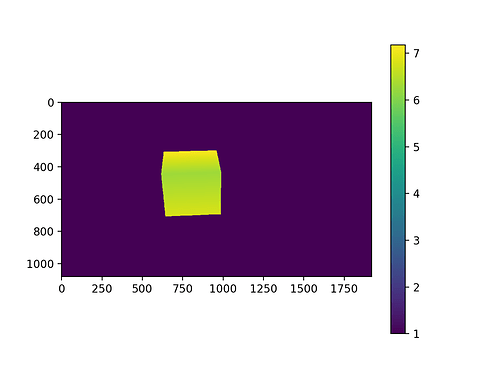

- In the fragment shader, is it the right approach to simply set the green and blue channels to 0?

- I need some way to distinguish true depth hits in the simulated depth camera from pixels where there is simply no object in range:

a. One approach would be to add a 1-bit alpha channel that acts as a hit mask. Is this possible?

b. The other approach is to return a predetermined maximum depth value whenever no object is in view. I tried to do this by setting a clear color on the texture and then clearing it, but that did not work: otherwise the background in the depth map would read 20 instead of 1. Since I want multiple depth cameras with different maximum depth values, I can't just change the global default clear color. Is there any way to achieve this?
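A minimal sketch of how option b could be consumed downstream, assuming each camera's buffer ends up cleared to that camera's own maximum depth. One untested possibility is calling `set_clear_color` on the offscreen buffer itself (`GraphicsOutput` inherits it from `DrawableRegion`) rather than on the texture or via the global `background-color`. The helper `split_hits`, the camera names and the `max_depths` values below are hypothetical, and the frames are synthetic stand-ins for `render_image()` output.

```python
import numpy as np


def split_hits(frame: np.ndarray, max_depth: float, tol: float = 1e-6):
    """Split a single-channel float depth frame into (depth, hit_mask).

    Assumes pixels with no geometry in view were left at the clear value
    `max_depth`; values within `tol` of it, or beyond it, count as misses.
    """
    hit_mask = frame < max_depth - tol
    # Replace misses with NaN so they are easy to ignore downstream.
    depth = np.where(hit_mask, frame, np.nan)
    return depth, hit_mask


# Hypothetical per-camera maximum ranges.
max_depths = {"front": 20.0, "side": 8.0}

# Synthetic frames standing in for render_image() output.
front = np.array([[3.5, 20.0],
                  [7.2, 20.0]], dtype=np.float32)
side = np.array([[3.5, 8.0]], dtype=np.float32)

depth, hits = split_hits(front, max_depths["front"])
_, side_hits = split_hits(side, max_depths["side"])
print(hits.tolist(), side_hits.tolist())
```

Because the threshold is passed per call, each camera can keep its own range; whether the clear value reaches a float render target unchanged is worth verifying on the actual buffer.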

Depth Map

Fragment Shader

```glsl
#version 150

in float distanceToCamera;

out vec4 fragColor;

void main() {
    fragColor = vec4(distanceToCamera, 0, 0, 1);
}
```

Code

```python
import numpy as np
import matplotlib.pyplot as plt

from direct.showbase.ShowBase import ShowBase
from panda3d.core import FrameBufferProperties, WindowProperties
from panda3d.core import GraphicsOutput, GraphicsPipe
from panda3d.core import Texture, PerspectiveLens, Shader
from panda3d.core import ConfigVariableString
from panda3d.core import LVecBase4

ConfigVariableString('background-color', '1.0 1.0 1.0 0.0')  # sets background to white


class SceneSimulator(ShowBase):
    def __init__(self):
        ShowBase.__init__(self)

        # Set up texture and offscreen graphics buffer:
        # a single 32-bit float (red) channel per pixel.
        window_props = WindowProperties.size(1920, 1080)
        frame_buffer_props = FrameBufferProperties()
        frame_buffer_props.set_float_color(True)
        frame_buffer_props.set_rgba_bits(32, 0, 0, 0)

        buffer = self.graphicsEngine.make_output(
            self.pipe,
            'Buffer',
            -2,  # sort order: render before the main window
            frame_buffer_props,
            window_props,
            GraphicsPipe.BFRefuseWindow,  # don't open a window
            self.win.getGsg(),
            self.win,
        )

        # Attempt at a per-camera "no hit" value (see question b above).
        texture = Texture()
        texture.set_clear_color(LVecBase4(20, 20, 20, 0))
        texture.clear_image()
        # Copy each rendered frame into the texture's RAM image.
        buffer.add_render_texture(texture, GraphicsOutput.RTMCopyRam)
        self.buffer = buffer

        # Place a box of side side_length centred on (x, y).
        x, y, side_length = 0, 0, 1
        box = self.loader.loadModel("models/box")
        box.reparentTo(self.render)
        box.setScale(side_length)
        box.setPos(x - side_length / 2, y - side_length / 2, 0)

        # Set up the depth camera, rendering into the offscreen buffer.
        lens = PerspectiveLens()
        lens.set_film_size(1920, 1080)
        lens.set_fov(45, 30)
        pos = (0, 6, 4)
        camera = self.make_camera(buffer, lens=lens, camName='Camera')
        camera.reparent_to(self.render)
        camera.set_pos(*pos)
        camera.look_at(box)
        self.camera = camera

        # Load shaders.
        vert_path = '/Users/michael/mit/sli/scene/scene/glsl_simple.vert'
        frag_path = '/Users/michael/mit/sli/scene/scene/glsl_simple.frag'
        custom_shader = Shader.load(Shader.SL_GLSL, vertex=vert_path, fragment=frag_path)
        self.render.set_shader(custom_shader)

    def render_image(self) -> np.ndarray:
        self.graphics_engine.render_frame()
        texture = self.buffer.get_texture()
        data = texture.get_ram_image()
        # One float32 per pixel, since the buffer has only a red channel.
        frame = np.frombuffer(data, np.float32)
        # frame.shape = (texture.getYSize(), texture.getXSize(), texture.getNumComponents())
        frame.shape = (texture.getYSize(), texture.getXSize())
        # Panda3D stores images bottom row first; flip to top-down for plotting.
        frame = np.flipud(frame)
        return frame


if __name__ == '__main__':
    simulator = SceneSimulator()
    image = simulator.render_image()
    plt.imshow(image)
    plt.colorbar()
    plt.show()
```
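For option a, one possible route, sketched under assumptions: request an alpha channel too, for example with `frame_buffer_props.set_rgba_bits(32, 0, 0, 32)` (a hypothetical change, untested here). The fragment shader already writes alpha = 1 for every covered pixel, and the configured `background-color` has alpha 0.0, so the alpha plane could act as the hit mask without a dedicated 1-bit channel. Panda3D stores color RAM images in BGRA component order, so the sketch assumes the distance (red) sits at index 2 and alpha at index 3; `texture.get_ram_image_as('RGBA')` may be a way to avoid relying on that ordering. The function `decode_rgba_depth` is hypothetical and the input is synthetic.

```python
import numpy as np


def decode_rgba_depth(raw: bytes, height: int, width: int):
    """Decode a float32 BGRA RAM image into (depth, hit_mask).

    Assumes 4 float32 components per pixel in B, G, R, A order,
    with the distance in R and alpha = 1 for hits, 0 for background.
    """
    frame = np.frombuffer(raw, dtype=np.float32).reshape(height, width, 4)
    frame = np.flipud(frame)        # RAM images are stored bottom row first
    depth = frame[..., 2]           # red channel (index 2 in BGRA order)
    hit_mask = frame[..., 3] > 0.5  # alpha written as 1.0 by the shader
    return depth, hit_mask


# Synthetic 2x1 image, bottom row first: bottom pixel is a hit at 4.0,
# top pixel is the (white, alpha 0) background.
pixels = np.array([[[0.0, 0.0, 4.0, 1.0]],
                   [[1.0, 1.0, 1.0, 0.0]]], dtype=np.float32)
depth, hits = decode_rgba_depth(pixels.tobytes(), 2, 1)
print(depth.tolist(), hits.tolist())
```

Thresholding alpha at 0.5 rather than testing equality keeps the mask robust if the buffer format ends up storing alpha at lower precision than requested.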