Progress update!

I realized I was looking at the whole camera-masking approach wrong. Instead of showing the objects to a new display region and camera, I hid them from a camera. Then I compared that camera's buffer texture to the window buffer: if the pixels don't match, the outlined object must be at that location on the screen.
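To make the comparison step concrete, here is a minimal CPU-side sketch in plain Python (not Panda3D code). Each "render" is a list of rows of RGBA tuples, and a pixel is flagged wherever the two renders disagree. The name `object_mask` is made up for illustration, and the sketch assumes both renders are pixel-identical everywhere except where the hidden object is (same lens, same resolution), which is what the exact equality test relies on.

```python
from typing import List, Tuple

RGBA = Tuple[float, float, float, float]
Image = List[List[RGBA]]


def object_mask(window: Image, camera: Image) -> List[List[bool]]:
    """Hypothetical helper: True wherever the two renders disagree,
    i.e. where the object hidden from `camera` covers the pixel."""
    return [
        [w != c for w, c in zip(win_row, cam_row)]
        for win_row, cam_row in zip(window, camera)
    ]


if __name__ == "__main__":
    bg = (0.2, 0.2, 0.2, 1.0)   # background, seen by both cameras
    obj = (0.8, 0.1, 0.1, 1.0)  # object, seen only by the window camera
    window = [[bg, obj, bg]]
    camera = [[bg, bg, bg]]
    print(object_mask(window, camera))  # [[False, True, False]]
```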

The shader code is as follows:

```hlsl
//Cg

void vshader(
    float4 vtx_position : POSITION,
    float4 vtx_color : COLOR,
    float2 vtx_texcoord : TEXCOORD,
    uniform float4x4 mat_modelproj,
    out float4 l_position : POSITION,
    out float4 l_color : COLOR0,
    out float2 l_texcoord : TEXCOORD0)
{
    l_position = mul(mat_modelproj, vtx_position);
    l_color = vtx_color;
    l_texcoord = vtx_texcoord;
}

void fshader(
    float2 l_texcoord0 : TEXCOORD0,
    out float4 o_color : COLOR,
    uniform sampler2D wintex : TEXUNIT0,     // window buffer: full scene
    uniform sampler2D cameratex : TEXUNIT1)  // camera buffer: outlined object hidden
{
    float4 camt = tex2D(cameratex, l_texcoord0);
    float4 wint = tex2D(wintex, l_texcoord0);
    float4 color = wint;   // by default, pass the window image through
    float width = 0.0002;  // step size in texture coordinates

    // If the buffers differ here, the outlined object covers this pixel.
    if (any(camt != wint)) {
        // Step outwards in the four axis directions (i = 0 re-samples this pixel).
        for (int i = 0; i < 10; i++) {
            float2 dx = float2(width * i, 0.0);
            float2 dy = float2(0.0, width * i);

            float4 c1 = tex2D(cameratex, l_texcoord0 + dx);
            float4 w1 = tex2D(wintex, l_texcoord0 + dx);
            float4 c2 = tex2D(cameratex, l_texcoord0 - dx);
            float4 w2 = tex2D(wintex, l_texcoord0 - dx);
            float4 c3 = tex2D(cameratex, l_texcoord0 + dy);
            float4 w3 = tex2D(wintex, l_texcoord0 + dy);
            float4 c4 = tex2D(cameratex, l_texcoord0 - dy);
            float4 w4 = tex2D(wintex, l_texcoord0 - dy);

            // A neighbour where any RGB channel matches is treated as lying
            // outside the object, so this pixel is near its edge.
            if (any(c1.rgb == w1.rgb) || any(c2.rgb == w2.rgb) ||
                any(c3.rgb == w3.rgb) || any(c4.rgb == w4.rgb)) {
                color = float4(1, 1, 0, 1);  // outline colour: yellow
                break;
            }
        }
    }

    o_color = color;
}
```

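I know it probably isn't the most optimized or well-thought-out shader, but it gets the job done. `wintex` is the window texture buffer (the full scene) and `cameratex` is the camera texture (the outlined object hidden). Where the two differ, the pixel belongs to the outlined object. The `for` loop then samples neighbouring pixels in four directions, and if a neighbour matches between the two buffers, the pixel is near the model's edge and gets painted yellow. The loop count and `width` together set how thick the outline is.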
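The edge test in the loop can be mirrored on the CPU to see what it does. This is a hypothetical plain-Python sketch that works in whole pixels rather than texture coordinates: `outline` and `radius` are illustrative names, a neighbour outside the image is simply skipped (the shader would instead get whatever the sampler's wrap mode returns), and "uncovered" means a full match in the mask rather than the shader's any-channel test.

```python
from typing import List

Mask = List[List[bool]]


def outline(mask: Mask, radius: int) -> Mask:
    """Flag covered pixels that have an uncovered pixel within `radius` steps
    along one of the four axis directions (like the shader's loop)."""
    h, w = len(mask), len(mask[0])
    result = [[False] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            if not mask[y][x]:
                continue  # not part of the object, so never outlined
            for i in range(1, radius + 1):
                neighbours = [(x + i, y), (x - i, y), (x, y + i), (x, y - i)]
                # Out-of-range neighbours are skipped rather than sampled.
                if any(0 <= nx < w and 0 <= ny < h and not mask[ny][nx]
                       for nx, ny in neighbours):
                    result[y][x] = True
                    break  # one uncovered neighbour is enough
    return result


if __name__ == "__main__":
    row = [[False, True, True, True, True, True, False]]
    print(outline(row, 1))  # [[False, True, False, False, False, True, False]]
    print(outline(row, 2))  # [[False, True, True, False, True, True, False]]
```

Because the shader's `width` is in texture coordinates, the outline's reach depends on the buffer size. Assuming the texture spans the full 0..1 range, the last step is 9 × 0.0002 = 0.0018 of the texture: about 3.5 px horizontally on a 1920 × 1080 buffer, but only about 1.9 px vertically.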

My Panda3D code for the cameras is:

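```python
from panda3d.core import BitMask32, Vec4

# `application`, `camera` and `self.quad` come from the surrounding code.

# Camera rendering into 'normals_buf' with camera mask bit 1: objects hidden
# from that bit are missing from this texture.
self.buf = application.base.win.makeTextureBuffer('normals_buf', 0, 0)
self.buf.setClearColor(Vec4(0, 0, 0, 0))
self.camera = application.base.makeCamera(self.buf, camName='normals_camera',
                                          lens=application.base.cam.node().getLens())
self.camera.reparentTo(camera)
self.camera.node().setCameraMask(BitMask32.bit(1))

# Second camera with the default mask and the same lens: sees the full scene.
self.win_buf = application.base.win.makeTextureBuffer('win_buf', 0, 0)
self.win_buf.setClearColor(Vec4(0, 0, 0, 0))
self.win_camera = application.base.makeCamera(self.win_buf, camName='win_camera',
                                              lens=application.base.cam.node().getLens())
self.win_camera.reparentTo(camera)

# Feed both render textures to the outline shader on the 2D quad.
self.quad.set_shader_input('wintex', self.win_buf.getTexture())
self.quad.set_shader_input('cameratex', self.buf.getTexture())
```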
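This two-camera setup hinges on Panda3D's camera masks: `normals_camera` only draws nodes whose draw mask shares bit 1, so an object hidden from that bit (presumably via something like `model.hide(BitMask32.bit(1))`, which isn't shown here) is missing from `cameratex` but still present in `wintex`. Below is a simplified plain-Python model of that rule, not Panda3D's implementation: it ignores show-through and control masks, and `bit`, `hide` and `visible` are illustrative stand-ins.

```python
ALL_ON = 0xFFFFFFFF  # simplified stand-in for the default (nearly all bits on) masks


def bit(n: int) -> int:
    """Like BitMask32.bit(n): a mask with only bit n set."""
    return 1 << n


def hide(draw_mask: int, camera_mask: int) -> int:
    """Simplified model of NodePath.hide(mask): drop those bits from the node's draw mask."""
    return draw_mask & ~camera_mask & ALL_ON


def visible(draw_mask: int, camera_mask: int) -> bool:
    """In this model, a camera draws a node only if the two masks share a bit."""
    return (draw_mask & camera_mask) != 0


if __name__ == "__main__":
    outlined = hide(ALL_ON, bit(1))   # the object to outline
    print(visible(outlined, bit(1)))  # False: normals_camera skips it
    print(visible(outlined, ALL_ON))  # True: win_camera still draws it
    print(visible(ALL_ON, bit(1)))    # True: the rest of the scene is in both
```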

I then apply the shader to a 2D quad, which draws the outline.

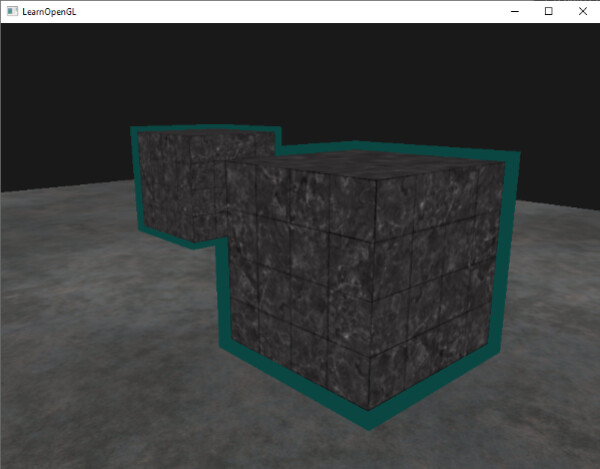

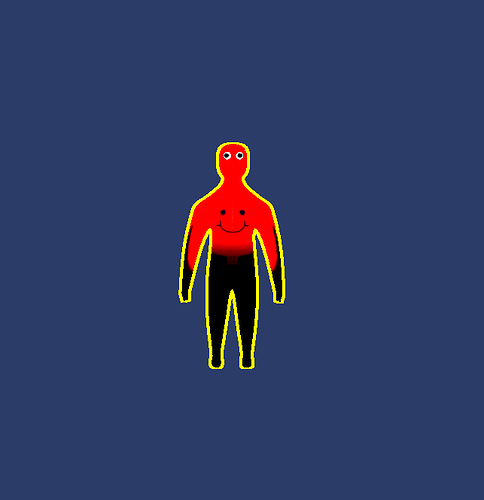

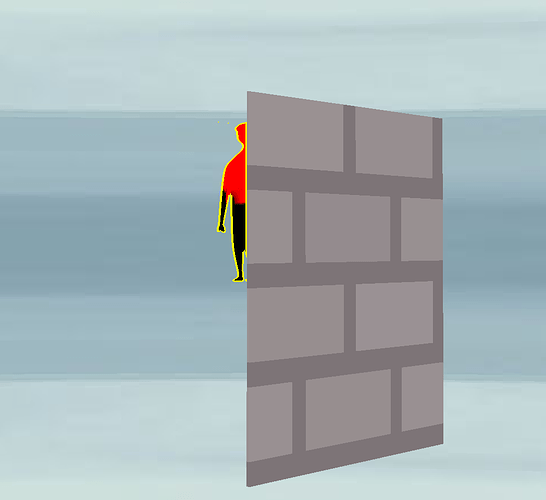

The final product looks like this:

I do think there's more work to do, like stopping the outline from showing when the object is partially behind a wall, but all in all, I think I can finally close this thread and mark this as the solution (even though it's no longer about stencils). I might post more complete code or results later if I decide to improve this, but for my current project, I think this will do.