Guys, please help me, because I’ve been struggling with the following problem for several days and I can’t come up with a solution, although it’s probably trivial and my problem is only due to my ignorance.

I’m writing a shader that has to draw something on the already rendered image (more precisely, it’s a volumetric light - I don’t know if it’s the best way to do a volumetric light, but let’s agree that it’s a volumetric light to the best of my current capabilities). Anyway, to more or less know from which point to which point to draw in a fragment shader, I need to know the coordinates of the start and end of the vector along which I am going to draw. And of course I need to know them in the coordinate system of the fragment shader (which I’m going to run with the FilterManager on the offscreen quad). But these vector coordinates I would like to get from the “3D world”. To do this, I came up with the idea of creating two fake vertices (alternatively, some small, invisible objects), one at the object where the volumetric light streak should start, and the other at the point where the light should end. And then I will check (somehow) how their position (x, y, z) translates into coordinates on the texture (u, v).

The question is: how to do it?

Initially, I tried to check it on the offscreen quad and calculate it in the vertex shader, using multiplication by p3d_ModelViewProjectionMatrix - but it probably doesn’t make sense, because after rendering the quad, there is no entity like my fake vertex/object, there is only a quad with its four vertices in the corners of the screen - which seems to be useless.

Trying to run it on my fake vertex/object or on base.render probably doesn’t make sense either: not only would I probably not get the coordinates in the coordinate system of the later fragment shader, I also wouldn’t have much of a way to pass the obtained coordinates on to the next (target) shader.

I would prefer not to create any special buffer or shaders for this, but (somehow) calculate the position of these 2 points at the Python level (such calculations for 2 points, even in slow Python, should be done very quickly), and then pass them as arguments to my fragment shader. Isn’t there some ready-made function for this?

You can’t use p3d_ModelViewProjectionMatrix because when Panda is rendering the fullscreen quad, it does so with a 2D camera with an orthographic projection. If you want access to the original version of this matrix, I can help you with the math; you would have to capture the camera and projection matrix from the main scene and pass them to the shader yourself.

How many streaks do you need to draw? Do you need to draw streaks for every pixel that is visible? The usual way to do volumetric lighting is to implement a kind of radial blur where you sample surrounding pixels, check if they are lit, and smear them outwards, and then do this over multiple passes. This is how the volumetric lighting effect in CommonFilters works, except it does only one pass.
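To make the radial blur idea a bit more concrete, here is a rough CPU-side sketch of what a single pass might compute for one pixel. It is written in plain Python only to show the logic; it is not the actual CommonFilters shader, and `radial_streak`, `is_lit`, `num_samples`, `density` and `decay` are hypothetical names chosen for illustration.

```python
def radial_streak(pixel_uv, light_uv, is_lit, num_samples=32, density=1.0, decay=0.95):
    """Estimate how much light is smeared onto one pixel.

    pixel_uv, light_uv: (u, v) pairs in texture space.
    is_lit: hypothetical callable (u, v) -> bool, standing in for a texture
            lookup that tells whether a sample belongs to a light caster.
    """
    # Step from the pixel towards the light source in equal increments.
    du = (light_uv[0] - pixel_uv[0]) * density / num_samples
    dv = (light_uv[1] - pixel_uv[1]) * density / num_samples

    u, v = pixel_uv
    weight = 1.0   # contribution of the current sample
    total = 0.0
    for _ in range(num_samples):
        u += du
        v += dv
        if is_lit(u, v):
            total += weight
        weight *= decay  # samples further along the ray count for less
    return total / num_samples
```

In a real filter this loop would run in the fragment shader for every pixel, with `is_lit` replaced by a texture sample.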

If you need to draw only one streak, rather than one for every pixel of the light source that is visible, then you can do the math on the CPU easily. This is how you transform an object position to clip space (-1 to 1 range on screen):

from panda3d.core import Point2

screenpos = Point2()

camera.node().getLens().project(object.getPos(camera), screenpos)

# Or if you have a position relative to a known object:

screenpos = Point2()

camera.node().getLens().project(camera.getRelativePoint(object, point), screenpos)

card.setShaderInputs(screenpos=screenpos)
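Note that `project()` yields coordinates in the -1 to 1 range, while texture coordinates in the fragment shader usually run from 0 to 1, so a remap is probably needed before comparing the point against the quad's (u, v). A minimal sketch, assuming the rendered texture covers the whole screen with no padding; `clip_to_uv` is a hypothetical helper, not a Panda3D function:

```python
def clip_to_uv(screenpos):
    """Map a 2D point from the -1..1 screen range to the 0..1 texture range.

    Assumes the texture spans the full screen without padding (an assumption;
    padded offscreen textures would need an extra scale factor).
    """
    x, y = screenpos[0], screenpos[1]
    return (x * 0.5 + 0.5, y * 0.5 + 0.5)
```

The same remap can just as well be done inside the shader, in which case the unmodified `screenpos` is passed in as before.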

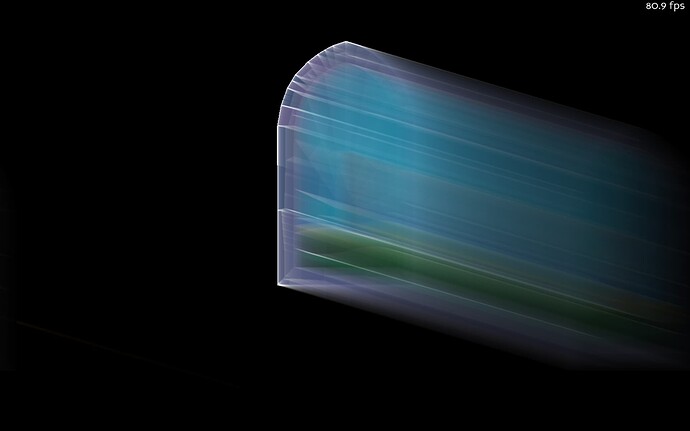

In fact, I didn’t need to access the original matrix, only to be able to recalculate the coordinates of a few points. Your code (for which I am grateful) was exactly what I needed, and my volumetric light has started working (and I am able to control where it starts and where it ends).

At the moment, I draw one parallel streak for each pixel, but I start drawing only when I come across an object that casts light (so far, I encode such objects with the alpha channel, which I do not use in the classic way). I tried doing it with stencils, but so far I haven’t figured it out (and the alpha channel works well). I basically came up with the whole algorithm myself. I see that a lot of people prefer multiple passes, but I try to avoid them as much as possible because, despite being more efficient, they make it harder for me to learn shaders. All the more so because ultimately I would like to be able to put all the effects into one shader and combine them in an order that does not have to follow successive passes.

Even if I end up drawing more streaks, there will still be a few streaks at most, so I think it’s something the CPU can handle.

Great, I am happy that you got it working.

If the CPU cost of transforming many points becomes an issue, instead of the code earlier, you can calculate the matrix once and then use mat.xformPointGeneral(point) multiple times (the "general" variant is needed because the projection matrix is not an affine transform), like so:

mat = object.getMat(base.cam) * base.camLens.getProjectionMat()

pt1 = mat.xformPointGeneral(pt1)

pt2 = mat.xformPointGeneral(pt2)
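For anyone wondering what `xformPointGeneral` does differently from a plain point transform, the following plain-Python sketch shows the idea: multiply the point as a homogeneous row vector by the 4x4 matrix, then divide by the resulting w. It assumes the row-vector convention (point times matrix); `xform_point_general` and `TOY_PROJECTION` are hypothetical stand-ins for illustration, and the toy matrix is not Panda's real projection matrix.

```python
def xform_point_general(point, mat):
    """Transform a 3D point by a 4x4 matrix, including the perspective divide."""
    x, y, z = point
    row = (x, y, z, 1.0)  # homogeneous row vector
    out = [sum(row[i] * mat[i][j] for i in range(4)) for j in range(4)]
    w = out[3]
    # Without this divide, a perspective matrix would give wrong screen positions.
    return (out[0] / w, out[1] / w, out[2] / w)


# Toy perspective matrix (assumption): camera looks along +Y with Z up,
# so screen x = x / y and screen y = z / y.
TOY_PROJECTION = [
    [1.0, 0.0, 0.0, 0.0],  # camera x -> screen x
    [0.0, 0.0, 1.0, 1.0],  # camera y (forward) -> depth and w
    [0.0, 1.0, 0.0, 0.0],  # camera z (up) -> screen y
    [0.0, 0.0, 0.0, 0.0],
]

# A point 2 units in front of the camera, 1 to the right and 0.5 up.
screen_point = xform_point_general((1.0, 2.0, 0.5), TOY_PROJECTION)
```

With the toy matrix, the point lands at half its camera-space x and z offsets, since it is 2 units away.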