Thanks for your answer.

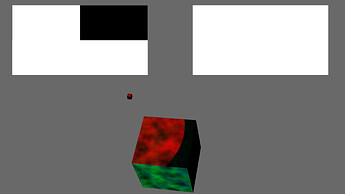

Actually, buffer 1 is displayed on cardmaker #2 (so inverted compared to the “normal” situation). Nothing is displayed on cardmaker #1.

With layer = 0, buffer 1 appears on both cardmakers:

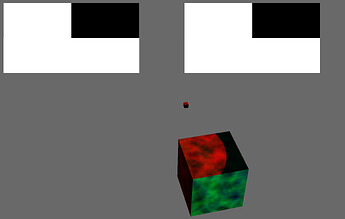

With layer = 1, no shadow buffer is displayed (which seems normal, since shadowmap #2 does not seem to be generated):

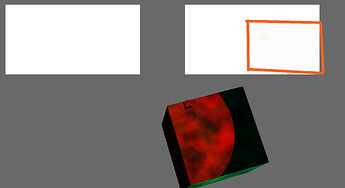

Thanks, indeed it seems much simpler. I tried it, but nothing changed (shadowmap #2 still does not appear):

sBuffer.addRenderTexture(self._tex_array, GraphicsOutput.RTM_bind_layered, GraphicsOutput.RTP_depth)

sBuffer.get_display_region(0).setTargetTexPage(0)

and

sBuffer2.addRenderTexture(self._tex_array, GraphicsOutput.RTM_bind_layered, GraphicsOutput.RTP_depth)

sBuffer2.get_display_region(0).disable_clears()

sBuffer2.get_display_region(0).setTargetTexPage(1)

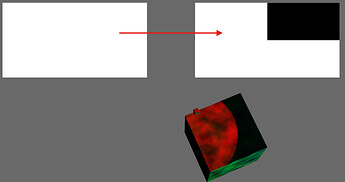

The only way to get shadowmap #2 displayed (and probably generated?) is to deactivate sBuffer1:

#sBuffer.addRenderTexture(self._tex_array, GraphicsOutput.RTM_bind_layered, GraphicsOutput.RTP_depth)

#sBuffer.get_display_region(0).disable_clears()

The sliced texture array is declared as a normal texture in the shader:

uniform sampler2DArray texture_array;

On the Python side, I declared it as a non-shadow texture:

self._state = SamplerState()

self._state.setMinfilter( SamplerState.FT_nearest)

self._state.setMagfilter( SamplerState.FT_linear )

self._tex_array.setDefaultSampler( self._state )

It is, however, attached to sBuffer1 and sBuffer2 through addRenderTexture with the RTP_depth plane. So is tex_array automatically converted to a shadow texture? (But P3D is not complaining about this.)

sBuffer.addRenderTexture(self._tex_array, GraphicsOutput.RTM_bind_layered, GraphicsOutput.RTP_depth)

One last thing comes to mind: to keep things quick, I use the P3D shader generator to generate the shadows and shade the 2 cubes. I am not sure how it could interfere with all of that, but:

When only sBuffer #1 is activated, a shadow is displayed on the cube

When only sBuffer #2 is activated, no shadow is displayed on the cube