Hi all,

I have a low-level, multistage render-to-texture pipeline in which each stage gets its own offscreen buffer and its own texture, set up as follows:

# Aliases used throughout this post (assumed: np is numpy, pc is panda3d.core)
import numpy as np
import panda3d.core as pc
from panda3d.core import ConfigVariableBool

immutableTextureStore = ConfigVariableBool("gl-immutable-texture-storage", False)
immutableTextureStore.setValue(True)

# Setup the engine stuff
engine = pc.GraphicsEngine.get_global_ptr()
pipe = pc.GraphicsPipeSelection.get_global_ptr().make_module_pipe("pandagl")
texW = 512
texH = 256

# Request 8 RGB bits, 8 alpha bits, and no depth buffer.
fb_prop = pc.FrameBufferProperties()
fb_prop.setRgbColor(True)
fb_prop.setRgbaBits(8, 8, 8, 8)
fb_prop.setDepthBits(0)

# Create a WindowProperties object set to size.
win_prop = pc.WindowProperties(size=(texW, texH))

# Don't open a window - force it to be an offscreen buffer.
flags = pc.GraphicsPipe.BF_refuse_window

# Create a GraphicsBuffer to render to, we'll get the textures out of this
finalBuffer = engine.makeOutput(pipe, "Buffer", 0, fb_prop, win_prop, flags)
ftex = pc.Texture("final texture")
ftex.setup2dTexture(texW, texH, pc.Texture.T_unsigned_byte, pc.Texture.F_rgba8)
ftex.setWrapV(pc.Texture.WM_clamp)
ftex.setWrapU(pc.Texture.WM_repeat)
ftex.setClearColor((0, 0, 0, 0))
finalBuffer.addRenderTexture(ftex, pc.GraphicsOutput.RTM_copy_ram)

def makeBuffer(textureName: str):
    # Each stage shares the GSG of finalBuffer and uses it as its host
    buffer = engine.makeOutput(pipe, "Buffer", 0, fb_prop, win_prop, flags, finalBuffer.getGsg(), finalBuffer)
    btex = pc.Texture(textureName)
    btex.setup2dTexture(texW, texH, pc.Texture.T_unsigned_byte, pc.Texture.F_rgba8)
    btex.setWrapV(pc.Texture.WM_clamp)
    btex.setWrapU(pc.Texture.WM_repeat)
    btex.setClearColor((0, 0, 0, 0))
    buffer.addRenderTexture(btex, pc.GraphicsOutput.RTM_copy_ram)
    return buffer, btex

# Create a scene graph, a camera, and a card to render to
cm = pc.CardMaker("card")

def makeScene(textureName: str):
    buffer, btex = makeBuffer(textureName)
    canvas = pc.NodePath("Scene")
    canvas.setDepthTest(False)
    canvas.setDepthWrite(False)
    card = canvas.attachNewNode(cm.generate())
    card.setZ(-1)
    card.setX(-1)
    card.setScale(2)
    cam2D = pc.Camera("Camera")
    lens = pc.OrthographicLens()
    lens.setFilmSize(2, 2)
    lens.setNearFar(0, 1000)
    cam2D.setLens(lens)
    camera = pc.NodePath(cam2D)
    camera.reparentTo(canvas)
    camera.setPos(0, -1, 0)
    display_region = buffer.makeDisplayRegion()
    display_region.camera = camera
    return card, btex

card1, btex1 = makeScene("voronoi")
card2, btex2 = makeScene("boundaries")
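
To spell out why the card is moved to (-1, -1) and scaled by 2, here is a minimal sketch of the arithmetic in plain Python. It assumes the card produced by CardMaker spans 0 to 1 on both axes by default (I believe that is the default frame, but I have not set it explicitly), and the helper `card_extent` is a hypothetical name used only for this illustration.

```python
def card_extent(local_min: float, local_max: float, scale: float, offset: float):
    """Map a local extent through a uniform scale followed by a translation."""
    return (offset + scale * local_min, offset + scale * local_max)

# Assumption: the generated card covers [0, 1] in X and Z before any transform.
x_range = card_extent(0.0, 1.0, scale=2.0, offset=-1.0)  # setScale(2), setX(-1)
z_range = card_extent(0.0, 1.0, scale=2.0, offset=-1.0)  # setScale(2), setZ(-1)

# An orthographic lens with film size (2, 2) sees [-1, 1] on both axes.
film_half_width = 2.0 / 2
film_half_height = 2.0 / 2

print(x_range, z_range)  # (-1.0, 1.0) (-1.0, 1.0)
```

Under that assumption the card exactly fills the 2 x 2 film, so every texel of the buffer is covered by the card's shader.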

The texture that ends up being altered is originally produced like so:

# Load the shader steps
voronoiShader = pc.Shader.load(pc.Shader.SL_GLSL, vertex="quad.vert", fragment="jumpflood.frag")
boundaryShader = pc.Shader.load(pc.Shader.SL_GLSL, vertex="quad.vert", fragment="boundaries.frag")

def jumpFlood(seeds, sphereXYZ: pc.Texture):
    # Place the seeds in the texture
    texArr = np.zeros((texH, texW, 4), dtype=np.dtype('B'))
    for seed in range(min(seeds, 255)):
        i = np.random.randint(0, texH)
        j = np.random.randint(0, texW)
        texArr[i, j, 0] = (j * 255) / float(texW)
        texArr[i, j, 1] = (i * 255) / float(texH)
        texArr[i, j, 2] = 1 + seed
        texArr[i, j, 3] = 255
    seedsTex = arrayToTexture("seeds", texArr, 'RGBA', pc.Texture.F_rgba8)
    storeTextureAsImage(seedsTex, "seeds")

    # Compute the maximum steps
    N = max(texW, texH)
    maxSteps = int(np.log2(N))

    # Attach shader, load uniforms, and run
    card1.set_shader(voronoiShader)
    card1.set_shader_input("jumpflood", seedsTex)
    card1.set_shader_input("sphereXYZ", sphereXYZ)
    card1.set_shader_input("maxSteps", float(maxSteps))
    card1.set_shader_input("texSize", (float(texW), float(texH)))

    # Start jumping
    for step in range(maxSteps + 2):
        card1.set_shader_input("level", step)
        engine.renderFrame()
        # After each pass, feed the output back in as the next pass's input
        card1.set_shader_input("jumpflood", btex1)
    return btex1
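
For reference, here is a minimal sketch of how many render passes the loop above makes for my texture size, using only the standard library. The function names are hypothetical, and `halving_offsets` shows the conventional jump-flood schedule of N/2, N/4, ..., 1 as an assumption; jumpflood.frag is not shown here and may map `level` to an offset differently.

```python
import math
from typing import List, Tuple

def jump_flood_passes(tex_w: int, tex_h: int) -> Tuple[int, int]:
    """Return (max_steps, render_passes) as computed in jumpFlood()."""
    n = max(tex_w, tex_h)
    max_steps = int(math.log2(n))   # same value as int(np.log2(N))
    render_passes = max_steps + 2   # the loop runs range(maxSteps + 2)
    return max_steps, render_passes

def halving_offsets(n: int) -> List[int]:
    """Conventional jump-flood offsets n/2, n/4, ..., 1 (an assumption here)."""
    offsets = []
    step = n // 2
    while step >= 1:
        offsets.append(step)
        step //= 2
    return offsets

max_steps, render_passes = jump_flood_passes(512, 256)
print(max_steps, render_passes)   # 9 11
print(halving_offsets(512))       # [256, 128, 64, 32, 16, 8, 4, 2, 1]
```

So for the 512 x 256 textures, jumpFlood() calls engine.renderFrame() eleven times before returning btex1.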

btex1 is passed out into another variable which is then passed into the function which modifies it:

voronoi = jumpFlood(seeds, sphereXYZ)
storeTextureAsImage(voronoi, "jumpflood 0")
boundaries, vbs = plateBoundaries(seeds, 2, voronoi)

The method plateBoundaries() accepts voronoi and passes it into a new shader, using a new card and a new buffer:

def plateBoundaries(seeds, smoothingSteps: int, voronoi: pc.Texture):
    # Generate velocity bearings
    velocityBearings = [0]*256
    for i in range(min(seeds, 256)):
        velocityBearings[i] = np.random.random()*2*np.pi

    # Attach shader and load uniforms
    card2.set_shader(boundaryShader)
    card2.set_shader_input("voronoi", voronoi)
    card2.set_shader_input("texSize", (float(texW), float(texH)))
    card2.set_shader_input("velocityBearings", velocityBearings)
    card2.set_shader_input("boundaryBearings", boundaryBearings)

    # Run
    engine.renderFrame()
    storeTextureAsImage(voronoi, "jumpflood 0.5")
    storeTextureAsImage(btex2, "boundaries")
    return btex2, velocityBearings[:seeds]

The fragment shader attached to boundaryShader is 140 lines long, so I won’t paste it all here, but the only lines that interact with voronoi are:

uniform sampler2D voronoi;
...
void main() {
    if (texture2D(voronoi, texcoord).a > 0.0) {
        p3d_FragColor = vec4(0.0);
        return;
    }
    ...
    float ourplate = texture2D(voronoi, texcoord).b;
    ...
    float theirplate = texture2D(voronoi, texcoord+vec2(0,-up)).b;
    ...
    theirplate = texture2D(voronoi, texcoord+vec2(-right,-up)).b;
    ...
    // 6 more repetitions of the above
}

The code for storeTextureAsImage() is:

def storeTextureAsImage(texture: pc.Texture, filename: str):
    frame = pc.PNMImage()
    # If the texture has no RAM copy, pull it back from the GPU first
    if not texture.hasRamImage():
        engine.extractTextureData(texture, finalBuffer.get_gsg())
        print(texture.getName(), "missing RAM image, attempting to extract")
    print(texture.getName(), "has RAM image:", texture.hasRamImage())
    print(texture.getName(), "transferred to image:", texture.store(frame))
    print("image successfully written to disk:", frame.write(path_p3d+filename+".png"), "\n")

The crux of the issue is that jumpflood 0.png and jumpflood 0.5.png are different images:

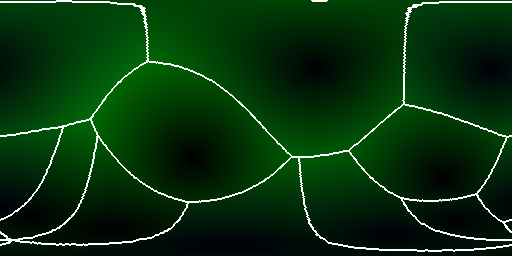

jumpflood 0.png:

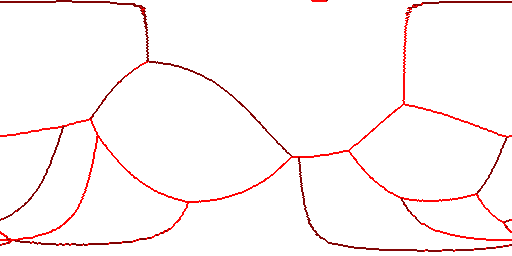

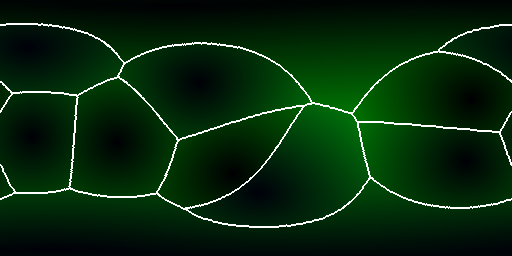

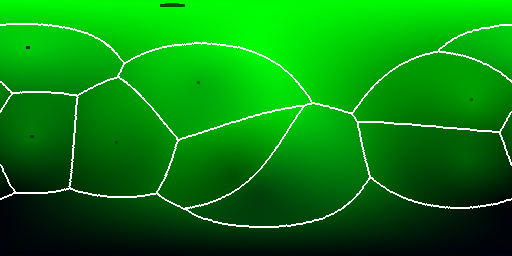

jumpflood 0.5.png:

I can’t think of any way that boundaryShader could modify a texture that is only passed to it as a sampler uniform. I considered a memory barrier issue, but jumpflood 0.png is written to disk before the render call that precedes jumpflood 0.5.png is made, and before voronoi has even been passed to boundaryShader.
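
To narrow down where the change happens, one approach I can take is to fingerprint the texture bytes after each step and find the first step at which the fingerprint differs. Below is a minimal sketch of that idea in plain Python; `fingerprint` and `SnapshotLog` are hypothetical helpers, and the byte strings are stand-ins for real texture data.

```python
import hashlib

def fingerprint(data: bytes) -> str:
    """Short, stable digest of a block of texture bytes."""
    return hashlib.sha256(data).hexdigest()[:16]

class SnapshotLog:
    """Records labelled digests and reports where the data first changes."""

    def __init__(self):
        self.entries = []  # list of (label, digest) in recording order

    def record(self, label: str, data: bytes) -> str:
        digest = fingerprint(data)
        self.entries.append((label, digest))
        return digest

    def first_change(self):
        """Label of the first snapshot that differs from its predecessor, or None."""
        for (_, prev), (label, cur) in zip(self.entries, self.entries[1:]):
            if cur != prev:
                return label
        return None

# Stand-in data; in the real pipeline these would be the texture's RAM image bytes.
log = SnapshotLog()
log.record("after jumpFlood", b"\x00\x01\x02\x03")
log.record("after set_shader_input", b"\x00\x01\x02\x03")
log.record("after renderFrame", b"\x00\x01\x02\xff")
print(log.first_change())  # after renderFrame
```

In my pipeline the bytes would come from the RAM image of voronoi, for example via `bytes(voronoi.getRamImage())`; I am assuming the returned array can be converted that way. Recording a snapshot before and after each call inside plateBoundaries() would show which call the texture changes across.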

Any help is greatly appreciated! This issue has confounded me for several days now.