I apologize in advance, because I already know this is a dumb question. I’m returning to OpenGL (and Panda3D) after a bit of an absence. I’ve been googling and searching the forum here, and so far I can’t figure this out.

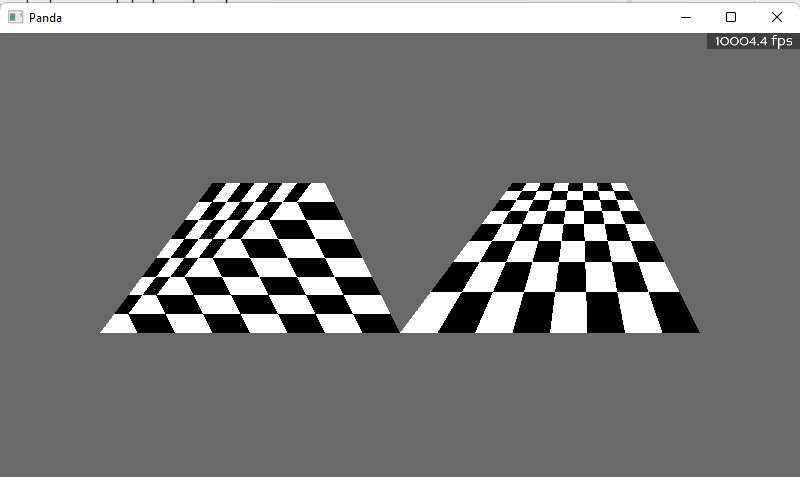

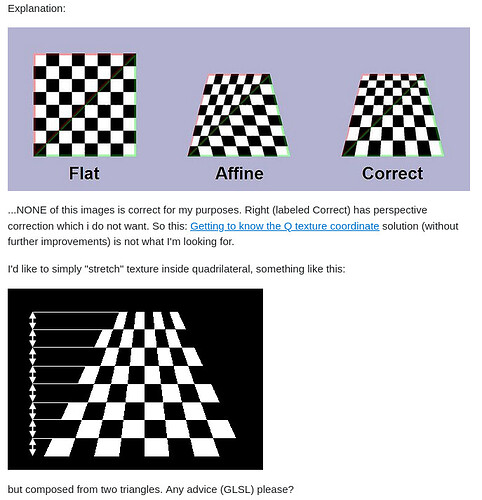

I am attempting to texture a quad with an image from a [real] camera. When I draw my quad with all four corners equidistant from the viewer, I get a nice-looking image (like “Flat” in the diagram above). But I am estimating the true 3D location of the corners of the image in the world, so the corners are actually not equidistant from the viewer. Visually, each corner still lies approximately along the same projection vector, so the on-screen shape of my quad stays the same. However, when I move the corners nearer/further relative to each other, I see a result similar to “Affine” in the diagram. What I want is the perspective-correct result, like “Correct” in the diagram.
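The observation that sliding a corner along its projection ray leaves the quad’s on-screen outline unchanged can be checked with a simple pinhole model. This is a minimal sketch assuming a camera at the origin looking down +z with an arbitrary focal length; `project` and `move_along_ray` are hypothetical helpers for illustration, not Panda3D API.

```python
# Hypothetical pinhole model: camera at the origin looking down +z.
# 'focal' is an assumed focal length; any positive value works.

def project(point, focal=1.0):
    """Project a 3D point (x, y, z), with z > 0, onto the image plane."""
    x, y, z = point
    return (focal * x / z, focal * y / z)

def move_along_ray(point, scale):
    """Slide a point nearer (scale < 1) or further (scale > 1) along its view ray."""
    x, y, z = point
    return (x * scale, y * scale, z * scale)

corner = (1.0, 0.5, 2.0)
print(project(corner))                       # (0.5, 0.25)
print(project(move_along_ray(corner, 3.0)))  # (0.5, 0.25): same screen position
```

What does change when a corner moves along its ray is its depth, and depth is exactly the quantity that perspective-correct interpolation depends on. That is why the texture distortion can change even though the outline does not.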

From googling around, it seems that perspective-correct texture interpolation is the default on modern graphics cards? But I am not seeing that result in my Panda3D app, and I haven’t been able to find any Panda3D configuration option or setting that enables or disables perspective-correct texture mapping.
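For reference, this is the rule a rasterizer applies per triangle when it interpolates perspective-correctly: it interpolates `a/w` and `1/w` linearly in screen space, then divides. Affine interpolation skips the division by `w`. A minimal sketch, assuming screen-space barycentric weights and a clip-space `w` per vertex; `affine_interp` and `perspective_interp` are hypothetical names.

```python
def affine_interp(bary, attrs):
    """Screen-space (affine) interpolation: weight each vertex attribute directly."""
    return sum(b * a for b, a in zip(bary, attrs))

def perspective_interp(bary, attrs, ws):
    """Perspective-correct interpolation of one attribute across a triangle.

    bary  -- screen-space barycentric weights of the pixel (summing to 1)
    attrs -- per-vertex attribute values, e.g. the u texture coordinate
    ws    -- per-vertex clip-space w (equal to eye-space depth for a
             standard perspective projection)
    """
    num = sum(b * a / w for b, a, w in zip(bary, attrs, ws))
    den = sum(b / w for b, w in zip(bary, ws))
    return num / den

# A pixel halfway along the edge from a near vertex (u=0, w=1)
# to a far vertex (u=1, w=3); the third vertex has zero weight.
bary = (0.5, 0.5, 0.0)
print(affine_interp(bary, (0.0, 1.0, 0.0)))                               # 0.5
print(round(perspective_interp(bary, (0.0, 1.0, 0.0), (1.0, 3.0, 1.0)), 6))  # 0.25
```

When all three `w` values are equal (the “Flat” case, where every corner is the same distance away), the two functions return the same value. They only diverge once the corners sit at different depths.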

Is there anyone here who can point me in the right direction?

For now I’m just drawing textured triangles (not optimized tristrips/fans). I don’t think that should make any difference, but I’m mentioning it in case it does.
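One case where the triangle split can matter is worth ruling out. If the four estimated corners are not coplanar, the two triangles lie in different planes, and each one is interpolated perspective-correctly against its own plane, so the texture may kink along the shared diagonal. A minimal stdlib-only sketch for checking this; the helper names and the tolerance are hypothetical choices.

```python
def sub(p, q):
    return (p[0] - q[0], p[1] - q[1], p[2] - q[2])

def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]

def quad_is_planar(p0, p1, p2, p3, tol=1e-6):
    """Return True if p3 lies within 'tol' of the plane through p0, p1, p2."""
    n = cross(sub(p1, p0), sub(p2, p0))   # normal of the first triangle's plane
    length = dot(n, n) ** 0.5
    if length == 0.0:
        raise ValueError("first three corners are collinear")
    distance = abs(dot(n, sub(p3, p0))) / length
    return distance <= tol

flat = [(-1.0, -1.0, 2.0), (1.0, -1.0, 2.0), (1.0, 1.0, 2.0), (-1.0, 1.0, 2.0)]
# Same quad, but the last corner pushed 1.5x further along its view ray.
bent = flat[:3] + [(-1.5, 1.5, 3.0)]
print(quad_is_planar(*flat))  # True
print(quad_is_planar(*bent))  # False
```

If the estimated corners fail this check, a visible seam along the diagonal would be expected; if they pass, the triangle split is probably not the issue.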

I’m running panda-1.10.11 on ubuntu-20.04.5 with python-3.8, on a ThinkPad X1 (Intel graphics hardware, I believe). Edit: it is Intel i915 graphics hardware; lsmod says the i915 kernel driver is loaded.

Am I imagining a feature that doesn’t exist in Panda3D or OpenGL? Or should this be working, and I’ve found some other way to shoot myself in the foot? Any help, guidance, nudges, or comments would be wonderful. Thanks in advance!