I want to render a scene on top of an image.

In onscreen mode, I use this line to load the image as the background:

```python
from direct.gui.OnscreenImage import OnscreenImage

OnscreenImage(parent=self.scene.render2d, image=camera_frame['file_name'])
```

In offscreen mode, however, I use a `CardMaker` instead:

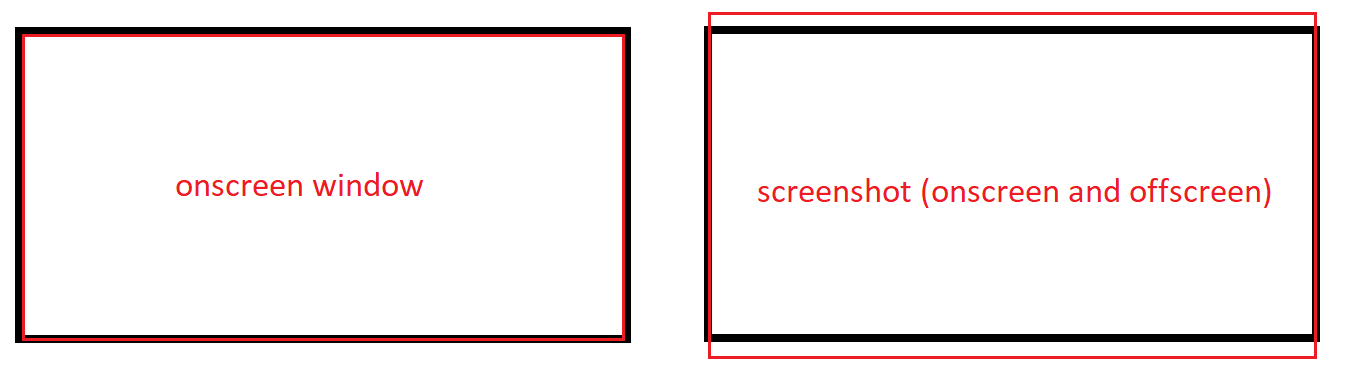

I also want to save the rendered scene to a local file. The code below does that, but it has two problems.

First, I have to call `renderFrame()` twice for the background image to appear in the saved image.

Second, in offscreen mode, getting the display region with `base.camNode.getDisplayRegion(0)` produces an 800x600 image, while the real image is much bigger than that.

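```python
from panda3d.core import CardMaker, Filename, PNMImage, Texture, TextureStage

# Load the background image from disk into a texture.
bg_tex = Texture()
bg = PNMImage()
bg.read(Filename(frame["file_name"]))
bg_tex.load(bg)

# Put the texture on a card attached to the 3D scene.
cm = CardMaker('')
card = base.render.attachNewNode(cm.generate())
card.setTexture(bg_tex, 1)
# Scale texture coordinates to skip any padding Panda added to the texture.
card.setTexScale(TextureStage.getDefault(), bg_tex.getTexScale())

# The background only shows up in the saved image after two frames.
base.graphicsEngine.renderFrame()
base.graphicsEngine.renderFrame()

# Save the main camera's display region (800x600 in offscreen mode).
dr = base.camNode.getDisplayRegion(0)
base.screenshot(source=dr)
```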
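On the size problem: 800x600 is Panda3D's default `win-size`, and the main camera's display region covers the whole main window, so it reports whatever size that window was opened with. A minimal sketch, assuming the image size can be read before ShowBase starts, is to set the `window-type offscreen` and `win-size` config variables with `loadPrcFileData`. For the double `renderFrame()`, I'm not sure of the cause; `save_frame` copies the finished frame into a RAM texture (`RTMCopyRam`) and turns off incomplete rendering on the 3D display region, which is a guess to try rather than a confirmed fix. `offscreen_config`, `start_offscreen` and `save_frame` are hypothetical helper names.

```python
def offscreen_config(width, height):
    """Return PRC lines for an offscreen main window of width x height pixels."""
    return f"window-type offscreen\nwin-size {width} {height}\n"


def start_offscreen(image_path):
    """Start ShowBase offscreen, with the main buffer sized to the image."""
    from panda3d.core import Filename, PNMImageHeader, loadPrcFileData

    # Read only the image header to get its dimensions.
    header = PNMImageHeader()
    if not header.readHeader(Filename.fromOsSpecific(image_path)):
        raise IOError(f"could not read image header: {image_path}")
    loadPrcFileData("", offscreen_config(header.getXSize(), header.getYSize()))

    # Import ShowBase only after the PRC data is in place.
    from direct.showbase.ShowBase import ShowBase
    return ShowBase()


def save_frame(base, out_path):
    """Render one frame and write the whole main window to out_path."""
    from panda3d.core import Filename, GraphicsOutput, Texture

    # Ask Panda to copy each finished frame of base.win into system RAM.
    tex = Texture("capture")
    base.win.addRenderTexture(tex, GraphicsOutput.RTMCopyRam)

    # Guess, not a confirmed fix: hold the frame until the textures it needs
    # are available instead of drawing it without them.
    base.camNode.getDisplayRegion(0).setIncompleteRender(False)

    base.graphicsEngine.renderFrame()
    base.win.clearRenderTextures()  # stop copying after this frame

    if not tex.write(Filename.fromOsSpecific(out_path)):
        raise IOError(f"could not write {out_path}")
```

With `base = start_offscreen(camera_frame['file_name'])`, `base.camNode.getDisplayRegion(0)` should then report the image's size instead of 800x600, although very large images may still exceed the maximum buffer size the graphics driver supports.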

I also tried creating an offscreen buffer myself with something like:

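```python
from panda3d.core import GraphicsPipe

# buffer_props (FrameBufferProperties) and win_props (WindowProperties)
# are built elsewhere (not shown).
window = base.graphicsEngine.makeOutput(
    base.pipe, '', 0, buffer_props, win_props,
    GraphicsPipe.BFRefuseWindow
)
```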

But I couldn’t get it to work in the end.
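For the `makeOutput` route, the question doesn't show how `buffer_props` and `win_props` were built, so the sketch below fills them in itself; `make_capture_buffer` is a hypothetical helper and the bit depths are illustrative. Two details that are easy to miss: passing the main window's GSG and the window itself as host, and the fact that a freshly made buffer has no cameras, so nothing from the scene is drawn into it until display regions are added. Here that is done with ShowBase's `makeCamera2d` (background on `render2d`) and `makeCamera` (scene on `render`). Refusing a parasite buffer is an assumption on my part: a parasite draws into the host window's framebuffer, so it could not be larger than that window.

```python
def make_capture_buffer(base, width, height):
    """Offscreen buffer of width x height that draws render2d (background)
    and render (scene), copying each frame into a RAM texture."""
    from panda3d.core import (FrameBufferProperties, GraphicsOutput,
                              GraphicsPipe, Texture, WindowProperties)

    fb_props = FrameBufferProperties()
    fb_props.setRgbColor(True)
    fb_props.setRgbaBits(8, 8, 8, 8)
    fb_props.setDepthBits(24)
    win_props = WindowProperties.size(width, height)

    # Share the main window's GSG and use the window as host; sort -2
    # renders this buffer before the main window. Refusing a parasite
    # buffer avoids being limited to the host window's size.
    flags = GraphicsPipe.BFRefuseWindow | GraphicsPipe.BFRefuseParasite
    buffer = base.graphicsEngine.makeOutput(
        base.pipe, "capture buffer", -2, fb_props, win_props,
        flags, base.win.getGsg(), base.win)
    if buffer is None:
        raise RuntimeError("makeOutput could not create the buffer")

    # Copy each finished frame of the buffer into system RAM.
    tex = Texture("capture")
    buffer.addRenderTexture(tex, GraphicsOutput.RTMCopyRam)

    # A new buffer has no cameras. The 2D camera (lower sort) draws the
    # background first; the 3D camera then draws the scene on top of it.
    base.makeCamera2d(buffer, sort=0)
    base.makeCamera(buffer, sort=10)
    return buffer, tex
```

After one `base.graphicsEngine.renderFrame()`, calling `tex.write(Filename("render.png"))` on the returned texture should save the frame at the buffer's size; this may still need checking on a given driver.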