My code works great on my Windows machine, but when I recently tried it on my Linux machine it was very slow. After some investigation, I found that it does not use the GPU for rendering. I know this may not be a Panda3D issue, but I am asking here in case someone else has hit the same problem and can share how they solved it. I have tried a few things but still can’t make it work. I am on Ubuntu 18.04 and installed the 460 driver with sudo apt install nvidia-driver-460. More details are here: Ubuntu 19.04 Driver Installed but not Used - Graphics / Linux / Linux - NVIDIA Developer Forums.

Do other applications use the Nvidia GPU?

(This page might help, if called for: drivers - How do I check if Ubuntu is using my NVIDIA graphics card? - Ask Ubuntu)

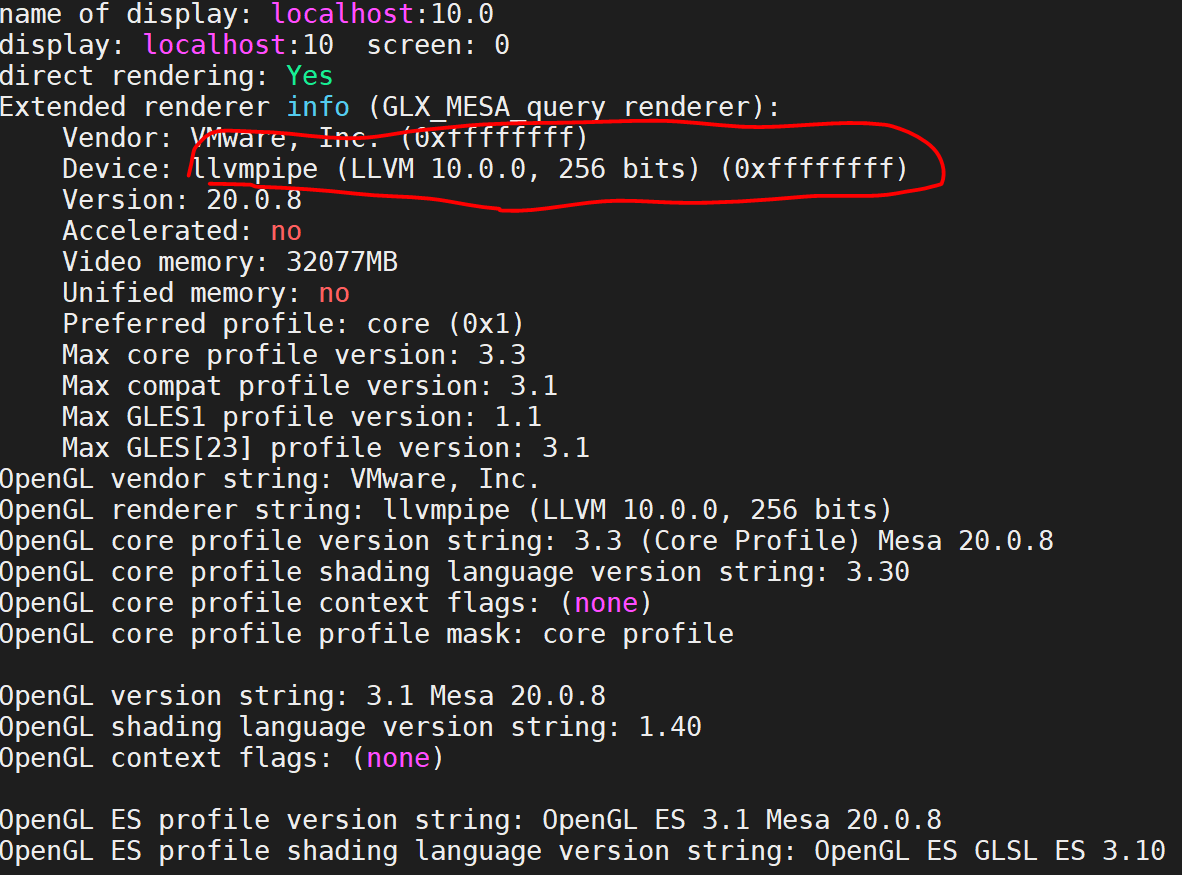

I don’t think so. Below is the output of glxinfo -B; it indicates that OpenGL is using software rendering, not the GPU.
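For anyone who wants to script this check, here is a minimal Python sketch that parses glxinfo -B output and flags a software renderer. The function names and the list of hint strings are my own illustration, not part of any tool, and the list is certainly incomplete.

```python
import os
import subprocess

# Substrings that commonly show up in the renderer string of software or
# virtualized OpenGL implementations. Illustrative only, not exhaustive.
SOFTWARE_HINTS = ("llvmpipe", "softpipe", "swrast", "svga3d", "software rasterizer")


def parse_glxinfo(text):
    """Turn the 'key: value' lines of `glxinfo -B` output into a dict."""
    info = {}
    for line in text.splitlines():
        key, sep, value = line.partition(":")
        if sep:
            info[key.strip()] = value.strip()
    return info


def looks_like_software_rendering(info):
    """Return True if the renderer string matches a known software renderer."""
    renderer = info.get("OpenGL renderer string", "").lower()
    return any(hint in renderer for hint in SOFTWARE_HINTS)


def run_glxinfo(display=None):
    """Run `glxinfo -B`, optionally against a specific X display such as ':0'."""
    env = dict(os.environ)
    if display is not None:
        env["DISPLAY"] = display
    result = subprocess.run(
        ["glxinfo", "-B"], env=env, capture_output=True, text=True, check=True
    )
    return result.stdout
```

Calling looks_like_software_rendering(parse_glxinfo(run_glxinfo())) on the affected machine should return True as long as the renderer string contains one of the hints; passing display=":0" to run_glxinfo checks a specific X display instead of the one in the environment.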

You are right, it is probably a driver issue, but I am a bit lost now. Let me check that link again.

I would recommend using the Software & Updates - Additional Drivers interface if possible. This allows a graphical selection of available NVIDIA drivers.

I see “VMWare” in the output. Is this a virtual or a “real” machine?

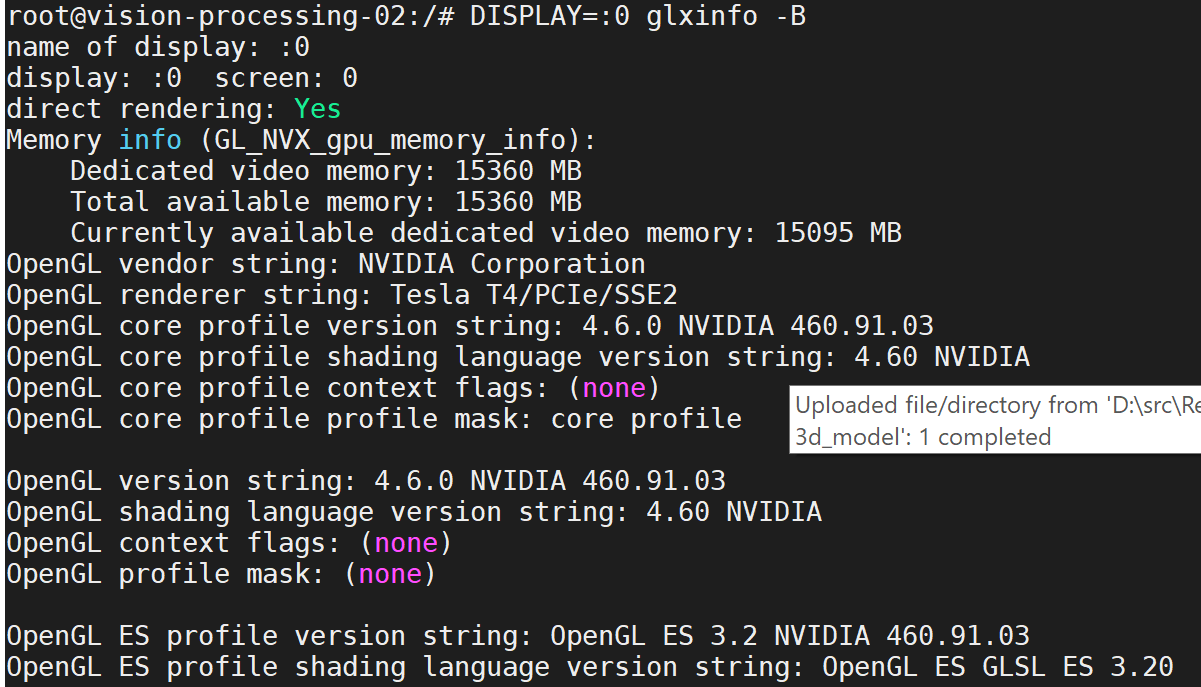

It is a real machine, but it has no monitor connected; I use MobaXterm to SSH into it. I found a workaround of sorts: create a virtual screen with sudo nvidia-xconfig -a --use-display-device=None --virtual=1280x1024, start the X server with sudo /usr/bin/X :0 &, and then run programs against that display, e.g. DISPLAY=:0 glxinfo -B.

But my final goal is to render without an X server on a cloud machine. I am trying the p3headlessgl approach mentioned in another thread. Will this work?

    from panda3d.core import loadPrcFileData

    loadPrcFileData("", "window-type offscreen")
    loadPrcFileData("", "aux-display p3headlessgl")
    loadPrcFileData("", "load-display pandagl")
    loadPrcFileData("", "gl-coordinate-system default")
    loadPrcFileData("", "notify-level-gobj info")
    loadPrcFileData("", "audio-library-name null")  # Prevent ALSA errors

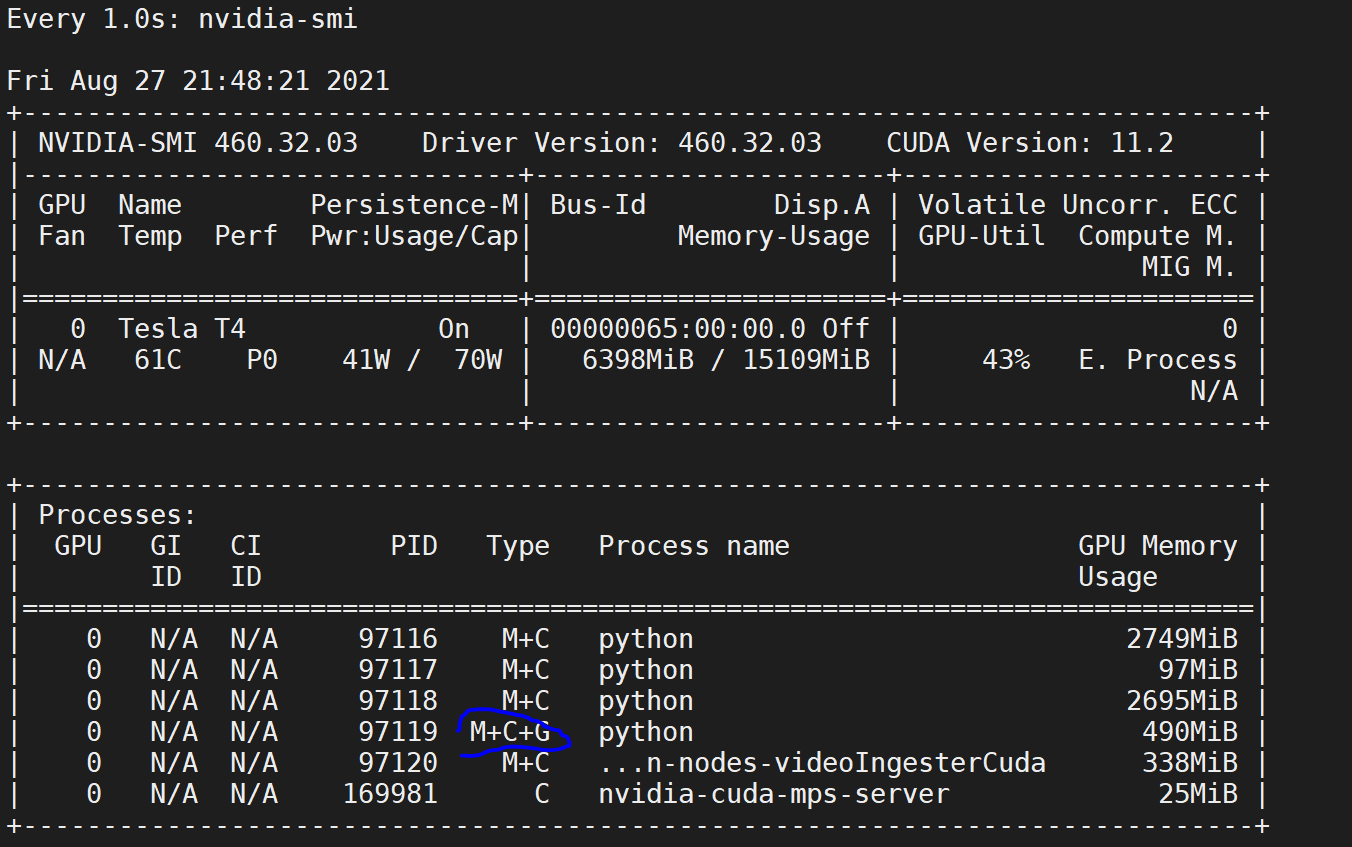

I tested the code above on another machine without an X server running. The Type column in nvidia-smi shows G for the process. Does this mean Panda3D used the GPU hardware for rendering?

I recommend adding notify-level-glgsg debug to the config. This makes Panda3D print information about the graphics capabilities, including which GPU is being used.
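A rough sketch of how that could look in a script, in case it helps. The helper names build_prc and report_gpu are made up for this example. It also assumes a Panda3D build that ships the p3headlessgl module, and it loads that module directly with load-display, which is a variation on the config posted earlier rather than the same thing. Besides the debug log, it asks the graphics state guardian for the driver strings.

```python
def build_prc(headless=True, debug_gl=True):
    """Assemble the PRC settings as one multi-line string."""
    lines = [
        "window-type offscreen",
        "audio-library-name null",  # avoid ALSA errors on machines without audio
        # Assumption: p3headlessgl is available in the installed Panda3D build.
        "load-display " + ("p3headlessgl" if headless else "pandagl"),
    ]
    if debug_gl:
        # Makes the GL backend log its capabilities, including the GPU in use.
        lines.append("notify-level-glgsg debug")
    return "\n".join(lines)


def report_gpu(prc_text):
    """Open an offscreen buffer and return (vendor, renderer, version)."""
    # Imported here so build_prc() can be used without Panda3D installed.
    from panda3d.core import loadPrcFileData
    from direct.showbase.ShowBase import ShowBase

    loadPrcFileData("", prc_text)  # must happen before ShowBase is created
    base = ShowBase()
    base.graphicsEngine.render_frame()  # make sure the GL context is initialized
    gsg = base.win.get_gsg()
    return (
        gsg.get_driver_vendor(),
        gsg.get_driver_renderer(),
        gsg.get_driver_version(),
    )
```

Running print(report_gpu(build_prc())) on the cloud machine should show the vendor and renderer strings. If the renderer mentions llvmpipe or similar rather than the NVIDIA card, rendering is still happening in software.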