I recently activated the built-in shadow-casting in my current project, and was pleasantly surprised at how simple it is to set up.

However, I’ve encountered some issues, and would appreciate advice on how to deal with them:

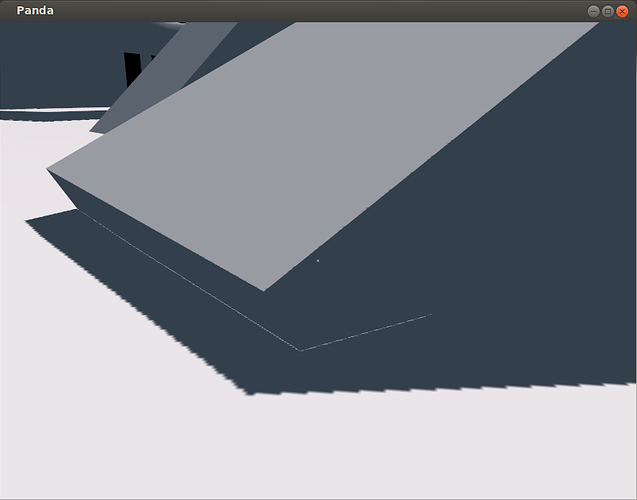

First, shadows have an unsightly bright line where they meet the geometry that casts them. The shadow doesn’t seem to quite reach that geometry, which leaves a thin strip fully lit. The following screenshot should illustrate the effect:
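For what it’s worth, a common cause of this kind of gap (often called “peter-panning”) is the depth bias that shadow-map lookups add to avoid self-shadowing acne. I haven’t checked what bias Panda3D’s generated shader actually uses, so treat that as an assumption. The generic pure-Python sketch below models a vertical wall on flat ground, lit at a given elevation (45° would match the pitch in `setHpr(-45, -45, 0)`), and walks away from the wall’s base to find where the ground first tests as shadowed. The helper names are hypothetical and are not Panda3D API.

```python
import math


def in_shadow(receiver_depth, occluder_depth, bias):
    # Classic shadow-map comparison: the receiver counts as shadowed only if it
    # lies farther from the light than the stored occluder depth plus the bias.
    return receiver_depth > occluder_depth + bias


def first_shadowed_distance(bias, elevation_deg, wall_height=5.0, step=0.001):
    """Walk along flat ground away from the base of a vertical wall and return
    the first distance at which the ground is reported as shadowed (or None)."""
    theta = math.radians(elevation_deg)
    shadow_length = wall_height / math.tan(theta)  # geometric extent of the shadow
    x = 0.0
    while x < shadow_length:
        # With an orthographic shadow lens, depth is measured along the light
        # direction. The ray through a ground point at distance x hits the wall
        # x / cos(theta) units before it reaches the ground.
        depth_difference = x / math.cos(theta)
        if in_shadow(depth_difference, 0.0, bias):
            return x
        x += step
    return None
```

Under this model, the wrongly lit strip is about `bias * cos(elevation)` wide. It shrinks with a smaller bias, or disappears into the caster if the casting geometry is thicker than that.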

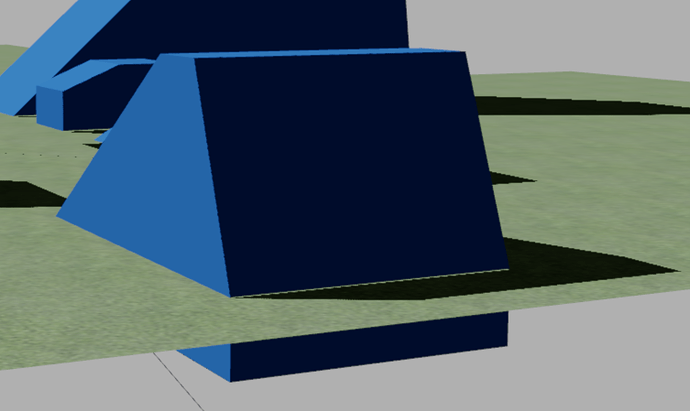

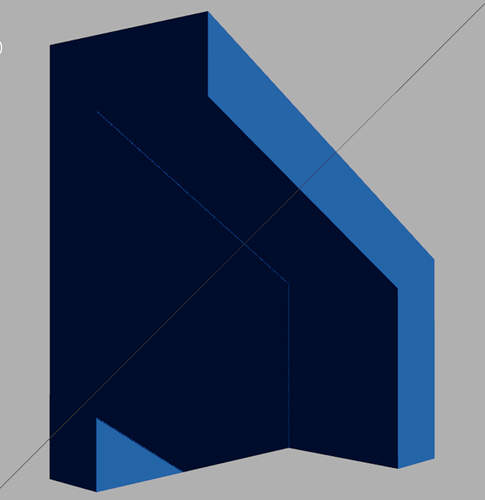

Second, I seem to have to choose between casting shadows over an area large enough that the edge of the shadowed region isn’t too apparent, and minimising the jagged edges of the shadows. As far as I’ve found so far, these are mainly affected by the texture size specified when calling `setShadowCaster` and by the lens’s film size. I’m hesitant to increase the texture size too much; am I being overcautious? As for the film size, increasing it results in visible jaggies, while decreasing it causes the shadows to vanish at distances that seem quite close.
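To put numbers on the trade-off: with an orthographic shadow lens, each shadow-map texel covers roughly `film size / texture size` world units along each axis. At a fixed texture size, doubling the film size doubles the texel size, and so the size of the jaggies. The sketch below computes that figure, the smallest power-of-two texture that reaches a target texel size, and the memory a square map would take. The 4 bytes per texel is an assumption, since the depth format actually chosen by the driver may differ.

```python
def shadow_texel_size(film_size, texture_size):
    """World units covered by one shadow-map texel along one axis."""
    return film_size / texture_size


def texture_size_for(film_size, target_texel_size):
    """Smallest power-of-two texture size whose texels are no larger than the target."""
    size = 1
    while film_size / size > target_texel_size:
        size *= 2
    return size


def shadow_map_bytes(width, height, bytes_per_texel=4):
    """Approximate memory for one shadow map, assuming a fixed bytes-per-texel."""
    return width * height * bytes_per_texel
```

For the settings below, `shadow_texel_size(30, 4096)` is about 0.0073 units. A 4096×4096 map at 4 bytes per texel is 64 MiB, versus 16 MiB at 2048×2048.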

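Here is the code that I use to set up the primary light for my levels:

```python
from panda3d.core import DirectionalLight, Vec2

# Main directional light for the level.
light = DirectionalLight("general light")
light.setColor((1.0, 0.9, 0.85, 1))
# Enable shadow casting with a 4096x4096 shadow map.
light.setShadowCaster(True, 4096, 4096)
# The shadow lens covers a 30x30-unit area, from 10 to 40 units in front of the light.
light.getLens().setFilmSize(Vec2(30, 30))
light.getLens().setNearFar(10, 40)

# `render` is the scene root provided by ShowBase.
lightNP = render.attachNewNode(light)
lightNP.setHpr(-45, -45, 0)
lightNP.setPos(-14, -14, 14)
self.rootNode.setLight(lightNP)

# The ambient light is set up here; I'm omitting it for brevity's sake.

# Elsewhere in the initialisation code:
# parent the light to the player so its position follows them,
# while setCompass() keeps its rotation fixed relative to render.
lightNP.reparentTo(self.player.manipulator)
lightNP.setCompass()
```

It may be worth noting that my project is not an open-world game; it’s unlikely that I’ll have shadows being cast over large vistas.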
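Rather than tuning `setFilmSize` and `setNearFar` by trial and error, one option is to fit them to the region that actually needs shadows. This assumes that a `DirectionalLight`’s shadow lens is orthographic and centred on the light node, which is my understanding but not verified here. Under that assumption, the film must span twice the largest sideways offset of that region from the light’s axis, and near/far must bracket the region’s depth along the light direction. The pure-Python sketch below does this for an axis-aligned box. `fit_ortho_shadow_frustum` is a hypothetical helper, and the light direction is passed in as a plain vector; in Panda3D it could presumably be read from something like `lightNP.getQuat(render).getForward()`.

```python
import math
from itertools import product


def _normalize(v):
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)


def _cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def _dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def fit_ortho_shadow_frustum(light_pos, light_dir, box_min, box_max):
    """Return ((film_width, film_height), (near, far)) for an orthographic lens
    centred on light_pos and looking along light_dir that encloses the box."""
    forward = _normalize(light_dir)
    # Build two axes perpendicular to the light direction, using a helper axis
    # that is not (nearly) parallel to it.
    helper = (0.0, 0.0, 1.0) if abs(forward[2]) < 0.99 else (0.0, 1.0, 0.0)
    right = _normalize(_cross(forward, helper))
    up = _cross(right, forward)

    max_x = max_y = 0.0
    near, far = math.inf, -math.inf
    # Project all eight box corners into the light's frame.
    for corner in product(*zip(box_min, box_max)):
        rel = tuple(c - p for c, p in zip(corner, light_pos))
        max_x = max(max_x, abs(_dot(rel, right)))
        max_y = max(max_y, abs(_dot(rel, up)))
        depth = _dot(rel, forward)
        near, far = min(near, depth), max(far, depth)
    # The film is centred on the light's axis, so it must cover +/- the largest offset.
    return (2 * max_x, 2 * max_y), (near, far)
```

Because the light is parented to the player, `light_pos` and the box would both be expressed relative to the player. If `near` comes out at or below zero, the light is inside the box and would need moving back along its direction.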

There is one point that may complicate the answer: I’d like to use vertex colours to scale the intensity of the main and ambient lights, but not that of the player’s “lantern” light, so that I can produce levels with both dark and bright regions. The basic idea would be to multiply the calculated intensity of the main and ambient lights by this scalar value. My best guess at how to do so would be to dump and modify the automatically generated shaders, then apply them manually (presuming that doing so is acceptable…?), but I’m very much open to other suggestions.
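The per-pixel arithmetic I have in mind is roughly the following, written as plain Python rather than shader code. The vertex scalar multiplies the ambient and (shadowed) main-light terms, and the lantern term is added afterwards so that it is unaffected. `shade` and its parameters are hypothetical. `main_light` stands for the already-computed diffuse contribution of the directional light, and `shadow_factor` stands for the shadow-map result (0 = fully shadowed, 1 = fully lit).

```python
def shade(albedo, vertex_scale, ambient, main_light, shadow_factor, lantern):
    """Per-channel colour with the vertex scalar applied to the ambient and
    main-light terms only; the lantern contribution is left unscaled."""
    return tuple(
        a * (vertex_scale * (amb + main * shadow_factor) + lan)
        for a, amb, main, lan in zip(albedo, ambient, main_light, lantern)
    )
```

In a real shader, the scalar could come from one channel of the vertex colour. Which channel to use is a design choice, and is an assumption here.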

So, what should I do? Are there improvements to be made to my settings above? Features that I’m unaware of? Or should I be using some other shadowing technique, and if so, what do you recommend?

My thanks for any help given!