Here’s a new version, now with the local reflections (SSLR) filter included.

The original filter is by ninth (see the thread "Screen Space Local Reflections v2"); the one-pass blur is adapted from wezu’s suggestion in the same thread, and the two-pass blur is new.

The version integrated here is based on ssr_base.sha in SSLR_v2.zip posted by ninth in the linked thread. At least on my new Radeon R9 290 it produces better results than ssr_zfar.sha. There is some banding (visible by switching the blurring off), which I think could be due to a depth buffer precision issue.
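To illustrate why depth precision is a plausible suspect, here is a rough Python sketch. It assumes a standard perspective projection and a 24-bit integer depth buffer; the near/far values and the function names are made up for illustration and are not taken from the filter code. It estimates how large a change in distance is needed before the stored depth value changes at all.

```python
def depth_from_distance(z, near, far):
    """Window-space depth in [0, 1] for eye-space distance z.

    Assumes a standard perspective projection (hypothetical setup,
    not read from the SSLR shader).
    """
    return far * (z - near) / (z * (far - near))


def distance_from_depth(d, near, far):
    """Inverse of depth_from_distance."""
    return far * near / (far - d * (far - near))


def depth_step(z, near, far, bits=24):
    """Distance covered by one depth-buffer level around distance z."""
    levels = 2 ** bits - 1
    # Quantize the depth the way an integer depth buffer would.
    q = min(round(depth_from_distance(z, near, far) * levels), levels - 1)
    lower = distance_from_depth(q / levels, near, far)
    upper = distance_from_depth((q + 1) / levels, near, far)
    return upper - lower


if __name__ == "__main__":
    near, far = 1.0, 1000.0  # assumed clip planes
    for z in (2.0, 10.0, 100.0, 500.0):
        print(z, depth_step(z, near, far))
```

The step grows roughly with the square of the distance, so positions reconstructed from depth far from the camera fall onto coarse layers. If that is what happens in the reflection ray march, it would show up as bands, and moving the near plane further out should reduce it.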

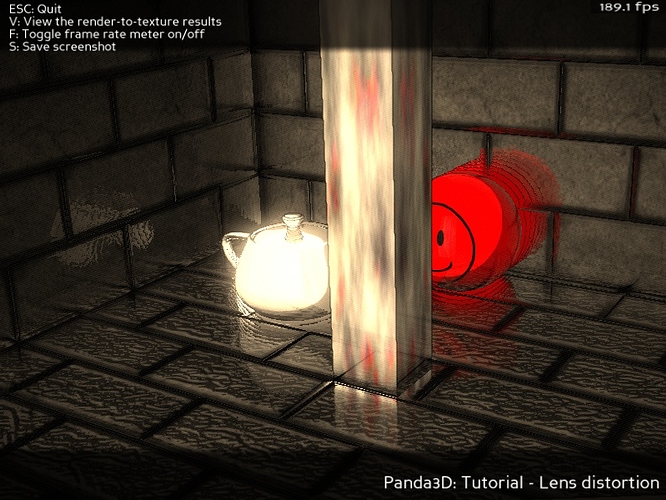

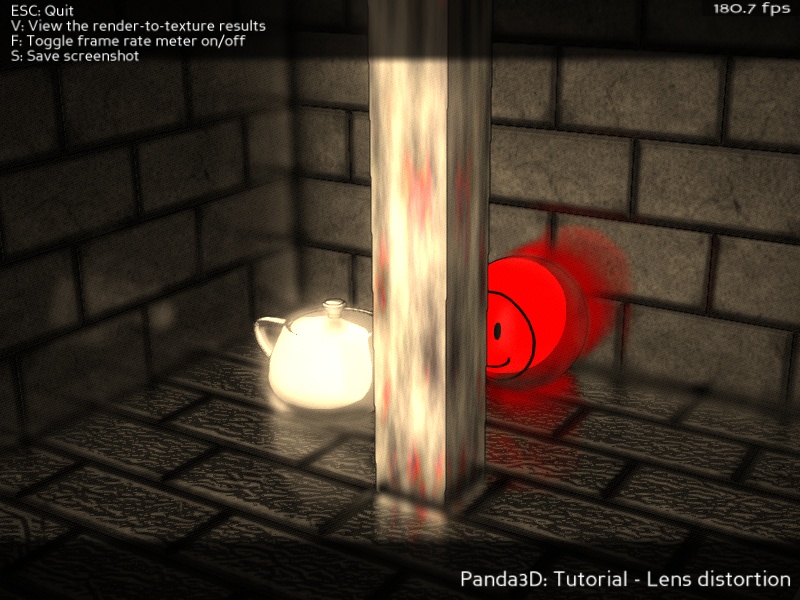

The screenshots were rendered with the following filters enabled: FXAA, CartoonInkThin (it’s a cartoon teapot), SSLR, SSAO, Bloom, Desaturation (with hue bandpass), Vignetting, FilmNoise, and Cutout (smoothed, partly translucent black bars). The test scene is by ninth; only the direction and intensity of the directional light have been changed.

EDIT: added missing setLocalReflection()/delLocalReflection() to CommonFilters.py (old API).

CommonFilters190_with_SSLR.zip (418 KB)