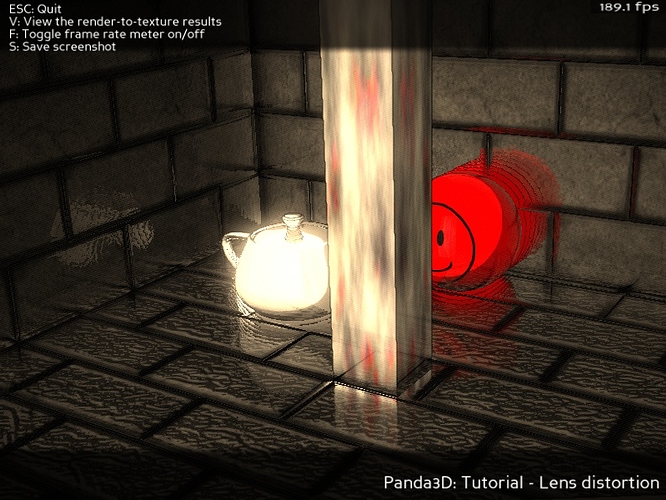

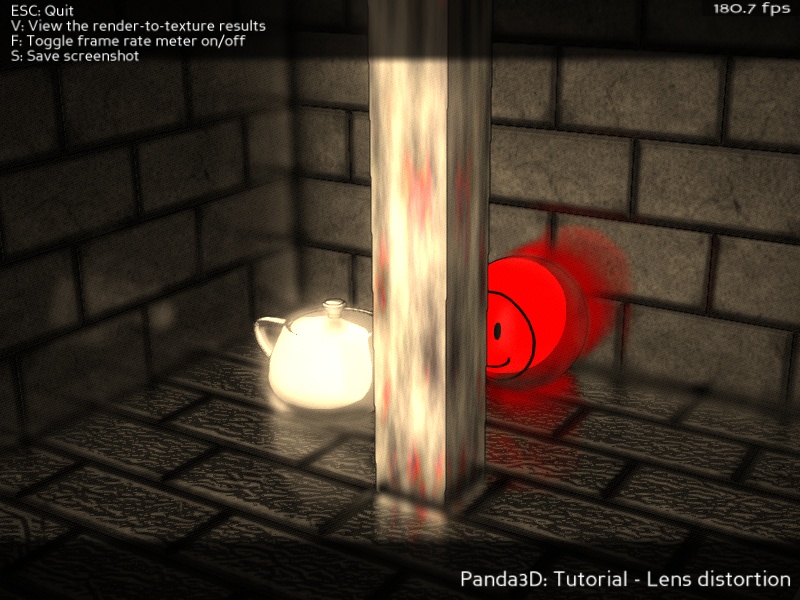

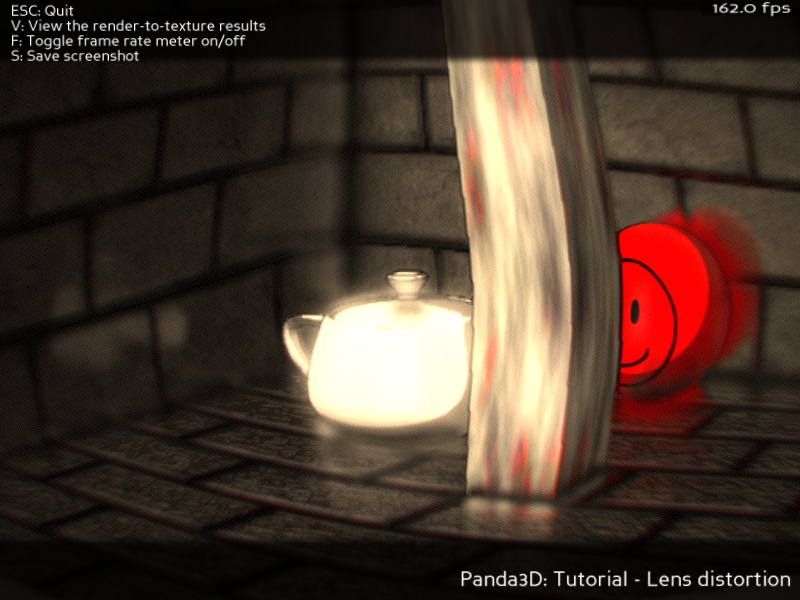

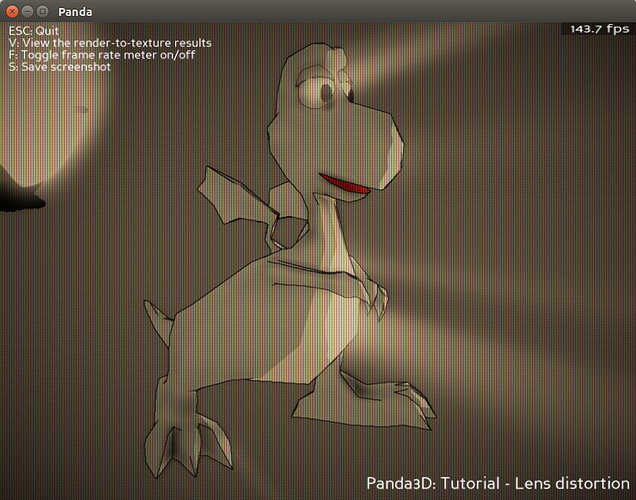

Attached is a version with the new filters included. In alphabetical order, they are CartoonInkThick, CartoonInkThin, Desaturation, LensDistortion and LensFlare.

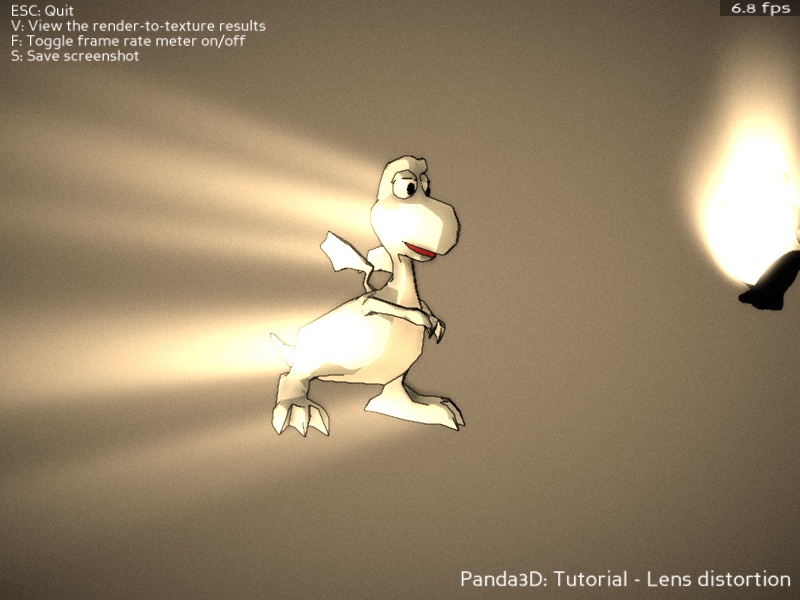

Here are some test screenshots of radioactive cartoon animals (the framerate is low because the GPU is only an Intel Ironlake Mobile):

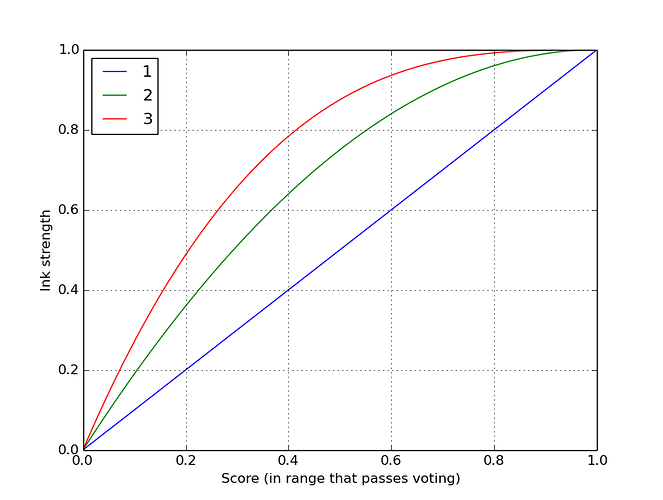

The two new cartoon ink filters are intended to replace the old one in new scripts; the old one is still provided for backward compatibility. The new filters are based on two different algorithms and produce different-looking results, so both are included. (These screenshots were made with CartoonInkThin.)

It turns out that the last posted version of the framework was already sufficient for all of the new filters. Of the filters so far, the one most demanding of framework features was VolumetricLighting, with its “source” parameter.

So now only the final cleanup remains before the overhaul is done, unless any new points are brought up in review.

The current status is that I’ve updated some of the docstrings in the framework, but not yet all of them. I also haven’t yet decided on the final names for setup() (both variants), make() and synthesize().

I think I will make the final VolumetricLighting a CompoundFilter for ease of use; what is currently called VolumetricLighting will become VolumetricLightingCompositor or some such.
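To illustrate the planned split, here is a minimal, framework-free sketch of the wrapper idea. It assumes a CompoundFilter is simply a filter that internally owns and configures a chain of other filters. The `Filter` and `CompoundFilter` classes below are hypothetical stand-ins, not the actual CommonFilters API, and the `"color"` default for `source` is an assumption made only for this example.

```python
# Hypothetical sketch: these classes are stand-ins, NOT the real CommonFilters API.

class Filter:
    """Minimal stand-in for a single filter with named parameters."""

    def __init__(self, **params):
        self.params = dict(params)

    def describe(self):
        # A plain filter reports only itself.
        return [type(self).__name__]


class CompoundFilter(Filter):
    """A filter that is internally a fixed chain of other filters."""

    def __init__(self, children, **params):
        super().__init__(**params)
        self.children = list(children)

    def describe(self):
        # Flatten the chain so a caller can see which filters actually run.
        names = []
        for child in self.children:
            names.extend(child.describe())
        return names


class VolumetricLightingCompositor(Filter):
    """Low-level filter: what is currently called VolumetricLighting."""


class VolumetricLighting(CompoundFilter):
    """User-facing wrapper that builds and configures the compositor itself."""

    def __init__(self, source="color", **params):  # default "color" is assumed
        compositor = VolumetricLightingCompositor(source=source, **params)
        super().__init__([compositor], source=source, **params)
```

With this structure, a script only creates `VolumetricLighting(source=...)` and never touches the compositor directly, while advanced users can still use `VolumetricLightingCompositor` on its own.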

EDIT: Fixed a small input-handling bug in the code. New version attached.

CommonFilters190_withnewfilters.zip (215 KB)