Recently, I had some ideas for improving the cartoon shader, as mentioned here:

I now have a working prototype. What I’ve added is:

- Smoothed light/dark boundaries in light ramping. In addition to the previous sharp thresholding, a linearly interpolated transition is now supported in both single and double threshold modes. The length of the interpolation range can be adjusted (separately for each of the two transitions in double threshold mode). This relies on lighting values varying continuously across smooth surfaces, so it is not a proper general-purpose way to antialias the boundaries, but in practice it seems to give satisfactory results (at least in my tests).

- Light ramping can now optionally affect the specular lighting contribution, too. The threshold, level and smoothing parameters for the specular component can be adjusted separately. New versions of make_single_threshold() and make_double_threshold() have been added to accommodate this. This feature is mainly useful for anime-style hair.
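To make the smoothed ramp concrete, here is a minimal Python sketch of how a scalar light intensity could be mapped to an output level with a linear transition of adjustable width, applied independently to the diffuse and specular terms. It is not the shader generator code: the function names, the parameter order, the assumption that the interpolation range is centred on the threshold, and the assumption that the output is 0 below the first threshold are all hypothetical. The signatures of the new make_single_threshold()/make_double_threshold() variants are not reproduced here.

```python
def _linstep(edge0: float, edge1: float, x: float) -> float:
    """0 below edge0, 1 above edge1, linear in between; a hard step if the edges coincide."""
    if edge1 <= edge0:
        return 1.0 if x >= edge0 else 0.0
    return min(1.0, max(0.0, (x - edge0) / (edge1 - edge0)))


def ramp_single(x: float, threshold: float, level: float, smooth: float = 0.0) -> float:
    """Single threshold: output 0 below `threshold`, `level` above it.

    `smooth` is the width of the linear transition, assumed (hypothetically)
    to be centred on the threshold; smooth == 0 reproduces a sharp step.
    """
    half = 0.5 * smooth
    return level * _linstep(threshold - half, threshold + half, x)


def ramp_double(x: float, t0: float, l0: float, t1: float, l1: float,
                smooth0: float = 0.0, smooth1: float = 0.0) -> float:
    """Double threshold: 0 -> l0 around t0, then l0 -> l1 around t1 (t0 < t1).

    Each of the two transitions has its own smoothing width.
    """
    a = _linstep(t0 - 0.5 * smooth0, t0 + 0.5 * smooth0, x)
    b = _linstep(t1 - 0.5 * smooth1, t1 + 0.5 * smooth1, x)
    return l0 * a + (l1 - l0) * b


def toon_terms(diffuse: float, specular: float,
               diffuse_params: dict, specular_params: dict) -> tuple:
    """Ramp diffuse and specular independently, each with its own parameters."""
    return (ramp_double(diffuse, **diffuse_params),
            ramp_single(specular, **specular_params))


if __name__ == "__main__":
    # Sweep across a threshold of 0.5 with a 0.1-wide transition.
    for x in (0.40, 0.45, 0.50, 0.55, 0.60):
        print(x, ramp_single(x, threshold=0.5, level=1.0, smooth=0.1))
```

With all smoothing widths set to 0, both functions reduce to a sharp step, so in this model the smoothing only changes pixels that fall inside the transition range.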

-

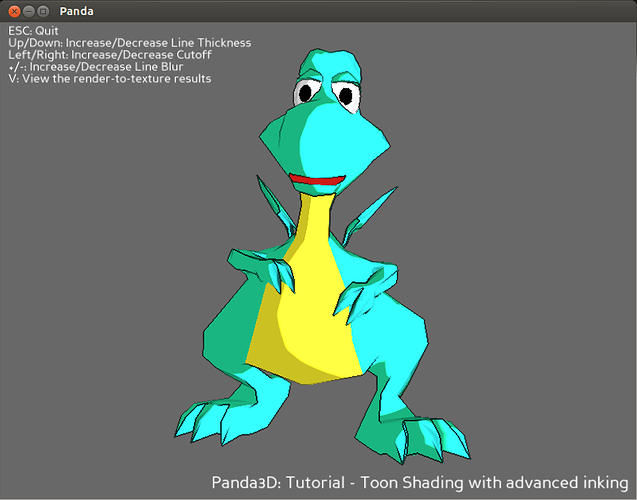

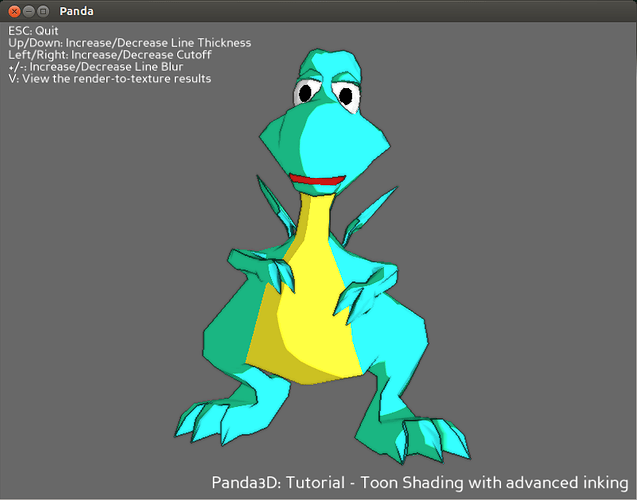

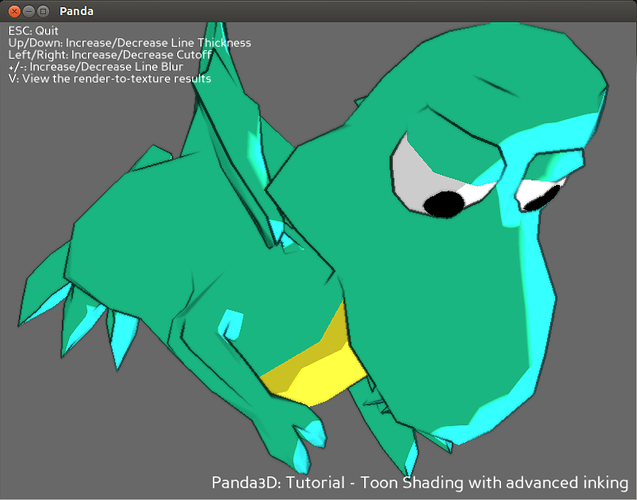

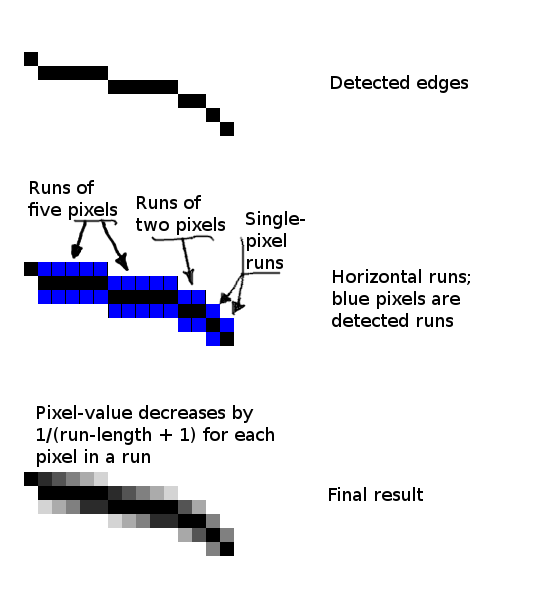

An “advanced ink” filter has been added to CommonFilters.py. It is based on the existing inker, but it has some new features. It accounts for the distance of each pixel from the camera (nearer = larger separation parameter → thicker line), smooths the lines using a blur filter, and optionally the inking can be rendered into a double-sized buffer to reduce artifacts at nearly horizontal or vertical edges. (This last solution is not completely satisfactory, so I’m not sure yet whether to keep that feature or not.)

-

The “cutoff” parameter of Tut-Cartoon-Advanced.py is now integrated into the inker in CommonFIlters.py. It wasn’t many lines, and I found it odd that this potentially useful feature was missing.
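The per-pixel logic of a depth-aware inker with a cutoff can be illustrated on the CPU. This is a minimal sketch, assuming a grid of view-space normals and a grid of camera distances as input; the names (`ink_mask`, `box_blur`, `base_separation`, `ref_depth`) and the inverse-distance scaling of the separation are hypothetical choices, not the filter’s actual shader code or the CommonFilters API. The 3x3 box blur only stands in for the blur pass, and the double-sized buffer option is not modelled.

```python
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]
Grid = List[List[float]]


def ink_mask(normals: Sequence[Sequence[Vec3]],
             depth: Sequence[Sequence[float]],
             base_separation: float = 1.0,
             ref_depth: float = 1.0,
             cutoff: float = 0.3) -> Grid:
    """Return 1.0 where an ink line should be drawn, else 0.0.

    Hypothetical CPU model of a depth-aware inker: the sampling offset
    shrinks with distance, and normal differences up to `cutoff` are ignored.
    """
    h, w = len(normals), len(normals[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            # Nearer pixel (smaller depth) -> larger separation -> thicker line.
            d = max(depth[y][x], 1e-6)
            sep = max(1, round(base_separation * ref_depth / d))
            # Four neighbours at +/- sep, clamped to the image border.
            samples = [
                normals[y][min(x + sep, w - 1)],
                normals[y][max(x - sep, 0)],
                normals[min(y + sep, h - 1)][x],
                normals[max(y - sep, 0)][x],
            ]
            # Largest per-component spread of the sampled normals.
            spread = max(max(c) - min(c) for c in zip(*samples))
            out[y][x] = 1.0 if spread > cutoff else 0.0
    return out


def box_blur(img: Grid) -> Grid:
    """3x3 box blur; border pixels average only the neighbours that exist."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            vals = [img[yy][xx]
                    for yy in range(max(0, y - 1), min(h, y + 2))
                    for xx in range(max(0, x - 1), min(w, x + 2))]
            out[y][x] = sum(vals) / len(vals)
    return out


if __name__ == "__main__":
    # Left half faces the camera, right half faces sideways: one vertical crease.
    W = H = 6
    normals = [[(0.0, 0.0, 1.0) if x < W // 2 else (1.0, 0.0, 0.0)
                for x in range(W)] for _ in range(H)]
    far = [[1.0] * W for _ in range(H)]
    near = [[0.5] * W for _ in range(H)]
    print("far: ", ink_mask(normals, far)[0])
    print("near:", ink_mask(normals, near)[0])
    print("blurred:", [round(v, 2) for v in box_blur(ink_mask(normals, far))[2]])
```

In this model, halving the distance doubles the sampling offset, so the same crease produces a line twice as wide when the object is closer, and raising `cutoff` suppresses weak creases entirely.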

Also, I’ve fixed the two bugs I found:

- Fixed the double threshold light ramp (bugs.launchpad.net/panda3d/+bug/1214782)

- Fixed a shader generator error with some material property combinations (bugs.launchpad.net/panda3d/+bug/1219422)

At this point, I have some questions:

- Are you guys interested in all/some/any of these changes? If some of them are considered potentially useful to other Panda3D users, I would like to work toward getting them integrated into the official source.

- What is the proper protocol for posting source code? Attach a patch here?

- In case there is interest, what is the procedure for code review?

- Is there a way to post pictures? I’d like to share some images highlighting the modifications.