I am trying to build a camera-coverage simulation in which I need to find out what volume of the free space in my environment is effectively visible to my camera. My initial plan was to use a directional light to mimic the camera and see what portion of the environment it illuminates. However, I need to calculate the volume illuminated by this directed light source, and there are also objects in my virtual environment that can block the light. I don’t know how to calculate the illuminated volume in Panda3D, so if any Panda3D practitioners have ideas on how to do that, I would welcome suggestions.

Hmm… My instinct is to render the depth-buffer to an off-screen texture, and to then use that to calculate distances and thus volumes.

A naive approach might be to render this as usual, and to then treat each pixel as a tiny frustum, with length given by the depth-buffer. Since you know the image-size and the number of pixels, you can calculate the width and height of a pixel-frustum at its near-end. From this it should then be possible to calculate the volume of the frustum.

Now, as I said, this is a naive approach. If a reasonable estimate is acceptable, you might perhaps perform a second render at lower resolution, and then use the resulting larger pixels for the frustum calculation. This should produce a volume of lower accuracy, but potentially do so rather more quickly.
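To make the per-pixel idea a bit more concrete, here is a minimal sketch in plain Python. It assumes you have already converted the depth-buffer into linear distances along the camera's forward axis (raw depth-buffer values are typically non-linear, so that conversion is a separate step), and it ignores the small volume in front of the near-plane. The function name and parameters are just illustrative, not anything from Panda3D.

```python
import math


def visible_volume(depths, h_fov_deg, v_fov_deg):
    """Sum per-pixel pyramid volumes from a grid of linear depths.

    depths: list of rows, each a list of distances (along the camera's
    forward axis) to the first surface seen through that pixel.
    """
    rows = len(depths)
    cols = len(depths[0])
    # Width and height of the whole image footprint at unit distance.
    full_w = 2.0 * math.tan(math.radians(h_fov_deg) / 2.0)
    full_h = 2.0 * math.tan(math.radians(v_fov_deg) / 2.0)
    # Footprint area of a single pixel at unit distance.
    pixel_area = (full_w / cols) * (full_h / rows)
    total = 0.0
    for row in depths:
        for d in row:
            # Pyramid with its apex at the camera: V = base_area * d / 3,
            # where base_area grows with d squared.
            total += pixel_area * d ** 3 / 3.0
    return total


# A flat wall 3 units away, seen through a 90-degree square view.
volume = visible_volume([[3.0] * 4 for _ in range(4)], 90.0, 90.0)
```

Passing in a smaller grid of depths corresponds to the lower-resolution render mentioned above: fewer, larger pixel-frustums, and so a rougher but cheaper estimate.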

Is there an efficient way to voxelize my 3D room model in Panda3D?

I’ve not worked with voxels myself that I recall, so I don’t know, I’m afraid. :/

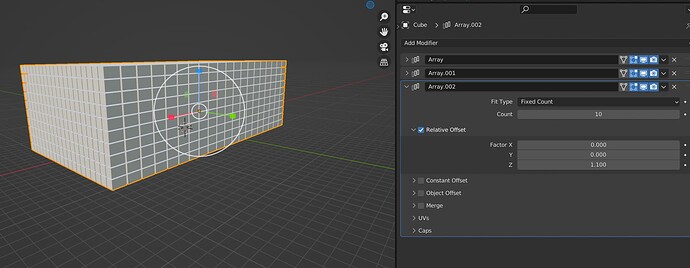

I made a stack of cubes in Blender.

So, what I want to do is load this object into Panda3D and treat each cube as an individual node, so that I can do ray collision on them and develop logic for each cube individually.

But when I load this model into Panda3D, it will be one big node. How can I treat each small cube as an individual node in Panda3D? Sorry if this is too basic a question; I am quite new to Panda3D.

Also, I saw that we can divide one big cube into small cubes using an octree, but I can’t find good resources on how to do so. Can you also guide me on using an octree in Panda3D?

The thing is that you would have to generate this array of cubes procedurally, which will give you the opportunity to assign and configure collision bodies for each cube separately.
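As a rough illustration of what "procedurally" could mean here, the sketch below just computes the centre of each cube in a flat wall. The function name, the 10 x 6 size and the choice of the X-Z plane are assumptions made for the example.

```python
def wall_cell_centres(columns, rows, cell_size, origin=(0.0, 0.0, 0.0)):
    """Return the centres of a columns x rows wall of cubes in the X-Z plane."""
    ox, oy, oz = origin
    centres = []
    for row in range(rows):
        for col in range(columns):
            centres.append((
                ox + (col + 0.5) * cell_size,  # step along X
                oy,                            # the wall is flat in Y
                oz + (row + 0.5) * cell_size,  # step along Z (up)
            ))
    return centres


# Example: a wall of 10 x 6 unit cubes.
centres = wall_cell_centres(10, 6, 1.0)
```

Each centre could then be given its own node and its own collision solid, so that every cube can be identified individually when something hits it.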

As for an octree, it’s just a class that contains a reference to its parent and its children, as well as the data it stores; in your case that will be geometry, namely cubes. It doesn’t depend on the engine, so plain Python is all you need.
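A minimal sketch of such a class might look like the following. The class and attribute names are made up for the example, and it only handles uniform subdivision of a cube; a real octree would usually subdivide only where there is something to store.

```python
class OctreeNode:
    """One cubic cell: knows its parent, its children and its payload."""

    def __init__(self, centre, half_size, parent=None):
        self.centre = centre
        self.half_size = half_size
        self.parent = parent
        self.children = []
        self.data = None  # e.g. a reference to this cell's cube geometry

    def subdivide(self, depth):
        """Split this cell into 8 octants, recursively, `depth` times."""
        if depth <= 0:
            return
        quarter = self.half_size / 2.0
        cx, cy, cz = self.centre
        for dx in (-quarter, quarter):
            for dy in (-quarter, quarter):
                for dz in (-quarter, quarter):
                    child = OctreeNode((cx + dx, cy + dy, cz + dz), quarter, self)
                    child.subdivide(depth - 1)
                    self.children.append(child)

    def leaves(self):
        """Return all cells that have no children."""
        if not self.children:
            return [self]
        result = []
        for child in self.children:
            result.extend(child.leaves())
        return result


# An 8 x 8 x 8 cube split twice gives 64 cells of size 2 x 2 x 2.
root = OctreeNode((0.0, 0.0, 0.0), 4.0)
root.subdivide(2)
cells = root.leaves()
```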

While you should indeed find a single node on loading the object, you should also find that the node in question has your individual nodes as its children.

Thus you should be able to access your nodes either via calls to “getChild(node-index)”, or to “find(node-name)”. Something like this:

    myModel = loader.loadModel("model")

    # Get the first and fifth child-node of the model:
    cube1 = myModel.getChild(0)
    cube5 = myModel.getChild(4)

    # Get the child-node of the model that has the name "someCube":
    cube = myModel.find("**/someCube")

For more information on searching via the “find” method, see this manual page.

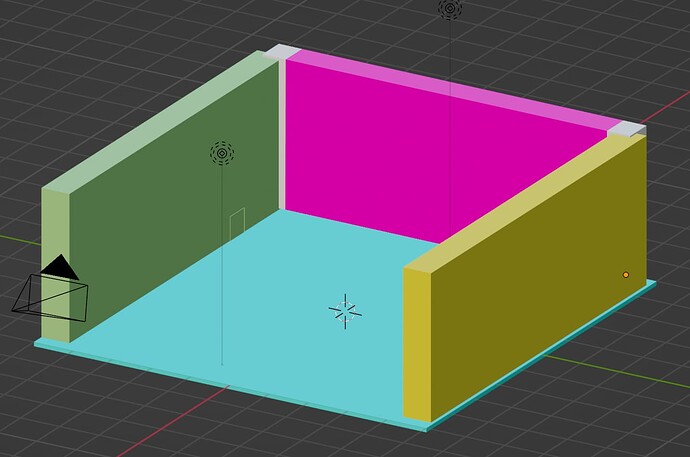

Thank you for the tutorials. I have now made a simple room model in Blender and divided it into small cubes so that I can treat them as voxels. I looked through the manual page to learn about CollisionSegment, as I am trying to mimic a camera and CollisionSegment seems best for defining the field of view (FOV).

The thing is, my camera object will have many CollisionSegments projected towards the room. I read in the Panda3D manual that we need to attach the collision object to our model object in order to simulate the collision.

1. How can I do that in order to use CollisionSegment?

2. Can you guide me in mimicking a camera object that contains hundreds of CollisionSegments projecting towards the room?

3. When I loaded this model as a bam file in Panda3D, the orientation was not the same as in Blender. I read through a few threads in this forum about orientation, but I am still confused about how to set it right. Do you have any tutorial that explains orientation in Panda3D for models imported from Blender?

PS: The 3D structure shown above is made up of small cubes. Each wall is made up of 10 x 6 cubes, which I will treat as voxels while simulating the collision.

When you create a collision object, you generally create a CollisionNode which contains whatever collision-solid you’re using (in this case, one or more CollisionSegments), and attach that node to the scene-graph as with any other node.

That said, you should find an example that’s pretty close to what you’re trying to do on the following manual page:

https://docs.panda3d.org/1.10/python/programming/collision-detection/clicking-on-3d-objects

(But if you’re concerned with performance, then I strongly recommend that you don’t follow that page in applying “GeomNode.getDefaultCollideMask()”. That enables collision with visible geometry, which is, I believe, significantly slower than collision with dedicated collision geometry.)

I would think that it would be much as the above-linked manual page shows, but with a great many CollisionSegments instead of one CollisionRay.
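As a sketch of how the segments themselves might be laid out, the plain-Python function below computes end-points for a fan of segments that fills a given field of view. The function name, the grid resolution and the range are assumptions for the example; it uses Panda3D's default axes (+Y forward, +X right, +Z up).

```python
import math


def fov_segment_endpoints(h_fov_deg, v_fov_deg, columns, rows, max_range):
    """End-points of a columns x rows fan of segments starting at the origin."""
    half_w = math.tan(math.radians(h_fov_deg) / 2.0)
    half_h = math.tan(math.radians(v_fov_deg) / 2.0)
    endpoints = []
    for r in range(rows):
        # Sample at the cell centres of an image plane one unit ahead.
        z = half_h * (2.0 * (r + 0.5) / rows - 1.0)
        for c in range(columns):
            x = half_w * (2.0 * (c + 0.5) / columns - 1.0)
            # Scale the direction (x, 1, z) so the segment has length max_range.
            scale = max_range / math.sqrt(x * x + 1.0 + z * z)
            endpoints.append((x * scale, scale, z * scale))
    return endpoints


# 20 x 10 = 200 segments covering a 60 x 40 degree view, 15 units long.
endpoints = fov_segment_endpoints(60.0, 40.0, 20, 10, 15.0)
```

Each end-point could then become one `CollisionSegment(0, 0, 0, x, y, z)` added to a single CollisionNode parented to the camera node, so that moving or rotating that node moves the whole fan with it.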

That said… Let me add a note of warning here: I do fear that this approach will prove very slow. You’re asking the collision system to do an awful lot of work, and are adding to your scene quite a few nodes, which might slow things down.

I do think that it might nevertheless be worth a try, and I may well be proven mistaken. But I do want to have that note of warning said.

Hmm, that’s curious. How are you exporting your object from Blender?

I used the blend2bam Python library to do that.

Ah, fair enough. I don’t use and am not experienced in that tool, so I don’t have much insight to offer, I fear. I’ll thus leave this point for others to hopefully answer, then, with my apologies!