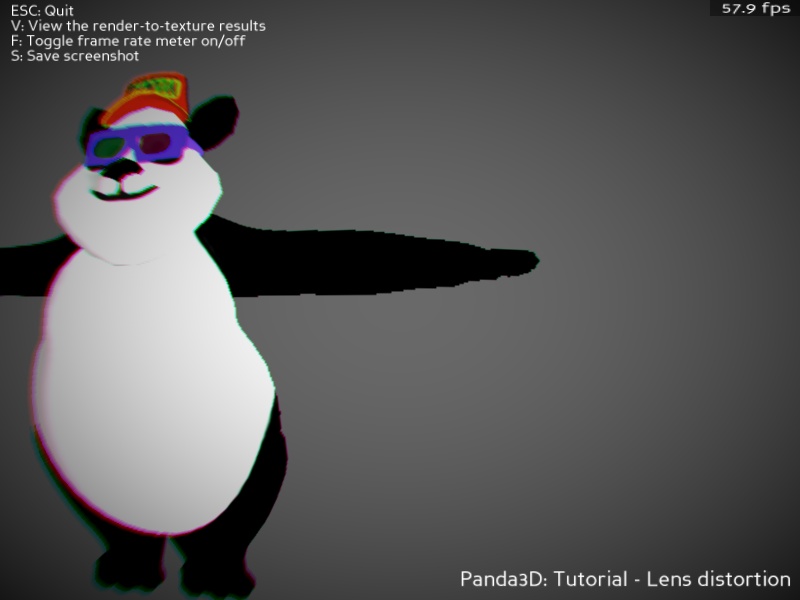

While I work on the new CommonFilters architecture, here are screenshots of one more upcoming filter: lens distortion.

The filter supports barrel/pincushion distortion, chromatic aberration and vignetting. Optionally, the barrel/pincushion distortion can also radially blur the image to simulate a low-quality lens.
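For readers unfamiliar with these effects, here is a rough sketch of the per-pixel math that commonly sits behind them. It is not the filter's actual shader code: the function names, the parameters (`k`, `spread`, `strength`) and the simple one-coefficient radial model are all assumptions made for illustration, written as plain Python rather than shader code.

```python
# Illustrative sketch only; not the CommonFilters implementation.
# Texture coordinates (u, v) are assumed to lie in [0, 1] with the
# image center at (0.5, 0.5).

def distort_uv(u, v, k):
    """Radially displace a lookup coordinate around the image center.

    k > 0 moves the lookup outward as the radius grows, k < 0 moves it
    inward. Whether a given sign looks like "barrel" or "pincushion"
    depends on whether the mapping is applied to the lookup coordinate
    (as here) or to the output position.
    """
    dx, dy = u - 0.5, v - 0.5
    r2 = dx * dx + dy * dy          # squared distance from the center
    scale = 1.0 + k * r2            # one-coefficient radial model (assumed)
    return 0.5 + dx * scale, 0.5 + dy * scale


def chromatic_uvs(u, v, k, spread):
    """Per-channel lookup coordinates for chromatic aberration.

    Each color channel uses a slightly different distortion strength,
    so the channels separate toward the edges of the image.
    """
    return {
        "r": distort_uv(u, v, k + spread),
        "g": distort_uv(u, v, k),
        "b": distort_uv(u, v, k - spread),
    }


def vignette(u, v, strength):
    """Brightness multiplier that falls off toward the corners.

    The squared radius is 0 at the center and 0.5 at the corners, so
    strength = 1.0 darkens the corners fully to black.
    """
    dx, dy = u - 0.5, v - 0.5
    r2 = dx * dx + dy * dy
    return max(0.0, 1.0 - strength * r2 / 0.5)
```

In this sketch the center pixel is left untouched by all three effects, and everything scales with the squared distance from the center, which is why the effects are most visible near the edges of the frame.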

This filter will be available once the architecture changes are done.