Welcome, 3D artists and Panda3D developers! Let’s build a 10-minute interactive real-time demo together that shows off how cool Panda3D really is. Our goal is to have fun and build something truly cool to get more artists and developers using and developing Panda3D.

First Major Thematic Update

We’re moving from “hostile aliens” to non-sentient robots (specifics to be determined) for the shooter segments.

Outline:

Section 1: Multiple Perspective Intro with Procedural Generation of a Starship

Section 2: Flying the Starship to a Space Station

Section 3: First Person Shooter (or “Sneaker”) Trek through a Space Station

Section 4: Portal into Space, Blow Up the Space Station (or don’t), and Fly Away

Section 1 Details:

Original Inspiration from @Epihaius:

So, we have a large futuristic hangar with a large futuristic starship. Our camera perspective could be in a similar vein to Signal Ops ("A game that will multiply your perspective"), in that we have multiple perspectives that we can switch between. It need not be exactly like Signal Ops; maybe we just press Arrow Right or Arrow Left to switch between “security camera views” as the starship is being constructed by robots.
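To make the arrow-key idea concrete, here is a minimal sketch of how the view switching could work. Everything in it is an assumption for illustration: the `CameraView` and `CameraSwitcher` names, the `attach_to_showbase` helper, and the placeholder coordinates are hypothetical and not taken from the project repository. The only Panda3D calls it relies on are `accept`, `disableMouse`, and `setPos`/`setHpr` on `base.camera`, plus the built-in `arrow_left` and `arrow_right` key events.

```python
from dataclasses import dataclass
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]


@dataclass(frozen=True)
class CameraView:
    """One fixed "security camera": a position plus heading/pitch/roll."""
    name: str
    pos: Vec3
    hpr: Vec3


class CameraSwitcher:
    """Cycles through a fixed list of views, wrapping around at both ends."""

    def __init__(self, views: Sequence[CameraView]) -> None:
        if not views:
            raise ValueError("at least one camera view is required")
        self._views = list(views)
        self._index = 0

    @property
    def current(self) -> CameraView:
        return self._views[self._index]

    def step(self, delta: int) -> CameraView:
        """Move forward (+1) or backward (-1) and return the new view."""
        self._index = (self._index + delta) % len(self._views)
        return self.current


def attach_to_showbase(base, switcher: CameraSwitcher) -> None:
    """Bind the arrow keys of a ShowBase instance to the switcher.

    `base` is expected to be a direct.showbase.ShowBase.ShowBase object;
    it is passed in so this module does not import Panda3D itself.
    """

    def apply(view: CameraView) -> None:
        base.camera.setPos(*view.pos)
        base.camera.setHpr(*view.hpr)

    # Turn off the default mouse-driven camera so it does not fight us.
    base.disableMouse()
    apply(switcher.current)
    base.accept("arrow_right", lambda: apply(switcher.step(+1)))
    base.accept("arrow_left", lambda: apply(switcher.step(-1)))


# Hypothetical hangar views; the coordinates are placeholders only.
HANGAR_VIEWS = [
    CameraView("overhead", (0.0, 0.0, 60.0), (0.0, -90.0, 0.0)),
    CameraView("bay_door", (0.0, -80.0, 10.0), (0.0, 0.0, 0.0)),
    CameraView("catwalk", (40.0, 0.0, 20.0), (90.0, -15.0, 0.0)),
]
```

In an actual Panda3D app, this would be wired up by creating a `ShowBase()` instance, calling `attach_to_showbase(base, CameraSwitcher(HANGAR_VIEWS))`, and then `base.run()`. Keeping the cycling logic separate from the engine calls means the view list can be tested and tuned without opening a window.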

Section 1 Initial Requirements:

- Very high quality starship 3D model

- Somewhat sophisticated procedural generation code

- Very high quality hangar 3D models

Section 2 Details:

We will probably want some obvious entry of the player character into the finished starship. I would not suggest going too deep on the character development here, as it could quickly get out of scope. I suggest the player character model comes in from a door somewhere inside the hangar, and is directed in third-person perspective up a ramp into the starship cockpit. After the character sits down, the camera switches to first-person perspective, and the player flies the starship out of the hangar and into space.

Section 2 Initial Requirements:

- Fully rendered starship interior

- Outer space environment (probably just a skybox)

- Mothership / port hangar to launch from

Section 3 Details:

The player docks the starship in first-person perspective to the space station. The player egresses from the vehicle through a docking tunnel and enters the space station. The player is immediately greeted with hostile robots (good thing we brought our pilot’s sidearm). *Alternative playthrough: Sneak with slightly increased difficulty.

Section 3 Initial Requirements:

- Very high quality space station exterior 3D model

- Very high quality space station interior 3D models

- Hostile robot models, let’s say 3 NPC variants

- Very high quality pistol 3D model, maybe one or two high quality weapons found while working your way through the station

Section 3 Updates:

- Our project goal on this section has been updated to include the following:

Section 4 Details:

The hostile forces become overwhelming. Luckily, the player discovers a way to portal out into space, if only they can find a spacesuit. The player discovers the spacesuit and opens a portal directly into space, taking a self-destruct remote detonator with them. After portaling into open space, the player presses the detonator and the space station explodes in spectacular VFX fashion. *Alternative playthrough: Don’t detonate the station, and fly away all the same. The starship, on autopilot, comes and picks up the player. The game is now in the win condition. Fade to black.

Section 4 Initial Requirements:

- Very high quality space suit model

- Very high quality detonator model

- Portal programming magic (which has been demonstrated in Panda before)

- Space station explosion VFX

Audio Requirements

- Ambient soundtrack (space station ambiance for instance)

- Sci-fi/fantasy gun sounds

- Hostile robot noises

- Starship noises, flight sounds

- Music!

Further Details

This is probably the most ambitious project ever conceived of for the Panda3D development community. It will require full commitment of at least several people with great skill in different areas. “Programmer Art” will not be sufficient. We need professional quality 3D assets. Let’s discuss how this is achieved. I would like to commit $200 of my own money as an act of good faith once we have very well-defined asset goals in place for the right 3D artist/artists.

Progress so far:

Most recent updates:

In just a few weeks of development, we have made several core contributions:

Our GitHub page for collaborators:

To contribute code and 3D models, we recommend forking the P3D Space Tech Demo repository to your GitHub profile and then submitting pull requests to us for review. We’re happy to receive contributions at this early stage.

Some early graphics:

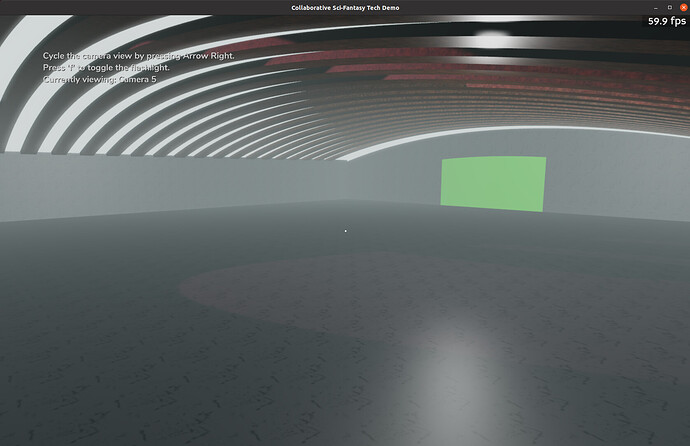

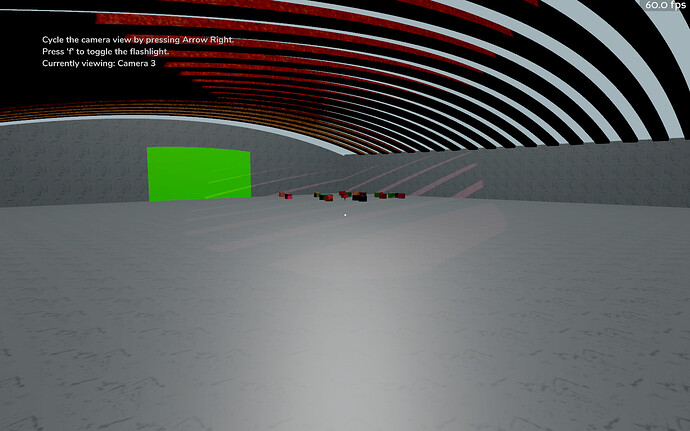

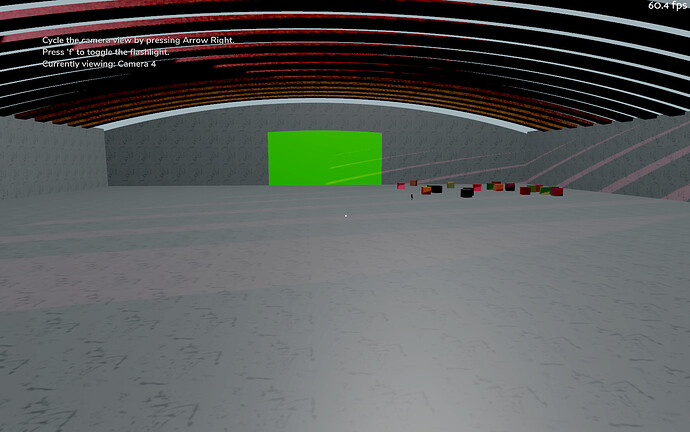

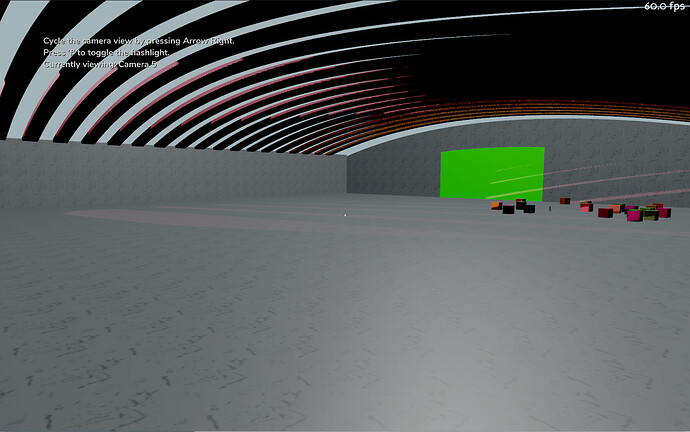

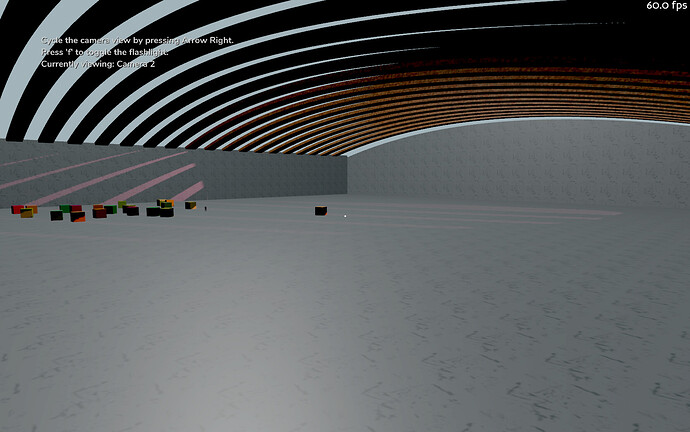

The initial hangar:

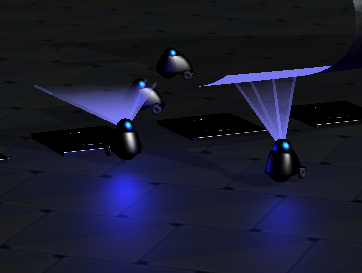

Initial portal programming demo:

With a ramp: